Oktane18 API and Microservices Best Practice

Transcript

Keith Casey: All right, as he said, we had to strip out all the forecast sales data and everything. This is not the right presentation to come to if you're interested in any of that. I'm Keith Casey and the title that she gave is not my actual title. My actual title is API Problem Solver. I see myself as an API Psychologist. I help you solve your problems before they become issues.

Matt Raible: My name is Matt Raible. I'm a hick from the sticks. I grew up in Montana, and I am on the Developer Relations Program, and do developer advocacy, speak at conferences, write examples. Some example programmer, basically.

Keith Casey: An example programmer or example of a programmer?

Matt Raible: Kind of both.

Keith Casey: All right. Today, we're going to be talking about securing Microservices with API Access Management. We're going to talk about some of the patterns that we've seen from customers in the real world. This is not a lot of hypothetical stuff. This is things that we're seeing on a day-to-day basis. We're going to go through five parts of this presentation. First of all, assumptions. When we write presentations, we have assumptions baked into our thought process about who you are, so we're going to go over those to see how close we were. We're going to go a little bit into the background of Microservices. Some people are still getting their feet wet, and some people are still just figuring out what they are. We just want to bring everyone up to a common level. We'll go through a couple of architectural approaches, maybe hit some pitfalls if we have some time, and then open questions from there: some things that we will not address during this presentation. With that, I'm going to go ahead and dive in, right.

Okay, cool. Assumptions. I'm assuming everyone here is technical, right, or has built things or does build things. Does anyone not build things? All right, fantastic. I assume most people here are dependent on APIs. Okay, that's another good assumption. Who's building APIs? All right, who's building APIs for external audiences? Okay. Who's only building APIs for internal audiences? Who's both? Oh, this is fantastic.

Matt Raible: Right.

Keith Casey: You're at the right conference.

Matt Raible: Good crowd.

Keith Casey: All right, most of you are probably building or at least considering Microservices. Who is building and deploying Microservices right now? All right. Who's considering it? Who doesn't know what in the world Microservices are?

Matt Raible: There's got to be one.

Keith Casey: Raise your arm. Oh, she's taking a picture, it's all right. I hope, I hope, I hope if you're here at Oktane, security is a top concern. If security is not a top concern for you, please don't raise a hand, just leave, okay. Oh, I'm not supposed to say that, right?

Matt Raible: Right, you're not.

Keith Casey: Sorry. All right, so hopefully our assumptions hold and most of us are starting from very similar places. We have some similar constraints and similar concerns, so let's talk a little bit about what a Microservice actually is. If we go to a technical definition, it's an architectural style of a bunch of pieces loosely joined. If we go back to the UNIX definition or the API definition, we have all these components that are sitting out there, and we compose them, we pull them together to accomplish something useful. That's really about it. Part of this gets back to the aspect of ... Has anyone read The Mythical Man-Month from Fred Brooks? It's a fantastic book. One of the things he talks about in there is that adding developers to a late project makes it later, because every developer you add to the team adds more communication paths. More communication paths mean you've just lowered the average understanding of the team because now, you have to spend time bringing new people up to speed. You have to spend more time synchronising, making sure everyone is understanding exactly what's going on. Part of the concept of Microservices is if we can encapsulate the complexity of this particular component over there, and reduce all the communication paths to one, a well-publicised, well-understood interface, we can simplify the process. Now, like Fred Brooks said, "There is no silver bullet," this does not solve every problem, but what this does is it simplifies a number of them.

The most important aspect of this is it allows teams to scale independently, so you can have aspects of my application, my organisations becoming successful. This particular component that everyone depends on, we need to scale this up in our organisation. Well now, we can scale that up in a lot safer, easier way. Does that make sense to people? Dead silence, okay, I'm going to assume yes. All right. Fundamentally though, this comes down to what's called the Bezos Memo. Jeff Bezos, he runs a company called what company?

Matt Raible: What?

Keith Casey: He runs a company. He wrote this memo in 2002 and he said, "Hey look, we're growing this little company. It's going to be really fundamental like what we're doing is really fundamentally important to us, but you know what? Most of the things that we're building are going to be important to other people too." As we're building these components, instead of doing things like sharing databases, you need to wrap your component in well-understood, well-documented interfaces. If you publish these interfaces internally, now other teams can reuse your stuff more easily. Eventually, it's not just a team down the hall or the team across campus, it's that team with that other company on the other side of the planet. Now, in 2018, this is obvious, right, we understand APIs, we understand why this makes sense. Remember, this was in 2002. That's a long time ago. You can attribute a lot of the growth of APIs and Microservices specifically to this memo all the way back then.

When you stop and think, this is kind of visionary. The conclusions a lot of our organisations are just now coming to were penned 16 years ago. It's cool. All right. Before, this is what our applications looked like. They're big monolithic applications. Odds are there's one layer of authentication on the sides there, and every single application had the same components to it. Regardless of how it needed to scale or how it needed to be managed, every deployment looked exactly the same. This is the monolith.

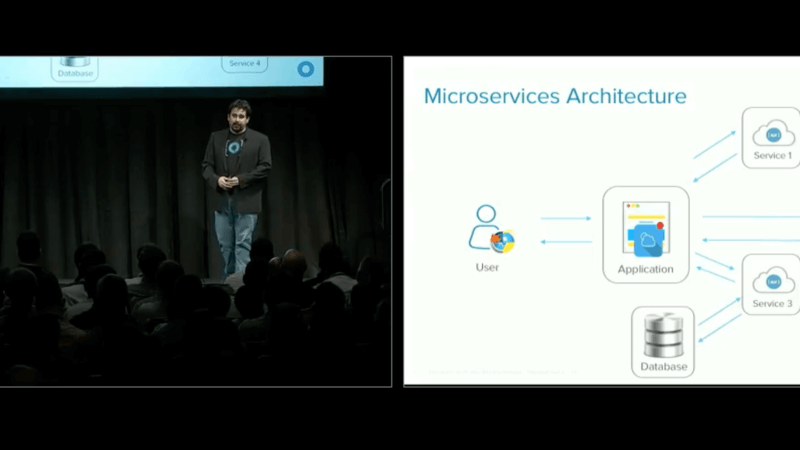

The concept behind Microservices is if we can break this into pieces, into well-defined, well-established pieces with very clear and straightforward interfaces between them, now we can scale them as we need. That breaks into something like this. We have all the same components. The components haven't changed. The way they're structured and how many of them there are has changed. That's where things become really powerful. Now, one subtle thing changed between this slide and this slide. Before, here, we had one set of boundaries. The application had a boundary. You authenticated or you didn't authenticate, that was it. Well, once we start breaking things up, we start understanding that these things have to communicate together. Now, we have lots of little boundaries and this gets to be a lot more complicated. How do we manage username and password? How do we manage credentials across all these different systems?

In fact, as we're deploying these systems and we think of continuous integration, continuous deployments, those things, how do we manage this thing at scale? If you're a handful of people deploying three Microservices to Amazon, you could probably do it on your own. When you're Netflix deploying hundreds, thousands, tens of thousands of services, how in the world does that work? This is what some of our customers are starting to do. We've been working with them, watching the things that are good that are happening, trying to double down on those, watching the bad things that happen, helping fix those, and trying to take the lessons learned and apply them at scale. The four, yeah, the four or one ... the base architecture and the three architectures we lay out today are going to be things we've seen in the field so far. We've got code to back up.

Matt Raible: We do?

Keith Casey: All of them because we're not just blowing smoke here. This is actual stuff that works. All right, so Architecture Overview. This is what our monolith looks like. Well, we've got one single application. A user authenticates with the application, and it has a very clear boundary. It's pretty simple. Realistically, it actually probably looks like this. There's a database behind the scenes and it has its own credentials. Realistically, it probably looks like this. It's a monolithic application, but it's actually pointing at APIs and interacting with databases. I put monolithic in quotes here because this is no longer a monolith, is it?

We're already starting to break out components, vital components. Everything from authentication to sending a text message. We start breaking those out of our main application and interacting with them over APIs. The idea that we're only building monolithic applications I think is bull. We're already starting to solve these problems. The whole set of distributed systems problems that we think we only have to deal with once we adopt Microservices, we're already having to solve them. Keep that in mind as we go on.

This is what a traditional Microservices Architecture looks like. There's one application. There's one endpoint as far as the user is concerned. Behind the scenes are a ton of components that all interact with ... well, some of them interact with some of the others to accomplish whatever the application does. This is what it looks like. It's many components loosely joined. The nice thing is that when we're doing this and Service 3 ends up being really important, so think of something like generating a PDF for H&R Block. In the US, tax day was just a couple of months ago. Generating PDFs became really important right then. They could scale up the service to generate those PDFs really easily with an architecture like this. It's pretty straightforward.

All right. Let's go to Version 1. Ready? All right. Version 1 is really simple. This is where most people start. They've got a simple application. There's simple authentication at that layer. Then that application goes ahead and authenticates with the services behind it. The user authenticates with the app. The app authenticates with Service 1. Service 1 authenticates with Service 2. This is where most people start. You want to go ahead and walk into that?

Matt Raible: Yes, so I have a demo here. Let me make sure the monitor is mirrored so you see everything. Close some stuff. Get that out. Basically, the first thing we're going to demonstrate is Basic Authentication, how you might have a backend service protected with Basic Authentication. You have a front end service like a gateway that actually talks to that backend service and passes a basic header.

The other more common thing that I've often seen is actually IP whitelisting. There's not even a concept of authentication; the backend Microservice just has a list of IPs that it lets in. There's no user information and it's behind the firewall. That's Version 0, which you shouldn't do at all. I'll show you Version 1 with the Basic Authentication example. Basically, what we have here is an app. You'll see, we're in Vegas, so it returns a list of card games: blackjack, pai gow poker, and Texas Hold'em. If I do spring run app.groovy, then it starts it up, and I specified a port of 8088. If I go to that port, you'll see it requires a username and password. This is using Spring technologies and the Java ecosystem, and you'll notice it actually generates the password when it starts up. The default username is user, with that generated password. This is actually the home page.

You'll notice there's no mapping for just slash. If I do slash card games, it actually returns the data, right. You have to log in to do that. Well now, we have another app called gateway. This one is under the Basic Authentication directory. We'll give you a URL to the GitHub repo after this. CD into gateway and run spring run gateway. This one will actually talk to that backend one. You can see here, it uses a RestTemplate, and it's got a basic authorisation, user and password. Well, that password is not going to work, right, we saw that, we need to paste in that long password. We've got to restart our gateway after doing that. Then it uses that RestTemplate to talk to those card games, and just gets back a string.

We'll make sure this works. This is running on a different port, 8080. All right, and it says okay. 404, that's not found. That's because I mapped it to the [route 1 00:12:46] so it's going to talk to that backend service and it gets that list, right, so it's actually doing that propagation. Well, the problem is that every time I change that backend service, it actually changes that password, right. Terrible architecture, but at the same time, you can go here and you can use this InMemoryUserDetailsManager, which is a feature of Spring Boot. You'll notice you're still hard coding the username and password, so it's an anti-pattern, not a good way to do it, and we'll continue on to the next one.
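As a rough illustration of what the gateway in this Version 1 demo has to send, here is a minimal sketch (not the Groovy code shown in the talk) of building an HTTP Basic `Authorization` header with only the JDK. The class name, method name, and the credential values are hypothetical; the hard-coded password is there precisely to show the anti-pattern being described.

```java
import java.nio.charset.StandardCharsets;
import java.util.Base64;

/** Sketch of the header a gateway sends to a backend protected by HTTP Basic. */
final class BasicAuthSketch {

    // Builds the header value: "Basic " + base64("username:password").
    static String basicAuthHeader(String username, String password) {
        String pair = username + ":" + password;
        return "Basic " + Base64.getEncoder()
                .encodeToString(pair.getBytes(StandardCharsets.UTF_8));
    }

    public static void main(String[] args) {
        // Hypothetical hard-coded credentials: if the backend regenerates its
        // password, this value has to be edited and the gateway restarted.
        String header = basicAuthHeader("user", "generated-password");
        System.out.println("Authorization: " + header);
    }
}
```

Note that the header is only an encoding of the shared secret, not a protection of it: anyone who can read the gateway's source or configuration can call the backend with full access.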

Keith Casey: It's not a way to do it, but this is where most people start because it's the easiest, it's the fastest to get deployed. It feels like the right way because after all, that's how we connected to our database, right. We've embedded username and password, and we call it done.

Well, that has some drawbacks. First of all, when we have these Service 1 and Service 2 behind the scenes, because the credentials are generated by the service itself, these services don't have any context on the user. Those services might need user information to actually accomplish something, so we lose that, so we have a big drawback there. Further, there's no scoping here. The application may not need everything that Service 1 can do, and Service 1 may not need everything that Service 2 can do. Since we can't lock that down with fine-grained access management, we run into this problem of, well, you have access to everything. It's just like giving your database password away: whether you're going to do a select or a delete, you can do everything you can through that interface, which ends up potentially being a problem. Remember, this is an access management conference. We're big fans of limiting permissions.

The next one is rotating credentials. Let's say for some reason one of those credentials is compromised. If Service 2 was generating that password on boot, and we're embedding that in Service 1, okay well, when we reboot Service 2, we update a single service. What happens if we have five or ten or twenty? We have 20 services all interacting with Service 2, and now, we need to reboot it. We may need to now update things in 20 places. We love doing that, don't we? Yeah. This gets really complex as we add more services.

In this one, Service 1 was just interacting with Service 2. Well, what happens when Service 1 needs Service 2 through 20? So now you've got twenty different sets of credentials to manage, and it needs to know which set of credentials goes with which server, and it ends up being a pain in the butt pretty quickly. So while this feels like a good starting point, while it feels like this will work, it completely fails at anything resembling scale. So we have to remember, this is a starting point, but it's not a good starting point.

Alright, so that's that. Let's go to version two! Version two, we have a number of customers doing this right now, and this is: add an authorisation server. So this is obviously the Okta approach to things. So when the user comes to the application, they do some sort of single sign-on, OpenID Connect, authentication, and authorisation through us, back to the application. And now, when the application wants to get access to service one, it goes through the authorisation server for it. And it says, “Hey I need to authenticate here, can I get a token?”. And it goes through whatever process, whatever procedure is involved there, and then each service has its own authorisation server. So, let's see that.

Matt Raible: Okay, so this client credentials example is actually based on a blog post that we wrote. So let me kill this. There's a great command if you don't like Java on a Mac called killall java. Kills all your Java services. And this blog post is written by a good friend, Brian Demers, who's here down in our developer hub, called Secure Server-to-Server Communications with Spring Boot and OAuth2. And basically he shows how to use the client credentials grant, so let me show you that code. I have it under this one.

We have a server, client credentials server. Oh, now I got the beach ball. It's getting ready for the party tonight, right? So this server application, all it has in it is basically, it's a standard Spring Boot application so that's normal. This allows the PreAuthorize annotations to work, and then it's got a message of the day controller. And it's mapped to slash mod, and it looks for an OAuth2 scope of custom_mod. And so then it returns a message of the day. So we can go ahead and start this one.

And then we have another one called the client. Let me open that one up, client application. So this one talks to that service, and how this one works is it actually reads from a properties file to get the OAuth information. So, it's going to use a grant type of client credentials, client ID, client secret, we created an app on Okta, we created custom scopes, all that. I won't bore you with that. And it shows you an access token URI, and the scope that's used. And so what it does, is it basically configures a client credentials resource, this is just a feature of Spring Boot, they make OAuth a lot easier, it configures that RestTemplate with that data, and then it calls with that RestTemplate to that back-end service and passes those client credentials on. So I should be able to start this one as well. I might have to do it from the command line. So we'll go back to the client credentials. And this one is just a command line runner so all it's going to do is spit out a command after I do this.

Oh, it didn't work. Well that's why you have this tutorial here and you're like, “how does he do it”. Right? You got to have one failed demo. He says you just use this so. Well maybe I'm in the wrong directory, nope. Well, it worked when I tried it last time. Let's try it if it has a little icon here, nope. One more try, third time's a charm? Nope. Oh you know what it is, I think it's trying to use Java 10. So let me make sure, well we'll move along. It works if you try the actual blog post.

Oh, I did it wrong. Awkward pause.

Matt Raible: Up, up up. Up, up. There you go.

Keith Casey: Right here?

Matt Raible: Alright, thank you.

Keith Casey: Oh I like it, even got some music.

Speaker 1: Is that the leave the stage music?

Keith Casey: So what Matt's example is doing behind the scenes is connecting to the authorisation server, using the client ID and client secret to generate a token from there. And the most important part there was the custom mod scope. So one of the things that comes with this example, is that you can go ahead and you can start putting in scoped tokens. So like I said earlier, application one may not need everything that service one provides. So with scopes you can go ahead and lock things down accordingly. You can say, “this doesn't need everything”. Maybe it's an API to a banking interface. I don't need to be able to send money in this use case. I just need to be able to download the transactions. So a read only scope gives me a little finer grain control and a lot more security as a result. So, it's a good option to be able to sort of step up and make sure things are a little bit better done here.
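To make the token request described here concrete, the following is a minimal sketch of an OAuth 2.0 client credentials request (RFC 6749, section 4.4) built with the JDK's `java.net.http` API rather than the Spring classes used in the demo. The token endpoint URL, client ID, and client secret are placeholders, and the scope name `custom_mod` is spelled with an underscore as an assumption about the demo's scope. The request is only constructed, not sent.

```java
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpRequest;
import java.nio.charset.StandardCharsets;
import java.util.Base64;

/** Sketch of a client credentials token request; nothing here is sent over the network. */
final class ClientCredentialsSketch {

    // Form body for the grant: the client asks for a token limited to one scope.
    static String formBody(String scope) {
        return "grant_type=client_credentials&scope="
                + URLEncoder.encode(scope, StandardCharsets.UTF_8);
    }

    // The client authenticates itself with HTTP Basic (client ID and secret).
    // Assumes the ID and secret contain no characters that need extra encoding.
    static HttpRequest tokenRequest(URI tokenEndpoint, String clientId,
                                    String clientSecret, String scope) {
        String basic = Base64.getEncoder().encodeToString(
                (clientId + ":" + clientSecret).getBytes(StandardCharsets.UTF_8));
        return HttpRequest.newBuilder(tokenEndpoint)
                .header("Authorization", "Basic " + basic)
                .header("Content-Type", "application/x-www-form-urlencoded")
                .POST(HttpRequest.BodyPublishers.ofString(formBody(scope)))
                .build();
    }

    public static void main(String[] args) {
        // All values below are hypothetical placeholders.
        HttpRequest request = tokenRequest(
                URI.create("https://example.invalid/oauth2/default/v1/token"),
                "hypothetical-client-id", "hypothetical-client-secret", "custom_mod");
        System.out.println(request.method() + " " + request.uri());
        System.out.println(formBody("custom_mod"));
    }
}
```

Requesting a single narrow scope is the point of the exercise: a token issued for a read-only scope cannot be used to move money even if it leaks.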

One thing we didn't touch on there was token expiration. So, earlier we mentioned that rotating credentials is hard. Especially once those credentials get propagated across different systems. Well, if our tokens naturally expire, just as part of the OAuth spec itself, then now we have to build in the ability to know that, hey look, our token is expired, let's go get a new one. So rotating those and making sure that if a token is compromised we can kill it and rotate it relatively easily, is actually really valuable. Because no matter how good we are, at some point your system will be compromised, so let's try to minimise the damage and minimise the window where that compromise can be abused.
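The "our token is expired, let's go get a new one" logic can be sketched as a small cache that refetches shortly before expiry. This is an illustrative sketch only: the class and field names are hypothetical, the fetcher stands in for a call to the token endpoint, and the 30-second safety margin is an arbitrary assumption.

```java
import java.time.Duration;
import java.time.Instant;
import java.util.function.Supplier;

/** Sketch of a token holder that fetches a fresh token once the old one is about to expire. */
final class ExpiringTokenCache {

    /** A token value plus the instant after which it must not be used. */
    static final class Token {
        final String value;
        final Instant expiresAt;

        Token(String value, Instant expiresAt) {
            this.value = value;
            this.expiresAt = expiresAt;
        }
    }

    private final Supplier<Token> fetcher;   // stands in for a token endpoint call
    private final Supplier<Instant> now;     // injectable clock, which keeps this testable
    private final Duration skew;             // refresh this long before actual expiry
    private Token current;

    ExpiringTokenCache(Supplier<Token> fetcher, Supplier<Instant> now, Duration skew) {
        this.fetcher = fetcher;
        this.now = now;
        this.skew = skew;
    }

    // Returns the cached token, fetching a new one if none exists or it is near expiry.
    synchronized String get() {
        if (current == null || !now.get().plus(skew).isBefore(current.expiresAt)) {
            current = fetcher.get();
        }
        return current.value;
    }

    public static void main(String[] args) {
        ExpiringTokenCache cache = new ExpiringTokenCache(
                () -> new Token("hypothetical-token", Instant.now().plusSeconds(3600)),
                Instant::now,
                Duration.ofSeconds(30));
        System.out.println(cache.get());
    }
}
```

Because every caller already has to handle refresh, revoking a compromised token costs nothing extra on the client side: the next call simply picks up a new one.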

So the drawbacks that we had earlier, we haven't addressed all of them, but we've addressed a few of them. So while this is a better approach, it's still not perfect. So our next layer: propagating the ID token. So in this scenario, I have a customer that's doing this right now, it looks very similar to the previous one. So they still have a custom authorisation server for each service. The thing that's different though, is when this application makes the token request, gets the access token, comes back, it also passes along the ID token from the user. Now the benefit of this is now you've got some context on the user and what's going on there. The drawback is that the ID token and the access token have no connection to each other whatsoever. So you can't use this for authorisation. You can use this for logging. You can say, “hey Matt requested this action that we took and successfully completed”. But we can't say, “this action was taken on Matt's behalf, as Matt's context”. So we end up with informational purposes, not authorisation purposes. Does that make sense to people? Alright.

So we have an example for this one right?

Matt Raible: Well, so, if you have to get an access token first to get an ID token, what's the point? And I didn't have time to write the example so no, no example here.

Keith Casey: No, on a serious note, while this is something we have seen some customers doing, the marginal value to this is not huge. So we add a little bit of additional information through the ID token for logging purposes, but realistically, it's not all that useful. It's useful for logging and that's it. So while this is available to us, it's not something we recommend. Because the bottom line is, we've also added the extra overhead of a second token. So now we have to pass two tokens along. Because we all love passing additional information along. So that's what Matt said: if we have to go through the effort of getting an access token in the first place, why bother also getting the ID token, which has no relationship with it? Isn't this fun? And like I said, this is only suitable for logging, it's not suitable for authorisation. So it solves this problem, and it creates two more. So while this is possible, it's not something that's super useful, so we don't recommend it.

Is anyone trying ... no I won't ask. If that customer that I haven't named is in the audience, I'm sorry. We did warn you not to do this. Alright, this is why I don't make friends, people.

Alright, architecture number four. This is actually kind of my favorite. So architecture number four is a little bit different. It's actually a lot simpler. So in this scenario we've got the user. They authenticate to the authorisation server with the application itself, and then the application passes that access token onto service one. There's scopes in there that are applied to service one. It takes whatever actions. If it needs service two, then it passes the access token to service two, and the scopes that are applicable there get applied. You do have an example of this one right?

Matt Raible: Yes.

Keith Casey: Okay cool.

Matt Raible: So this one just ... Let's look at the slide a bit first. One of the features that we use in this one is called Feign, it's from Netflix and Spring Cloud. And what this does is it actually makes a call from the first microservice to the second microservice and passes along the authorisation header with a bearer token, so you can kind of see what that looks like with this Java code here. This also has a blog post called Build and Secure Microservices with Spring Boot and OAuth2. And it walks you through that and I also recorded a screencast of this last week so if you want to just watch a video, I think it's like 27 minutes, you can certainly do that. Just to dive into the code.
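The propagation step itself is small. As a minimal sketch, and not the Feign-based code from the slide, the following uses the JDK's `java.net.http` API to copy an incoming bearer token onto an outgoing request to the next service. The class name, method names, and the target URL are hypothetical.

```java
import java.net.URI;
import java.net.http.HttpRequest;
import java.util.Optional;

/** Sketch of forwarding the caller's access token to a downstream service. */
final class BearerPropagationSketch {

    // Extracts the token from an incoming "Authorization: Bearer <token>" header value.
    static Optional<String> bearerToken(String authorizationHeader) {
        String prefix = "Bearer ";
        if (authorizationHeader == null
                || !authorizationHeader.regionMatches(true, 0, prefix, 0, prefix.length())) {
            return Optional.empty();
        }
        String token = authorizationHeader.substring(prefix.length()).trim();
        return token.isEmpty() ? Optional.empty() : Optional.of(token);
    }

    // Builds the downstream call with the same access token, unchanged.
    static HttpRequest downstreamRequest(URI target, String incomingAuthorization) {
        String token = bearerToken(incomingAuthorization)
                .orElseThrow(() -> new IllegalArgumentException("missing bearer token"));
        return HttpRequest.newBuilder(target)
                .header("Authorization", "Bearer " + token)
                .GET()
                .build();
    }

    public static void main(String[] args) {
        // Hypothetical target and token, for illustration only.
        HttpRequest request = downstreamRequest(
                URI.create("http://localhost:8080/beers"), "Bearer hypothetical-token");
        System.out.println(request.headers().firstValue("Authorization").orElse("(none)"));
    }
}
```

The downstream service still has to validate the token and check scopes itself; forwarding only carries the caller's identity along.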

Keith Casey: It looks like we recommend this one.

Matt Raible: This is the gold standard so far. So this one is under this access token propagation, and I wrote a beer service because I like beer, and this just returns a list of beers. So if we wanted to get fancy I could let you actually influence this list of beers but part of the application goes out to Giphy and gets a list of animated gifs that match that beer name, so, I've done it in conferences before and it doesn't always go well. So you can't influence my list right here. These are taken from beeradvocate.com and I have some bad ones in here, alright? So this is our beer catalog service in the back end.

We have a Eureka service, this is a service registry, right. This is how the microservices talk to each other. It doesn't really have anything to do with authorisation but it allows them to talk with each other: instead of using DNS, they just use names that they have in the application. So, the only thing here is just EnableEurekaServer, that's it, Spring Boot makes it real easy. Our edge service on the other hand will take those beers and actually give us a list of just good beers. So, I had to do some CORS stuff here, but here's the magic where it reads the beers from the back end, gets those contents, streams them, and then takes out the ones that aren't great. So if you're a fan of Budweiser, Coors, or PBR, I'm sorry, I'm from Colorado. Those are not good beers. The hipsters like PBR, but, you know, it's not that great. So anyway, and then I have an Angular client. So I'm going to start all these and hopefully it'll work. I have a single command so not too much funky stuff. Access token propagation. And just run.
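The "streams them, and then takes out the ones that aren't great" step can be sketched with plain Java streams. This is an illustration, not the edge service's actual code: the class name is hypothetical, beers are reduced to plain name strings, and the exclusion list simply reuses the three names mentioned above.

```java
import java.util.List;
import java.util.Set;
import java.util.stream.Collectors;

/** Sketch of an edge-service style filter over a list of beer names. */
final class GoodBeersSketch {

    // Names to drop; taken from the talk, matched exactly for simplicity.
    private static final Set<String> NOT_GREAT = Set.of("Budweiser", "Coors", "PBR");

    // Keeps every beer whose name is not on the exclusion list, preserving order.
    static List<String> goodBeers(List<String> allBeers) {
        return allBeers.stream()
                .filter(name -> !NOT_GREAT.contains(name))
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        // "Hypothetical IPA" is a made-up entry for illustration.
        System.out.println(goodBeers(List.of("Budweiser", "Hypothetical IPA", "PBR")));
    }
}
```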

So it starts up that Eureka service through the registry, starts up the beer catalog service and an edge service, and they all talk to each other. And then I have this Angular client that uses our sign-in widget to basically authenticate you and then pass on that bearer token to those services and it propagates down. So, the one thing in this beer catalog I also have, think it's in here, is a home controller. And you'll notice what this does is actually uses standard Java idioms with a Principal, which is a standard Java class. It's in the core Java SDK. And you're basically just grabbing that and it has all the user's information in it. So Spring handled going out to the userinfo endpoint as part of OIDC, grabbing that information and populating that object.

And Private Internet Access says I'm disconnected so I just lost internet. Maybe, we'll see. I don't think I really need it for this. So we'll start with localhost 8080. That's our beer catalog service and that one should actually prompt us to log in because it's protected. Still got to wait for things to start. How many people use Maven? Any of you Java developers use Maven? Okay, we got a few of you. And now how many people use npm? To do like npm install? So yeah, one of the jokes I say is that Maven downloads the internet; npm install downloads the internet and invites all its friends. So some of this stuff does take a while to start up, but ... Now we have our back end locked down, right? This is our beer catalog service. There's actually no basic authentication configured so there's no way for me to even log into that one. Then we have 8081, which is actually our beer service. So we propagate down to that beers API on the back end but whoa, we got to log in to do that. Right? So, that one's protected. And once I log in, then it'll actually come back to my application and give me those beers. I could also go and say, “hey, for the good beers, give me that list”. So this happens when the back end actually doesn't respond to the request, because I have it configured for Hystrix to do failover. So if it can't find the beers or it can't talk to the back end, it just returns an empty array.
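The fallback behaviour described here, returning an empty list when the back end cannot be reached, can be sketched in plain Java. This is not Hystrix: it leaves out timeouts and circuit breaking and only shows the shape of "try the call, fall back to empty". The class and method names are hypothetical.

```java
import java.util.Collections;
import java.util.List;
import java.util.function.Supplier;

/** Sketch of an "empty list on failure" fallback around a remote call. */
final class FallbackSketch {

    // Runs the call; if it throws, the caller gets an empty list instead of an error.
    static <T> List<T> withEmptyFallback(Supplier<List<T>> call) {
        try {
            return call.get();
        } catch (RuntimeException e) {
            return Collections.emptyList();
        }
    }

    public static void main(String[] args) {
        // Simulates a back end that is down.
        List<String> beers = FallbackSketch.<String>withEmptyFallback(() -> {
            throw new IllegalStateException("back end unavailable");
        });
        System.out.println("beers: " + beers);
    }
}
```

The trade-off is visible in the demo itself: an empty response looks the same whether the catalog is genuinely empty or the back end simply has not started yet.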

Matt Raible: Well after a while this will actually come up. You just got to click it a bunch of times. But I wanted to show you that home one, so, let's see if that home one works. So this is working, right, it actually goes and grabs my information, shows what's coming from Okta in that ID token. Let's try good beers again, because if good beers doesn't work then the Angular one doesn't work. Okay so now it's returning that. Good ole refresh button. And then we have Angular running on 4200 and this probably ... usually when you do any sign-in widget stuff you're going to want to use incognito because it does use local storage for some stuff so that can get stuck.

This comes up, I can log in, and we see our list of beer names, with appropriate images, so, I like this one because it says very nice. Oh I did it again, darn. I'm used to using Keynote, not PowerPoint, sorry.

Keith Casey: Do I have to start at the beginning again?

Matt Raible: No, right here.

Keith Casey: Okay. Alright, so that's a simple scenario where the user goes ahead and logs in with Okta on the front end using OIDC and an OAuth custom authorisation server. Goes ahead and gets that access token and propagates it across to each service. So, when we go back to our drawbacks that we had originally, we actually get most of them taken care of. So one, we have user context now. Now we're getting information from the actual userinfo endpoint if we need to, or we can embed it in the token, so we can pass that along. So now when service two needs to take action as Matt, we've got the right context to be able to do that. Because we know that's a signed token with all the information in it, we can do what we need with it. So, that's a huge benefit.

We get rid of the extra overhead of the second token. The access token, ID token combination, we don't have to pass that along anymore, so that's nice. We don't have the limitation of the contextual information being only for logging. We've got scoped tokens, so now we can say this service only needs these particular aspects; so we've addressed that drawback. We've still got token expiration. The one thing, and Matt hit on this a little bit with the Eureka server: this requires more coordination. So, service one needs to understand how to find service two. And you talked about that with the Eureka server, the directory service needs to be able to say where do I find these things, how do I interact with them? Because that access token is an access token, it's signed, it's all your signed user information, you don't want to arbitrarily send that around. You don't want to accidentally log it somewhere. You want to be careful with it, treat it like a password. So, you need to understand what that's being used for and make sure it’s being used in the right places.

So the next step, one of the ways you sort of address that, is with authorisation server discovery. This is a document, there's a draft version 8 or 9 right now from the IETF, for being able to say when an authorisation server has certain capabilities, this is a way to publish those in a standard way, so that services can read them. So, with Okta we implement this standard, or this specification, and so every authorisation server has a well-known endpoint that a service can actually interact with and look at and configure itself accordingly. So you've got the token endpoint, that's available, you've got the keys so when you need to validate that token you can do that really easily. Alternatively if you want to use the introspect endpoint it's actually down here at the bottom. You've got all the grant types, and the last bit of information here is the scopes. So all the scopes that are available from that authorisation server are actually discoverable up front. So this is a really easy way to be able to query your server and say, does it do what I think it does? How do we do this? So it's a really powerful way to be able to query the server, figure out what it does, figure out how to interact with it, and be able to take steps from there.
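As a rough sketch of how a service might use that discovery document, the code below builds a metadata URL and pulls one field out of a sample response using only the JDK. Several things here are assumptions: the metadata path is appended to the issuer URL as `/.well-known/oauth-authorization-server`, the issuer and sample JSON are made up, and the regex-based field lookup is a deliberately naive stand-in for a real JSON parser.

```java
import java.net.URI;
import java.util.Optional;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Sketch of locating and reading an authorisation server's metadata document. */
final class DiscoverySketch {

    // Assumed layout: the well-known path is appended to the issuer URL.
    static URI metadataUri(String issuer) {
        String base = issuer.endsWith("/")
                ? issuer.substring(0, issuer.length() - 1)
                : issuer;
        return URI.create(base + "/.well-known/oauth-authorization-server");
    }

    // Naive lookup of a string-valued field; a real service should use a JSON parser.
    static Optional<String> stringField(String json, String name) {
        Matcher m = Pattern
                .compile("\"" + Pattern.quote(name) + "\"\\s*:\\s*\"([^\"]*)\"")
                .matcher(json);
        return m.find() ? Optional.of(m.group(1)) : Optional.empty();
    }

    public static void main(String[] args) {
        // Hypothetical issuer and a hand-written sample of a metadata response.
        System.out.println(metadataUri("https://example.invalid/oauth2/default"));
        String sample = "{\"issuer\": \"https://example.invalid/oauth2/default\","
                + " \"token_endpoint\": \"https://example.invalid/oauth2/default/v1/token\"}";
        System.out.println(stringField(sample, "token_endpoint").orElse("(not found)"));
    }
}
```

A service that reads the token endpoint, key location, and supported scopes this way can check at startup whether the server offers what it expects, instead of failing on its first real request.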

A couple drawbacks to this approach, it is still technically a draft specification. So it's on version 8 or 9 right now, usually when it hits version 10 it becomes pretty solid. The spec hasn't changed a lot in the last year or two, so we think it's very stable, we think it's very easy to use; technically it's still a draft. So just be aware of that.

Self-configuring applications kind of scare people, right? I mean, when you fire up the service it's communicating with what? Well, you don't necessarily know in advance, so being able to have that directory service to actually publish and sort of map things out is really important, but that makes some people nervous. It's hard to validate, it's hard to audit, unless you've got really tight controls over that. So it's kind of scary at the end of the day. If you come from an older school mindset of, I need control over everything that's going on, I need to understand everything on day one no matter what, this is not going to resonate with that team. So if your security and compliance team has that sort of mindset this is going to be a struggle for them, just be aware of that.

Next up is client registration and provisioning. This is kind of a fun one because there's another specification, RFC 7591, dynamic client registration. So it basically says, when you have an OAuth provider, you should be able to register new OAuth clients with these particular parameters. And so basically the idea is that when you have a microservice, and you go to deploy that, imagine being able to drop an Okta API key into your CI/CD server. So that when you deploy that microservice, at the same time, you come to Okta, you create an OAuth client with a client ID and client secret, inject that into the service and then deploy it. That's what this spec does, that's what this spec allows. It's really incredibly powerful because now we don't need to go out and provision those clients in advance. We don't have to count on people copying and pasting those parameters correctly. We can make the request, get the information, embed it and roll. That's kind of powerful.
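To make the registration step concrete, here is a minimal Python sketch of the request a CI/CD job might build. The metadata field names (`client_name`, `grant_types`, `token_endpoint_auth_method`) and the `client_id` and `client_secret` response fields come from RFC 7591. The endpoint URL, the credential format, the environment variable names, and the helper names are all hypothetical, and a given provider may require extra fields.

```python
import json
from urllib.request import Request


def build_registration_request(registration_endpoint: str,
                               service_name: str,
                               authorization: str) -> Request:
    """Build (but do not send) an RFC 7591 style client registration request.

    `authorization` is the full Authorization header value for whatever
    credential the pipeline holds; its format depends on the provider.
    """
    metadata = {
        # Naming the client after the microservice makes it easier to map
        # OAuth clients back to services later.
        "client_name": service_name,
        # Assumption: a service-to-service client using client credentials.
        "grant_types": ["client_credentials"],
        "token_endpoint_auth_method": "client_secret_basic",
    }
    return Request(
        registration_endpoint,
        data=json.dumps(metadata).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Accept": "application/json",
            "Authorization": authorization,
        },
        method="POST",
    )


def credentials_as_env(registration_response: dict) -> dict:
    """Turn the registration response into variables to inject at deploy time."""
    return {
        "OAUTH_CLIENT_ID": registration_response["client_id"],
        "OAUTH_CLIENT_SECRET": registration_response["client_secret"],
    }
```

The pipeline would send the request with `urllib.request.urlopen`, parse the JSON response, and pass the result of `credentials_as_env` to the deployment, so nobody copies and pastes a client ID or secret by hand.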

So the thing that we've been playing with, and we've started seeing a couple customers do, is plug this into their CI/CD system, and then assign a client to a particular authorisation server with the scopes and everything along those lines. So then when they're deploying version 1 of their service, they assign it to that authorisation server. Later on when they deploy version 2 they assign it to the same authorisation server, and in fact, they can get more advanced, and they can create custom policies within that authorisation server for a test mode. And so now you can deploy version 1, you can deploy version 2, it's all going to the same authorisation server, and you can know what that authorisation server offers because of that discovery document. I think that's kind of badass. When you start putting these pieces together, it feels really complex at the beginning until you start thinking how these discovery documents link together, how the discovery service works together. And you see, oh, this is how microservices work at scale.

A couple drawbacks to this one: you have to track which OAuth clients map to which services. Luckily one of the things that we have in our API is the ability to name the client ID so you can name them according to the microservice; that mitigates some of it. And you have to take the step of assigning these to authorisation servers. It's not a big deal, just a couple little extra workflow steps that you need to be aware of.

So the thing that we're pushing towards, and this is a little bit of a future roadmap sort of thing, is Okta as a DevOps platform, to be able to plug this into your CI/CD system to be able to make it work. And this is basically all the steps required. You create an authorisation server, you create the required policies and rules; that's what your security team does in advance. So you've got all that figured out, all that locked down. And then in your deployment pipeline, you create this microservice, you create the client, assign it to an existing authorisation server, and now you've got orchestration, you've got everything working just out of the box, it's not too much effort.
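The division of labour in those steps can be sketched with a small in-memory model in Python. This is only an illustration of the workflow: it makes no Okta API calls, and the class, function, and scope names are hypothetical stand-ins for whatever the real pipeline would call.

```python
import secrets
from dataclasses import dataclass, field


@dataclass
class AuthorizationServer:
    """Stand-in for a server the security team set up in advance."""
    name: str
    scopes: frozenset
    assigned_clients: dict = field(default_factory=dict)  # client_id -> client name


def deploy(service: str, version: int, server: AuthorizationServer) -> dict:
    """Pipeline step: create a client for this deployment and assign it.

    The client is named after the service and version so that OAuth
    clients can be traced back to the deployment that created them.
    """
    client_name = f"{service}-v{version}"
    client_id = secrets.token_hex(8)
    client_secret = secrets.token_urlsafe(32)
    # The pipeline only assigns the client; it never edits the server's
    # scopes or policies, which stay under the security team's control.
    server.assigned_clients[client_id] = client_name
    return {
        "client_name": client_name,
        "client_id": client_id,
        "client_secret": client_secret,
        "authorization_server": server.name,
    }
```

Deploying version 1 and later version 2 of the same service against one `AuthorizationServer` instance leaves two named clients assigned to it, while the scopes defined up front are untouched.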

So the most powerful aspect of all this is now we've completely, 100% separated your deployment systems from your security policies. One of the big fears that's come around microservices is how do I know what's being deployed? How do I know what it interacts with? And by plugging it all together here, we've got that visibility now. In the Okta System Log, one of the things we log is every time a token is issued. We know that. We know which client ID was issued which token for which scopes. You've got all that baked in here, just the way things are configured right now. It doesn't take much to do it.

A couple drawbacks: this is an advanced scenario. There's not a ton of people doing this yet, so we're trying to document this, trying to make a... build out some baseline materials. As Matt talked about, there's blog posts that support pieces of this. We're trying to turn this into a solid playbook that everyone can easily replicate. So if you're using these components, we'll get you the first 80, 90% of the way there pretty quickly. The other aspect, and it's kind of a drawback with Okta's API right now, is we have a new API access management admin role that you can issue tokens for. We launched that two, three months ago, something like that, and that's not super fine-grained yet. So if you use that role, you can create authorisation servers, you can create clients, but you can't say, "and just manage these clients." So we need something a little bit more fine-grained there. But the nice thing is we're getting there, we're improving the system across the board.

So a couple open questions we have here. Notice, at no point do we talk about API Gateways. It's because it's irrelevant here. Should you use an API Gateway? Quite possibly. Do you have to? No. Do we have a particular one to recommend? Well, for microservices usually Kong is a favorite, Tyk is another common one. Fundamentally the patterns hold the same, regardless of which system you are using. Fundamentally we don't care, it's totally irrelevant in these scenarios. We also didn't talk about validating tokens. Like Matt showed in Spring Boot, that'll handle a lot of the... or Spring Security, I'm sorry, that'll handle a lot of the token validation for you. There's standard methods for doing that. That's RFC7519, we're not doing anything special or creative there, we're following the specification, that's a good thing, right.
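For readers who want to see what the remaining checks look like once a library has verified the token's signature, here is a minimal Python sketch. It assumes the claims have already been decoded from a signature-verified JWT and it does not verify signatures itself. The `iss`, `aud`, and `exp` claims are defined in RFC 7519; reading scopes from an `scp` list or a space-separated `scope` string is an assumption about the token format, and the function name and example values are hypothetical.

```python
import time


def claim_problems(claims: dict, issuer: str, audience: str,
                   required_scopes: list, now: float = None) -> list:
    """Return reasons to reject a token; an empty list means it passes.

    Assumption: `claims` came from a JWT whose signature was already
    verified against the authorisation server's published keys.
    """
    now = time.time() if now is None else now
    problems = []
    if claims.get("iss") != issuer:
        problems.append("unexpected issuer")
    aud = claims.get("aud")
    audiences = aud if isinstance(aud, list) else [aud]
    if audience not in audiences:
        problems.append("unexpected audience")
    exp = claims.get("exp")
    # RFC 7519: the current time must be before the expiration time.
    if not isinstance(exp, (int, float)) or exp <= now:
        problems.append("token expired or missing exp")
    # Assumption: scopes arrive as an "scp" list or a "scope" string.
    granted = claims.get("scp")
    if granted is None:
        granted = claims.get("scope", "").split()
    for scope in required_scopes:
        if scope not in granted:
            problems.append(f"missing scope: {scope}")
    return problems
```

In practice a framework such as Spring Security performs these checks for you, as mentioned above; the sketch only shows which claims a scoped microservice ends up relying on.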

And then we didn't talk about scope naming. Scope naming is one of the more complex things. I'm actually also working on a blog post to sort of throw out some strategies there, because it's a big ugly complex thing that no one has really figured out, but a lot of people are doing. And that always leads to great options. So from that, Matt you want to go ahead and share some helpful [crosstalk 00:40:36]-

Matt Raible: Yes, so we have a number of helpful resources; all the demos I showed today are on the GitHub repo. If you're interested in learning more just about OAuth and OIDC there's a YouTube video from Nate Barbettini which is great in that it breaks it down to plain English. And the reason I know it's great is that it has so many comments, and people actually watch it for an hour and then leave a comment that says they loved it. We also have the OAuth.com playground from Aaron Parecki on our team, and Nate also wrote OAuth debugger and OIDC debugger if you actually want to debug those flows. And we also have one of the prize t-shirts for the question that gets asked, if you're an extra-large. It's great to wear through the airport.

Keith Casey: So for people in the back the shirt says...

Matt Raible: I find your lack of security disturbing.

Keith Casey: The TSA loves these. Do we have a question not related to the t-shirt? Yes. Oh hold on just a second.

Audience 1: Hi, so we spoke about passing the tokens between the APIs, but what if there are different clients, like different Angular apps or different React apps, which are calling the same API endpoint? How do we get single sign-on, or how do we pass API tokens, access tokens, across the different apps?

Matt Raible: So you would likely have those apps using the same client on Okta, and then they would get a bearer token that they could pass on to the API. The API would also be configured with that client ID on Okta, and so it could validate those tokens coming in.

Audience 1: Okay, thank you.

Audience 2: So what you just said a moment ago about sharing the same client, but each client has a different authentication flow, so if we have something that maybe we want to use refresh tokens and some things we don't want to use refresh tokens, how do we handle that?

Keith Casey: So when you configure the authorisation servers, you can say which clients can actually connect to it. So you can go ahead, I usually don't recommend, you can technically say all clients, I'm not a fan of that, but you can say this client, this client, this client, and in fact within the authorisation server you can set up policies specific to clients, to be able to have really fine grained control over that.

Matt Raible: So for the rest of the questions we're running out of time. We'll go ahead and talk outside. We'll be available, we'll also be down in the developer hub. I also want to make you aware of the microservices example. There's actually a codified version of it with JHipster that'll generate all that code for you, and it supports Okta. All you got to do is basically set like five environment variables and you're talking to Okta and you can generate all that. And so this is a book I wrote, and I like to end it by showing that I'm kind of a hipster too.

Keith Casey: Wow. And on that we're going to close. Please don't. There's a couple other sessions you might want to check out. Today, right after this, there's a session from Aaron Parecki the author of OAuth 2 Simplified.com talking about OIDC and OAuth in your applications, definitely check that out. Tomorrow we've got two sessions. One of my favourites is How to Make Your Okta Org Ridiculously Good Looking. That's on customising your login page and your flows, and then Take the Okta Management APIs Challenge, that is in the developer hub, right?

Matt Raible: Yep.

Keith Casey: Yeah, so definitely check those out, and don't forget to take your survey. Thank you.

Interested in implementing microservices in your workflow? Watch as Okta developers explain the microservice architecture using OAuth 2.0, fine-grained scope management, and potential drawbacks.