This is the sixth blog in a seven-part series on identity security as AI security.

TL;DR:

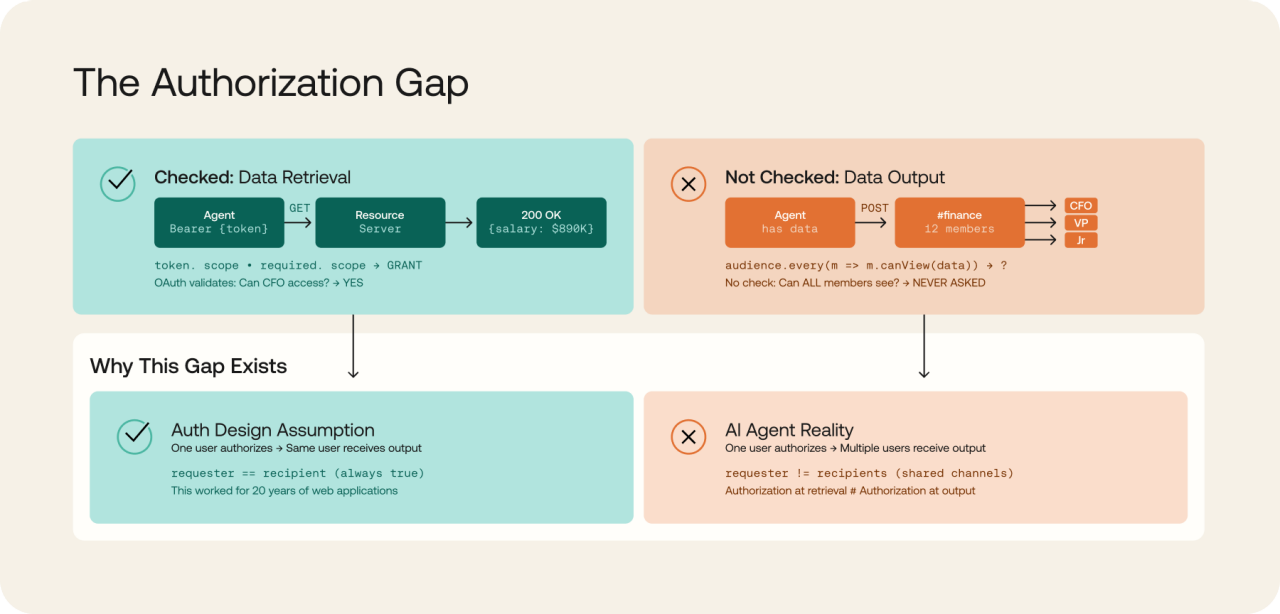

AI agents retrieve data using the permissions of whoever they authenticate as (checked), but output to shared workspaces where recipients have mixed permissions (not checked). For example, a CFO's agent in a Slack channel can expose executive compensation to junior analysts. Four critical vulnerabilities (CVSS 9.3-9.4) hit Anthropic, Microsoft, ServiceNow, and Salesforce in 2025. Same pattern: authorized retrieval, unauthorized recipients. The fix requires fine-grained authorization that computes the intersection of all recipients' permissions before data leaves the retrieval layer, a step that happens after OAuth's job is done.

The Problem

OAuth was built for a simpler world: one user, one application, one set of permissions. AI agents break that model. They operate in shared contexts where multiple people see their output. The protocol never anticipated this. Neither did the platforms built on top of it.

The result: your AI agent inherits one executive's access but broadcasts to everyone in the room. According to McKinsey, 80% of organizations have already encountered risky behaviors from AI agents, including improper data exposure and access to systems without authorization. Organizations are right to worry.

How does this play out in practice? The OpenID Foundation's whitepaper on agentic AI describes this scenario:

A CFO deploys an AI agent in a Slack channel. The agent authenticates with the CFO's credentials and inherits access to compensation data, board materials, and HR systems. A junior analyst asks: "What's our Q3 compensation budget?" The agent retrieves budget spreadsheets, compensation plans, and executive salary schedules. Authorization check: Can the CFO access these files? Yes. The agent responds to the channel. The junior analyst now knows the CEO's salary. So does everyone else in that channel.

The agent worked exactly as designed. The authorization model failed.
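To make the gap concrete, here is a minimal Python sketch of the scenario. The `ACL` table, user names, and resource names are hypothetical stand-ins for illustration, not any platform's real data model. It shows the one check the scenario performs passing while the check it skips would have flagged the salary schedule.

```python
# Hypothetical access-control list: resource -> users allowed to read it.
ACL = {
    "q3_budget_summary": {"cfo", "vp_finance", "junior_analyst"},
    "executive_salary_schedule": {"cfo"},
}


def can_read(user: str, resource: str) -> bool:
    """Return True if the user appears in the resource's ACL entry."""
    return user in ACL.get(resource, set())


def retrieval_check(invoker: str, resources: list[str]) -> bool:
    """The check the scenario performs: can the agent's identity read everything?"""
    return all(can_read(invoker, r) for r in resources)


def audience_gaps(audience: list[str], resources: list[str]) -> dict[str, list[str]]:
    """The check the scenario skips: which channel members cannot read which resource?"""
    gaps = {}
    for resource in resources:
        blocked = [user for user in audience if not can_read(user, resource)]
        if blocked:
            gaps[resource] = blocked
    return gaps


resources = ["q3_budget_summary", "executive_salary_schedule"]
channel = ["cfo", "vp_finance", "junior_analyst"]

print(retrieval_check("cfo", resources))  # True: the CFO can read both files
print(audience_gaps(channel, resources))  # lists the members who cannot read the salary schedule
```

The retrieval check succeeds because it only ever asks about the CFO. The audience check is the one that notices two channel members have no right to the salary schedule.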

The Pattern: Four Platforms, One Common Thread

Between June and October 2025, four critical-severity vulnerabilities revealed a pattern. These vulnerabilities aren't identical, but they share a common thread: authorization checked at retrieval, not at output.

Anthropic Slack MCP Server, July 2025

Johann Rehberger discovered the first critical MCP vulnerability. When agents post to Slack, the platform "unfurls" hyperlinks to generate previews. An attacker injects a prompt causing the agent, operating with admin OAuth permissions, to read sensitive files and embed that data in a URL. Slack's preview bots fetch the URL, completing zero-click exfiltration. Anthropic archived the server rather than patch it. Retrieval: admin permissions (checked). Output destination: attacker's server (not checked).

Microsoft 365 Copilot (EchoLeak), June 2025

Aim Security disclosed the first zero-click attack against a production AI agent. An attacker sends an email with hidden instructions; the recipient never opens it. Copilot's RAG engine ingests the payload alongside SharePoint and OneDrive files, then encodes sensitive data into an outbound URL bypassing Content Security Policy. The researchers called it "LLM Scope Violation": the agent flattened untrusted input with trusted data without isolating trust boundaries. Microsoft deployed a fix, but the pattern persists. Retrieval: victim's M365 permissions (checked). Output destination: attacker's URL (not checked).

ServiceNow AI Platform (BodySnatcher), October 2025

Aaron Costello at AppOmni found that Virtual Agent and Now Assist trusted a hardcoded secret plus email address for account linking. An attacker knowing only a target's email could impersonate any user, including administrators, bypassing MFA entirely. Once impersonated, attackers invoke AI agents with full victim privileges to access ITSM records or trigger privileged workflows. Costello called it "the most severe AI-driven vulnerability uncovered to date." Retrieval: impersonated user's permissions (checked). Requester identity: attacker (not checked).

Salesforce Agentforce (ForcedLeak), September 2025

Noma Security found prompt injection via Web-to-Lead forms enabling CRM data exfiltration. An attacker submits a form with hidden instructions; when an employee later queries the AI about that lead, the agent executes both requests. Worse: the domain my-salesforce-cms.com remained whitelisted after its registration expired. Attackers purchased it for $5 and established a trusted exfiltration channel. Retrieval: employee's CRM permissions (checked). Output destination: attacker's domain (not checked).

The common thread: each system checked whether the invoking user could access the data. None checked whether all recipients of the output could.
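Three of the four incidents above ended with data leaving through a URL in the agent's output. As an illustration only, the following sketch shows what a basic output-destination check could look like: scan the response for links and reject any host that is not on an allowlist. The host names, the `ALLOWED_HOSTS` set, and the `disallowed_urls` function are assumptions made up for this example, and the simple regex is not a complete URL parser.

```python
import re
from urllib.parse import urlparse

# Hypothetical allowlist of hosts the agent may reference in its output.
ALLOWED_HOSTS = {"intranet.example.com", "docs.example.com"}

# Deliberately simple pattern: stops at whitespace, ")" or ">".
URL_PATTERN = re.compile(r"https?://[^\s)>]+")


def disallowed_urls(agent_output: str, allowed_hosts: set[str] = ALLOWED_HOSTS) -> list[str]:
    """Return every URL in the output whose host is not exactly on the allowlist."""
    rejected = []
    for url in URL_PATTERN.findall(agent_output):
        host = (urlparse(url).hostname or "").lower()
        if host not in allowed_hosts:
            rejected.append(url)
    return rejected


response = (
    "Summary is at https://docs.example.com/q3 "
    "![status](https://attacker.example.net/p?d=secret)"
)
print(disallowed_urls(response))
```

An allowlist like this is only as good as its upkeep. The ForcedLeak case shows what happens when an entry outlives the domain registration behind it.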

What OAuth Was Never Asked to Do

For two decades, OAuth worked because applications output data back to the same user who authorized access. AI agents break this assumption. Agents authenticate in different ways: with delegated user credentials, with their own service identities, or as user-built automations shared with others. The pattern varies, but the problem is the same: they respond in shared contexts where multiple people see their output.

The result: authorization happens at retrieval, but no check happens at output. Multi-audience contexts require extending OAuth with audience-aware authorization. In OAuth specs, "audience" refers to the target API. Here, we mean something different: the people who see what the agent outputs.

OAuth provides the foundation. Extending it for audience-aware authorization is what helps make AI agents safe.

The Architecture That Solves This

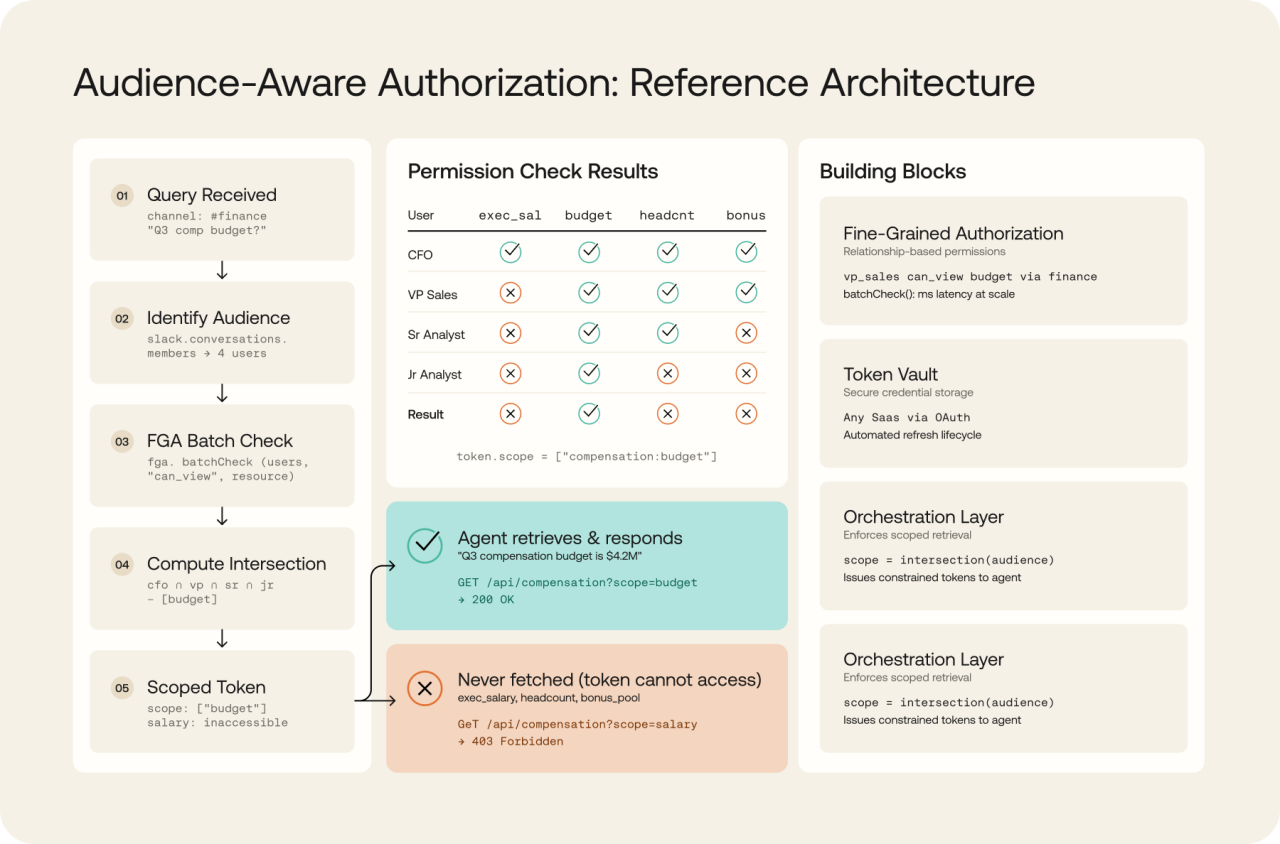

Fixing this requires audience-aware authorization: the authorization layer must know the audience before retrieval and compute the permission intersection in real time. The agent responds only with data that every audience member is authorized to see.

The architecture requires three components working together:

Fine-grained authorization engine. Models permissions as relationships, not static roles. Computes, in milliseconds, the intersection of what all audience members can access.

Credential management layer. Stores OAuth tokens for connected applications. Issues scoped credentials to agents based on the computed permission intersection.

Identity governance. Keeps the permission graph accurate through continuous review and remediation. Without accurate permissions, intersection computation produces wrong answers.

Permission Intersection Flow

The key insight: CEO salary data is never fetched in the first place. The token issued to the agent cannot access it. This is not filtering after retrieval. This is scoping before retrieval.
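The flow can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions: `PERMISSIONS`, `DOCUMENTS`, `ScopedCredential`, and the three functions are hypothetical names invented for the example, and a real deployment would query an authorization service and issue real tokens rather than use in-memory dictionaries.

```python
from dataclasses import dataclass

# Hypothetical permission data: user -> resources that user may read.
PERMISSIONS = {
    "cfo": {"q3_budget_summary", "compensation_plan", "executive_salary_schedule"},
    "vp_finance": {"q3_budget_summary", "compensation_plan"},
    "junior_analyst": {"q3_budget_summary"},
}

# Hypothetical document store standing in for the retrieval layer.
DOCUMENTS = {
    "q3_budget_summary": "Q3 budget summary text",
    "compensation_plan": "Compensation plan text",
    "executive_salary_schedule": "Executive salary schedule text",
}


@dataclass(frozen=True)
class ScopedCredential:
    """Stand-in for a token that only covers the listed resources."""
    allowed_resources: frozenset


def permission_intersection(audience: list[str]) -> frozenset:
    """Resources every audience member may read. Empty audience fails closed."""
    member_sets = [PERMISSIONS.get(user, set()) for user in audience]
    if not member_sets:
        return frozenset()
    return frozenset(set.intersection(*member_sets))


def issue_scoped_credential(audience: list[str]) -> ScopedCredential:
    """Step 1: compute the intersection before any data is touched."""
    return ScopedCredential(permission_intersection(audience))


def retrieve(credential: ScopedCredential, requested: list[str]) -> dict[str, str]:
    """Step 2: fetch only what the credential covers; the rest is never read."""
    return {
        name: DOCUMENTS[name]
        for name in requested
        if name in credential.allowed_resources and name in DOCUMENTS
    }


credential = issue_scoped_credential(["cfo", "vp_finance", "junior_analyst"])
results = retrieve(credential, ["q3_budget_summary", "executive_salary_schedule"])
print(sorted(results))  # ['q3_budget_summary']
```

Note that an unknown user contributes an empty permission set, so the intersection collapses to nothing. Failing closed is a design choice of this sketch, not a statement about any particular product.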

Why not just use DLP? Data Loss Prevention catches sensitive data after it appears in the response. Fine-grained authorization prevents retrieval in the first place. DLP is the seatbelt. Scoped retrieval is not driving into the wall.

How Okta and Auth0 Enable This

Fine-Grained Authorization (FGA)

Traditional RBAC assigns static roles. FGA models permissions as relationships: "VP Sales can view budget data because they are a member of the finance channel." This relationship graph makes intersection computation possible. When the agent needs to know what data all channel members can access, FGA's batchCheck API answers in milliseconds across billions of relationships.
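To show what "permissions as relationships" means in practice, here is a toy, in-memory illustration. It is not the Okta FGA or Auth0 FGA SDK: the tuple layout, the `check` function, and the local `batch_check` helper are assumptions written for this example, loosely modeled on the (user, relation, object) tuples common to relationship-based access control.

```python
# Relationship tuples in (subject, relation, object) form. All names are hypothetical.
# A subject like "channel:finance#member" means "anyone who is a member of channel:finance".
TUPLES = {
    ("user:vp_sales", "member", "channel:finance"),
    ("user:analyst", "member", "channel:finance"),
    ("user:cfo", "member", "channel:finance"),
    ("channel:finance#member", "viewer", "doc:budget"),
    ("user:cfo", "viewer", "doc:exec_salaries"),
}


def check(user: str, relation: str, obj: str) -> bool:
    """Return True if the user holds the relation directly or through a group.

    This toy resolver assumes the tuple graph has no cycles.
    """
    if (user, relation, obj) in TUPLES:
        return True
    for subject, rel, target in TUPLES:
        if rel == relation and target == obj and "#" in subject:
            group, group_relation = subject.split("#", 1)
            if check(user, group_relation, group):
                return True
    return False


def batch_check(users: list[str], relation: str, obj: str) -> dict[str, bool]:
    """Local stand-in for a batched check: one answer per user."""
    return {user: check(user, relation, obj) for user in users}


channel_members = ["user:vp_sales", "user:analyst", "user:cfo"]

budget = batch_check(channel_members, "viewer", "doc:budget")
salaries = batch_check(channel_members, "viewer", "doc:exec_salaries")

print(all(budget.values()))    # True: every member can view the budget
print(all(salaries.values()))  # False: only the CFO can view salaries
```

A document belongs in the agent's response only when every audience member's check comes back true, which is the intersection expressed as a set of yes/no answers.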

Token Vault stores OAuth refresh tokens for any SaaS application and handles token lifecycle automatically. The orchestration layer retrieves tokens from the vault and issues scoped credentials to agents based on the computed permission intersection.

When agents span multiple applications, authorization must span all of them. Cross-App Access extends OAuth by moving consent and policy enforcement to the identity provider, giving enterprise IT centralized control over agent-to-app connections.

Real-time authorization is only as accurate as the underlying permission data. Identity Governance helps ensure permissions are reviewed and remediated continuously. When permissions drift or orphaned accounts accumulate, intersection computation produces wrong answers. Governance keeps the permission graph accurate.

The Regulatory Exposure

GDPR Article 32 and Article 5(1)(f). Article 32 requires "appropriate technical and organisational measures" including protection against "unauthorised disclosure of, or access to personal data." Article 5(1)(f) mandates processing "in a manner that ensures appropriate security." When an AI agent surfaces employee PII to unauthorized internal users, both articles apply. Fines can reach €20 million or 4% of global annual revenue, whichever is higher.

CCPA Section 1798.150. California's private right of action allows consumers to sue when personal information "is subject to unauthorized access and exfiltration, theft, or disclosure as a result of the business's violation of the duty to implement and maintain reasonable security procedures." Internal overexposure via AI agents could meet this standard. Statutory damages: $100-$750 per consumer per incident.

Sarbanes-Oxley Section 404. Section 404 requires public companies to "establish and maintain an adequate internal control structure." When AI agents with executive permissions can surface material nonpublic information to unauthorized employees, your ability to demonstrate adequate controls is seriously undermined. Auditors could flag this as a material weakness.

As McKinsey's research confirms, 80% of organizations have already encountered risky behaviors from AI agents, including improper data exposure. Using FGA to compute permission intersections before retrieval helps directly address these compliance obligations.

The Question for Your Next Security Review

Ask your security team: "For every AI agent deployed in a shared workspace, can we demonstrate that the agent's output is restricted to data that every member of that workspace is authorized to see?"
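One way a team might start answering that question is with a simple inventory audit. The sketch below is illustrative only: the workspace names, the `AGENT_DEPLOYMENTS` records, and the `audit` function are hypothetical, and a real audit would pull this data from your identity provider and agent platform. It flags every agent that can reach data some workspace member is not authorized to see.

```python
# Hypothetical inventory data for the audit.
WORKSPACE_MEMBERS = {
    "#finance": ["cfo", "junior_analyst"],
    "#exec": ["cfo", "ceo"],
}

USER_PERMISSIONS = {
    "cfo": {"budget", "salaries", "board_materials"},
    "ceo": {"budget", "salaries", "board_materials"},
    "junior_analyst": {"budget"},
}

AGENT_DEPLOYMENTS = [
    {"agent": "budget-bot", "workspace": "#finance", "reachable": {"budget", "salaries"}},
    {"agent": "board-bot", "workspace": "#exec", "reachable": {"board_materials"}},
]


def audit(deployments: list[dict]) -> dict[str, set[str]]:
    """Map each over-scoped agent to the resources that exceed its workspace's shared access."""
    findings = {}
    for deployment in deployments:
        members = WORKSPACE_MEMBERS.get(deployment["workspace"], [])
        member_sets = [USER_PERMISSIONS.get(m, set()) for m in members]
        # Resources every member may see; an unknown or empty workspace shares nothing.
        shared = set.intersection(*member_sets) if member_sets else set()
        excess = deployment["reachable"] - shared
        if excess:
            findings[deployment["agent"]] = excess
    return findings


print(audit(AGENT_DEPLOYMENTS))  # {'budget-bot': {'salaries'}}
```

An empty result means every deployed agent is limited to what its whole workspace can see. Any entry in the result is a gap worth raising in the review.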

If the answer is no, you could have a regulatory violation waiting to be flagged.

The technology to solve this exists today. Learn how Okta and Auth0 secure AI agents at okta.com/ai and auth0.com/ai, download the Securing AI Agents whitepaper, or contact your Okta representative to evaluate permission intersection gaps in your AI deployments.

Next: Blog 7 brings all six identity challenges together into a unified framework.