As organizations have moved to explore AI's opportunities, the specter of shadow AI (employees' use of AI tools without the organization's approval or oversight) has risen as well.

In a sense, this should come as little surprise. When cloud adoption surged, so did the unauthorized use of cloud applications, or “shadow IT.” Messaging apps? Personal devices? They all continue to contribute to the challenge of addressing shadow IT on enterprise networks. Shadow AI is simply the latest iteration of an old problem.

A push-pull situation

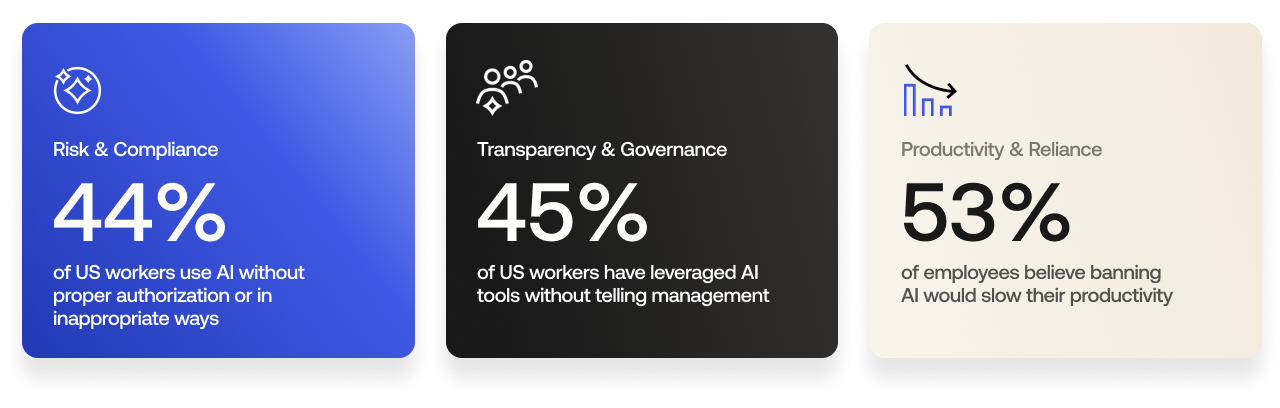

As AI tools flood the market and new startups emerge daily, employee adoption is inevitable, though adherence to approved tools and processes is not. A KPMG survey reported that 44% of US workers use AI without proper authorization or in inappropriate ways. Another study, from Gusto, found that 45% of US workers have used AI on the job without telling their employer or manager, and 53% felt their productivity would slow down if AI were banned.

This reality leaves organizations struggling with a push-pull situation — the push to embrace AI's productivity gains versus the pull of security and risk management. Unmanaged AI can introduce everything from biased outputs to data leaks to regulatory compliance issues.

Still, Bryan McGowan, Global Trusted AI Leader at KPMG, explains that employees often turn to unauthorized tools because they offer greater speed and ease of use compared to sanctioned alternatives.

"The evolution of governance frameworks, risk controls, and employee education has lagged behind AI adoption," he notes. "Moreover, organizations struggle to find the right balance between internally designed safeguards and those offered by technology platforms, such as hyperscalers."

This lack of policy and governance exacerbates the situation. Okta's AI at Work 2025 report revealed that only 36% of organizations had a centralized governance model for AI. The survey data also showed that when respondents were asked to name the key elements of successful AI adoption and integration, two of the top three answers were "processes and guardrails for assurance of quality data" and "governance and security."

Establishing effective AI governance

"Effective organizations absolutely need the visibility, policy setting, and governance that comes from a centralized, top-down model," notes Ben King, Vice President, Security Trust and Culture, at Okta. "A central team or committee can define data use, consistent policy and processes, and guardrails; meet international and regulatory expectations; include security and privacy by design; and make the most efficient use of resources with full visibility of need and use cases across an organization."

Effective identity and access management (IAM) must underpin all of this, providing the level of control and visibility organizations need. Overpermissioned AI agents, for example, can expose enterprises to security risks and data leaks. But alongside a top-down focus on IAM, organizations also need a bottom-up culture of innovation, experimentation, and feedback.

"Employees must feel empowered to test, learn, fail, iterate, and try again without feeling restricted," King says. "The result being a hybrid model where centralized visibility and control exists, but is designed to allow and encourage innovative use of AI."

The real question, he continues, is how organizations should define this model and where to draw the line. The answer depends on the organization's maturity across the technology, legal, privacy, security, and risk dimensions in the markets where it operates.

"A mature organization may allow more experimentation with less defined control, if the existing employee culture and education means the testing will be conducted in a refined and secure way," King says. "By contrast, a less mature org will require more robust guardrails, explicit expectations on what's acceptable and what's not, with review and approval boards."

Pull AI out of the shadows by empowering employees

McGowan suggests that organizations set up an AI labs function designed to promote the standardized and collaborative development of AI solutions. Its primary role, he explains, is to create and share design patterns, playbooks, and a uniform AI architecture across the firm.

"This provides a secure, low-risk environment where employees can experiment with AI tools and technologies — a sandbox that allows for hands-on learning and testing of real-world challenges before solutions are deployed more broadly," he adds. "It also works with various teams, clients, and partners to co-create and test AI solutions, ensuring they are practical and effective, while also helping align new market innovations with the company's established AI architecture to maintain consistency and security."

"The goal is to foster an AI-centric culture, so access is often provided to a wide range of employees," McGowan says. "For instance, at KPMG, the ‘AI & Data Labs’ and its tools are available to all US employees, and specific labs are accessible to all advisory professionals upon request. This encourages widespread AI literacy and innovation."

Building a culture of confidence in AI requires a human-centered approach focused on engagement, education, and empowerment, McGowan says. To foster that environment, he adds, organizations should follow these key strategies:

Build trust and reduce fear: Address common fears about AI by debunking myths and being transparent about its role. Create clear "from-to" definitions for how roles will evolve, emphasizing AI as a tool to augment human potential, not replace it.

Empower and upskill employees: Provide access to secure AI tools and "sandbox" environments where employees can experiment without risk. Offer diverse training options, such as hands-on hackathons, formal training sessions, and on-demand learning paths to build confidence and proficiency.

Engage and inspire: Develop a clear strategic narrative from leadership about the company's AI vision. Recognize and reward employees for experimenting with and successfully using new AI-powered ways of working.

Make AI accessible and easy to use: Integrate AI tools seamlessly into daily workflows so they become second nature. Create a centralized hub where employees can easily find AI tools, resources, and best practices.

“By implementing these strategies,” McGowan says, “organizations can move their workforce towards a place of curiosity, inspiration, and commitment to leveraging AI for innovation and growth.”

For recommendations on developing an effective AI governance strategy, read "Why your AI strategy is exposing you to risk — and what to do about it."