As AI agents increasingly interact with data and perform complex tasks with minimal human input, they introduce new security challenges and amplify preexisting ones. The enterprise has long struggled with issues of control and visibility into disparate systems. Now, with AI agents operating autonomously across the enterprise, those same challenges are surfacing at a new scale and speed, threatening the productivity gains that secure agent-to-app communication and collaboration could offer.

Legacy consent models expose weaknesses

Traditionally, enterprise applications allowed users to grant third-party apps access to their data using OAuth, where users see a permission screen asking them to approve specific data access, like reading emails or calendars, before the app can proceed.

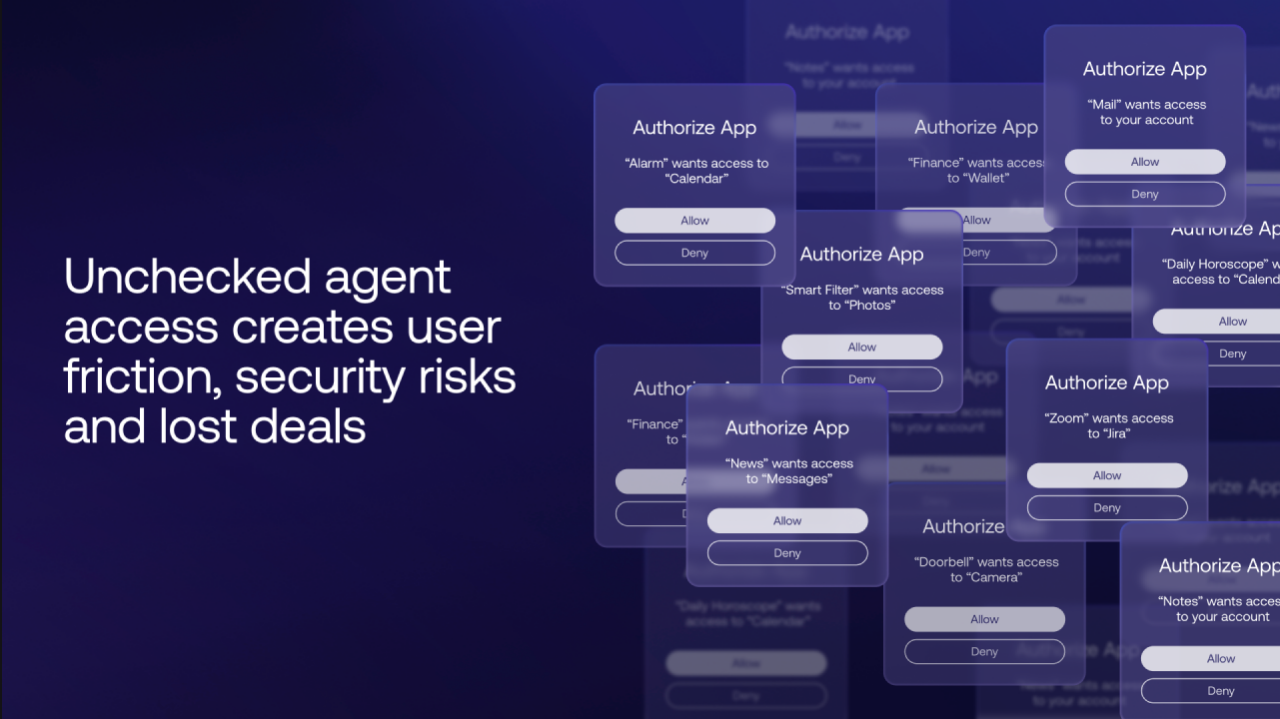
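To make the consent flow above concrete, here is a minimal sketch of the authorization request that leads to such a permission screen, following the standard OAuth 2.0 authorization code parameters (RFC 6749). The endpoint URL, client ID, redirect URI, and scope names are hypothetical placeholders, not values from any real product.

```python
import secrets
from urllib.parse import urlencode, urlparse, parse_qs


def build_authorization_url(authorize_endpoint, client_id, redirect_uri, scopes):
    """Build an OAuth 2.0 authorization code request URL (RFC 6749, section 4.1.1)."""
    # Random value the app checks when the user is redirected back (CSRF protection).
    state = secrets.token_urlsafe(16)
    params = {
        "response_type": "code",
        "client_id": client_id,
        "redirect_uri": redirect_uri,
        # Scopes are what the user is asked to approve on the consent screen.
        "scope": " ".join(scopes),
        "state": state,
    }
    return f"{authorize_endpoint}?{urlencode(params)}", state


# Hypothetical values for illustration only.
url, state = build_authorization_url(
    "https://idp.example.com/oauth2/authorize",
    "third-party-app",
    "https://app.example.com/callback",
    ["email.read", "calendar.read"],
)

requested = parse_qs(urlparse(url).query)
print(requested["client_id"][0], "is requesting:", requested["scope"][0])
```

Nothing in this request tells the user whether the application behind `client_id` is trustworthy; that judgment is left to the person looking at the consent screen.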

Because OAuth was originally built for human-to-app interactions, it was designed to put the human in the middle of the exchange. Recent breaches of customer records at large technology companies, insurers, and airlines, among others, in which users were tricked into authorizing attacker-controlled OAuth applications, highlight the risks of relying on users to distinguish between benign and malicious apps. Users can be directed to legitimate sign-in and consent pages, with little context for scrutinizing the application requesting access. This can lead to "cognitive fatigue," in which a user is presented with too much information or too many decisions and becomes less able to assess risks effectively.

Beyond security, there are also usability concerns. Repeated or fragmented OAuth consent prompts can create a poor user experience and result in inconsistent functionality across users of the same product.

AI agents are forcing a breaking point

As AI agents use protocols like Model Context Protocol (MCP) and Agent2Agent (A2A) to connect to other systems, human-centric access models have already reached their limits. These agents are moving between environments, accessing data, and initiating workflows in ways that are difficult to track, let alone govern.

Cross App Access: A new standard for AI security

Recognizing these challenges, Okta has been working with industry leaders within standards bodies to develop Cross App Access (XAA). XAA is an open protocol that extends OAuth to secure interactions across ecosystems. It centralizes visibility and control of app-to-app and agent-to-app connections within the identity provider (IdP), rather than within individual applications. This helps ensure access policies are consistently enforced and easily auditable, while improving the end-user experience by removing repetitive authorization screens.

XAA treats AI agents as first-class entities, enabling their actions to be governed, audited, and secured like any other user or application.
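The idea of centralizing control in the IdP can be illustrated with a small sketch. This is not the XAA protocol or its wire format; it is a toy model, under assumed names, of an IdP that holds admin-defined connection policies, decides whether an app or agent may reach another app on a user's behalf, and records every decision for audit. `ConnectionPolicy`, `IdentityProvider`, and all identifiers below are hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class ConnectionPolicy:
    """Admin-defined rule: which requester may reach which app, with which scopes."""
    requester: str             # app or agent identifier (hypothetical)
    resource_app: str          # app whose data is being requested (hypothetical)
    allowed_scopes: frozenset  # scopes an administrator has approved


@dataclass
class IdentityProvider:
    """Toy IdP that makes and logs every cross-app access decision in one place."""
    policies: list = field(default_factory=list)
    audit_log: list = field(default_factory=list)

    def authorize(self, requester, resource_app, user, scopes):
        requested = set(scopes)
        # Allowed only if some policy covers this pair and every requested scope.
        allowed = any(
            p.requester == requester
            and p.resource_app == resource_app
            and requested <= p.allowed_scopes
            for p in self.policies
        )
        # Every decision, allowed or denied, is recorded centrally.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "requester": requester,
            "resource_app": resource_app,
            "user": user,
            "scopes": sorted(requested),
            "allowed": allowed,
        })
        return allowed


idp = IdentityProvider(policies=[
    ConnectionPolicy("notes-agent", "calendar-app", frozenset({"calendar.read"})),
])
ok = idp.authorize("notes-agent", "calendar-app", "alice", ["calendar.read"])
denied = idp.authorize("notes-agent", "calendar-app", "alice", ["calendar.write"])
print(ok, denied, len(idp.audit_log))
```

In this model the user never sees a consent prompt: the decision comes from policy set by an administrator, and the agent is handled through the same check and the same log as any other application.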

Driving industry alignment for a secure future

Industry leaders like Automation Anywhere, AWS, Boomi, Box, Glean, Google Cloud, Grammarly, Miro, Salesforce, and WRITER plan to support Cross App Access, recognizing the need for secure, transparent AI agent interactions across enterprise applications.

“As autonomous AI agents take on increasingly complex tasks across mission-critical operations, from finance and compliance to customer service, enterprises need full visibility and governance over every interaction between agents, models, and tools,” said Adi Kuruganti, Chief Product Officer, Automation Anywhere. “Cross App Access provides a critical new standard for building the trust required to securely scale these powerful capabilities across the enterprise.”

Securing every identity from humans to agents

Okta's vision is to build an identity security fabric that secures every identity, whether human, non-human, or AI agent, across all systems and applications, before, during, and after authentication. To make agents “fabric ready,” XAA is planned to be available soon with out-of-the-box support in Auth0, enabling B2B SaaS developers to build applications and AI tools that can natively participate in the protocol. It is also planned to be available within the Okta Platform fabric, giving organizations a way to enforce policies that govern how those agents interact across the enterprise.

Solving this age-old problem, now magnified by agentic AI, isn’t something one organization can do alone. It requires collective responsibility, industry-wide collaboration, and alignment around open standards to create secure ecosystems capable of keeping up with evolving technologies and threats.