Okta recently announced the results of an executive poll on AI security and oversight, which shows that while Australian organisations are rapidly embracing AI, many are still defining who owns the risks and how best to govern them.

The live poll, conducted across Okta's Oktane on the Road events in Sydney and Melbourne, captured responses from 219 technology and security executives. The findings show that AI adoption and awareness are accelerating, but that governance frameworks and identity systems now need to mature at the same pace.

Key findings

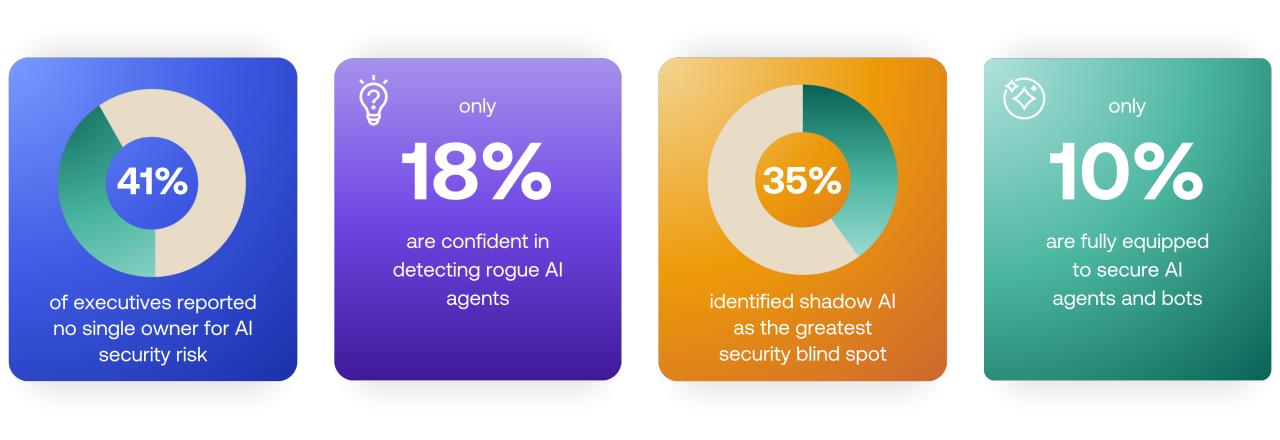

41% of respondents said no single person or function currently owns AI security risk in their organisation.

Only 18% said they were confident they could detect if an AI agent acted outside its intended scope.

Shadow AI (unapproved or unmonitored AI tools) was identified as the top security blind spot (35%), followed by data leakage through integrations (33%).

Just 10% said their identity systems were fully equipped to secure non-human identities such as AI agents, bots, and service accounts, while 52% said they were partially equipped.

Board awareness is improving, with 70% saying their boards are aware of AI-related risks, but only 28% saying their boards are fully engaged in oversight.

The findings highlight Australia's enthusiasm for AI and the growing recognition that security and governance must evolve in parallel.

"Australian organisations are embracing AI with real momentum, and that's a positive sign," said Mike Reddie, Vice President and Country Manager, Okta ANZ. "We are seeing a shift from early experimentation to responsible, strategic adoption. The next step is ensuring governance and security evolve at the same pace."

"Securing AI isn't about slowing progress; it's about starting with the right foundation. When identity is strong, trust follows, and that's what enables innovation to scale safely and sustainably," Reddie added.

The results show that most organisations already view identity as central to building AI trust. However, many are still adapting traditional access controls to the new risks posed by AI agents and automation.

Okta's recent AI at Work 2025 report found that 91% of organisations globally are already using or experimenting with AI agents. Yet fewer than 10% have a strategy to secure them.

As AI becomes more mainstream, organisations must apply the same discipline to securing AI agents as they do to securing human users, helping ensure every agent has a verified identity, defined permissions, and full auditability.

Methodology

All results are from live, interactive polls conducted during the Oktane on the Road event series in Sydney and Melbourne in October 2025. The polls, administered using the Slido platform, targeted Okta customers and partners, specifically senior IT and cybersecurity professionals attending the events. The total number of respondents was 219.

The poll consisted of five questions to gauge executive sentiment on the use of AI. The questions were designed to measure where customers are on their AI journey and how they are evolving. The collected data was used to generate country-specific results. The Okta Newsroom team analysed the results and produced this article.