An Automated Approach to Convert Okta System Logs into Open Cybersecurity Schema Framework (OCSF) Schema

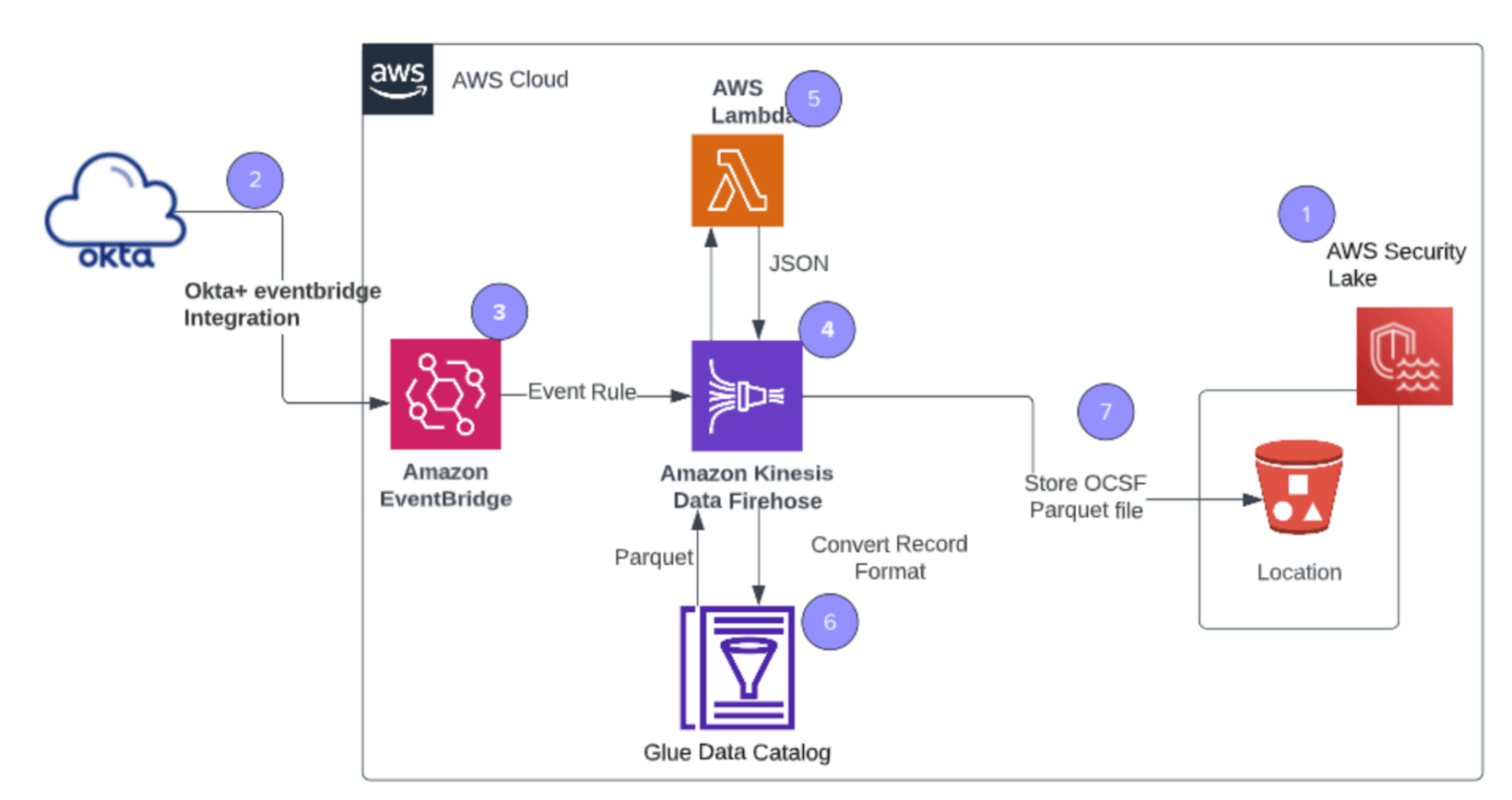

This solution demonstrates the setup required to convert Okta System Log events into Apache Parquet files. The converted, OCSF-formatted Okta System Log events are stored in an Amazon S3 bucket. Amazon Security Lake consumers can then use the OCSF logs from the S3 bucket for downstream analytical processes.

This post shows how to convert Okta System Log events using Amazon EventBridge, Amazon Kinesis Data Firehose, and AWS Lambda functions. The Kinesis Data Firehose delivery stream uses record format conversion to turn the JSON data into Parquet before sending it to the S3 bucket backed by Amazon Security Lake.

Open Cybersecurity Schema Framework (OCSF)

The Open Cybersecurity Schema Framework is an open-source project that delivers an extensible framework for developing schemas and a vendor-agnostic core security schema. Vendors and other data producers can adopt and extend the schema for their specific domains. Data engineers can map differing schemas to help security teams simplify data ingestion and normalization so that data scientists and analysts can work with a common language for threat detection and investigation. The goal is to provide an open standard adopted in any environment, application, or solution while complementing existing security standards and processes. You can find more information here: https://github.com/ocsf/

Okta produces critical logging information about your identities and actions. The Okta System Log records events related to your organization, providing an audit trail that helps you understand platform activity and diagnose security problems. Converting Okta’s System Log events to an OCSF-compatible format helps customers query security events using an open-standard schema, while complementing all existing security events.

Architecture diagram

Here’s how to convert incoming Okta System Log JSON data: an AWS Lambda function maps each event to OCSF, and the format conversion feature of Kinesis Data Firehose converts the resulting JSON into Parquet.

- Step 1: Create a custom source using the Amazon Security Lake service.

- Step 2: Create the integration between Okta and Amazon EventBridge.

- Step 3: Define an event rule filter that captures events from the Okta System Log and forwards them to Amazon Kinesis Data Firehose.

- Step 4: The Firehose delivery stream invokes a Lambda function as it receives an event from EventBridge. The Lambda function transforms Okta’s System Log data into OCSF-formatted JSON.

- Step 5: Configure the Firehose delivery stream to transform Okta System Log events into OCSF format by invoking the Lambda function.

- Step 6: Configure the Firehose delivery stream to convert the OCSF-formatted JSON from step 4 into Parquet records. (Parquet is the only format that Amazon Security Lake accepts.)

- Step 7: Converted Parquet files with OCSF schema will be stored in an S3 bucket created as part of Amazon Security Lake’s custom sources.
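The transformation in steps 4 and 5 can be sketched as a Firehose data-transformation Lambda handler. This is a minimal sketch, not the function shipped in the repository: the OCSF field selection (Authentication class, `class_uid` 3002), the Okta fields read from `detail`, and the `YYYYMMDD` format for `eventDay` are illustrative assumptions. The record contract (`recordId`, `result`, base64 `data`, and `metadata.partitionKeys`) is the one Firehose expects from a transformation function.

```python
import base64
import json
from datetime import datetime, timezone


def to_ocsf(eb_event):
    """Map one EventBridge-wrapped Okta event to a minimal OCSF-style record.

    The field mapping is an illustrative assumption, not the repository's mapping.
    """
    detail = eb_event["detail"]
    # Okta timestamps look like 2023-01-01T12:00:00.000Z (UTC).
    published = datetime.strptime(
        detail["published"], "%Y-%m-%dT%H:%M:%S.%fZ"
    ).replace(tzinfo=timezone.utc)
    succeeded = detail.get("outcome", {}).get("result") == "SUCCESS"
    record = {
        "class_uid": 3002,   # OCSF Authentication class
        "category_uid": 3,   # Identity & Access Management
        "activity_id": 1,    # Logon
        "type_uid": 300201,  # class_uid * 100 + activity_id
        "time": round(published.timestamp() * 1000),  # epoch milliseconds
        "status_id": 1 if succeeded else 2,           # 1 = Success, 2 = Failure
        "message": detail.get("displayMessage", ""),
        "user": {"name": detail.get("actor", {}).get("alternateId", "")},
        "metadata": {"product": {"name": "Okta System Log", "vendor_name": "Okta"}},
    }
    # Key names follow the S3 prefix used later in this post.
    partition_keys = {
        "region": eb_event["region"],
        "AWS_account": eb_event["account"],
        "eventDay": published.strftime("%Y%m%d"),  # assumed format
    }
    return record, partition_keys


def lambda_handler(event, context):
    """Transform each Firehose record; mark unparseable ones as failed."""
    output = []
    for record in event["records"]:
        try:
            payload = json.loads(base64.b64decode(record["data"]))
            ocsf, keys = to_ocsf(payload)
            data = base64.b64encode((json.dumps(ocsf) + "\n").encode("utf-8"))
            output.append({
                "recordId": record["recordId"],
                "result": "Ok",
                "data": data.decode("utf-8"),
                "metadata": {"partitionKeys": keys},
            })
        except (KeyError, ValueError):
            # Return the original payload so Firehose can route it to the error prefix.
            output.append({
                "recordId": record["recordId"],
                "result": "ProcessingFailed",
                "data": record["data"],
            })
    return {"records": output}
```

Returning `ProcessingFailed` instead of raising keeps one malformed event from failing the whole batch.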

Preparing the environment

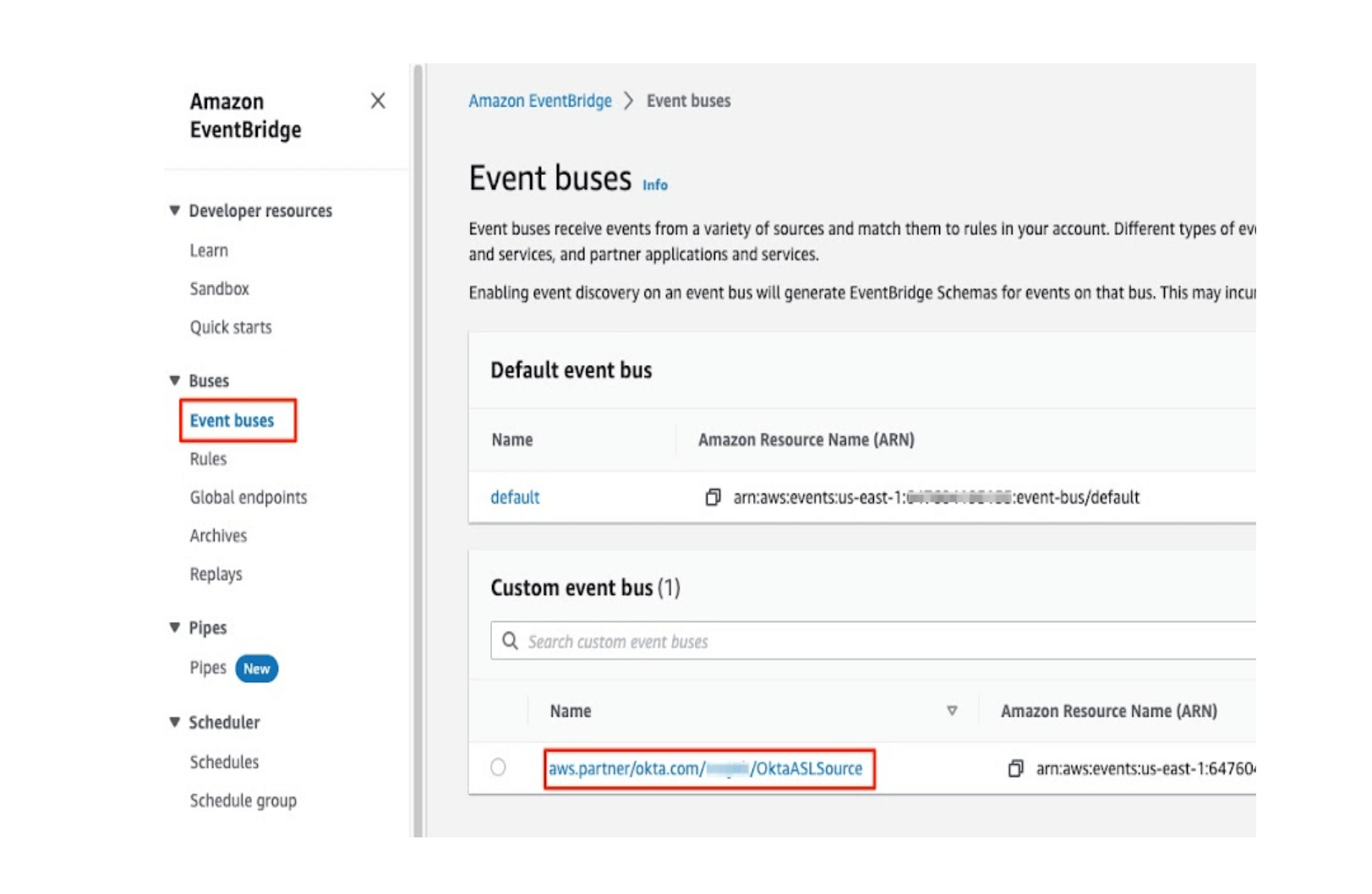

Before diving in, you need the Okta and Amazon EventBridge integration in place. Okta sends System Log events to Amazon EventBridge through a log stream, which you add in Okta and then configure in the Amazon EventBridge console. You can find the document for setting up the Okta and Amazon EventBridge integration here.

After completing the Okta and EventBridge integration, you will notice an Event bus created in the EventBridge service console. Please note the Event bus name. This Event bus name is an input to the AWS CloudFormation template.
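The rule attached to this event bus decides which events reach Firehose. The sketch below shows what a prefix-based event pattern for such a rule might look like. The source prefix `aws.partner/okta.com` and the sample source name are assumptions for illustration; check the actual event source name shown in your EventBridge console. The `matches` helper evaluates only this one prefix filter, not the full EventBridge pattern language.

```python
import json

# Assumed partner event source prefix; verify it against your event bus name.
OKTA_SOURCE_PREFIX = "aws.partner/okta.com"


def build_event_pattern(source_prefix=OKTA_SOURCE_PREFIX):
    """Return an EventBridge event pattern that matches on a source prefix."""
    return {"source": [{"prefix": source_prefix}]}


def matches(pattern, event):
    """Evaluate only the 'source' prefix filter built above."""
    source = event.get("source", "")
    return any(source.startswith(rule["prefix"]) for rule in pattern["source"])


pattern = build_event_pattern()
# The JSON form is what you would paste into the rule's event pattern editor.
print(json.dumps(pattern))
```

An event whose `source` starts with the prefix passes the filter; events from other sources on the same bus do not.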

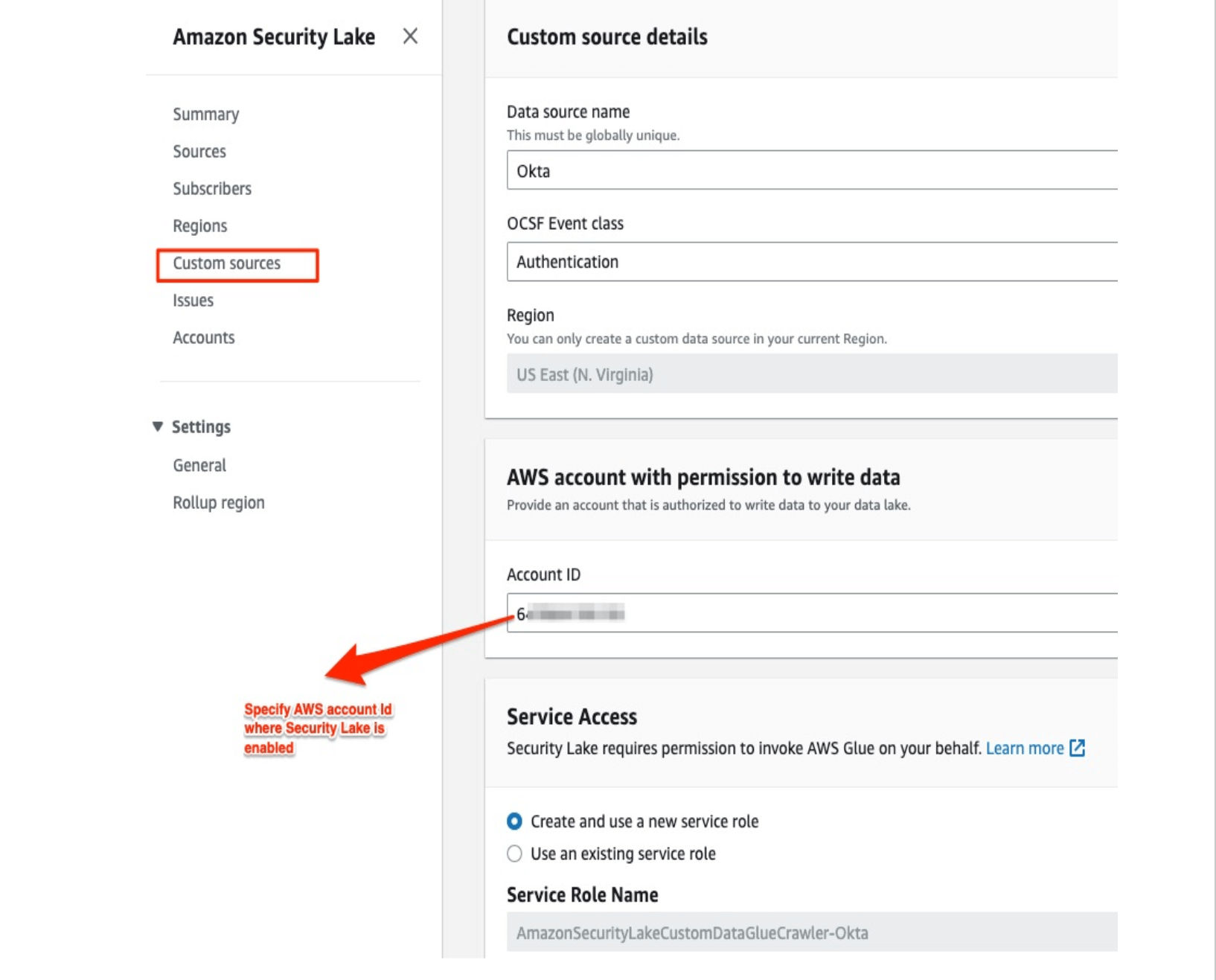

After completing the above steps, you must create a custom source in Amazon Security Lake. Please follow the link to add a custom source.

Creating a custom source in Amazon Security Lake

Once you enable the Amazon Security Lake service, copy the S3 location name from the AWS console under Regions.

Note: Please use the AWS account ID where Amazon Security Lake is configured and enabled. This solution can’t deliver OCSF logs to a different account (cross-account delivery).

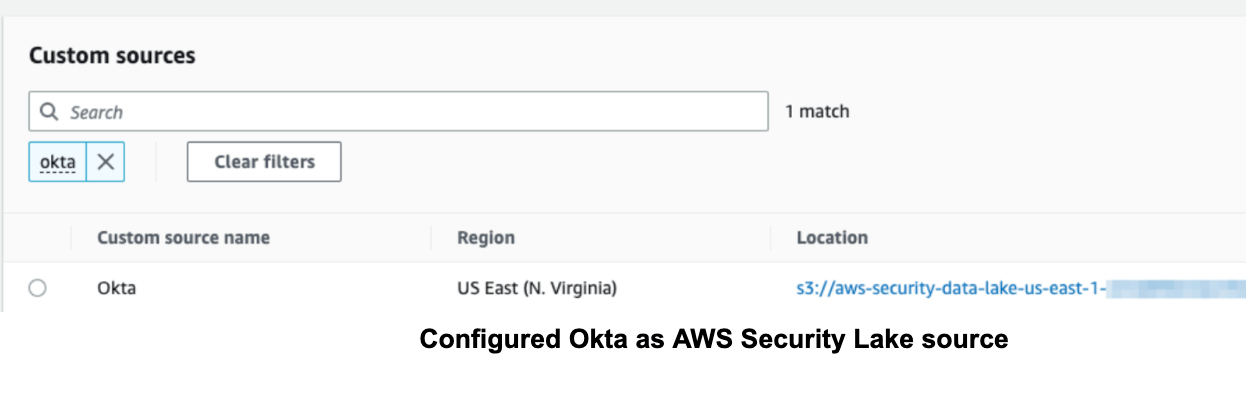

Configure Okta as an Amazon Security Lake source

Deployment

The approach here uses AWS CloudFormation to model, provision, and manage AWS services by treating infrastructure as code. The CloudFormation template launches the following AWS resources:

- AWS Lambda

- Amazon Kinesis Data Firehose delivery stream

- AWS Glue Database and Table for Data Transformation

- AWS Lake Formation permissions

- Amazon EventBridge rule

The CloudFormation template for deploying this solution can be found here: https://github.com/okta/okta-ocsf-syslog.

Step 1 – Create Lab resources using AWS CloudFormation

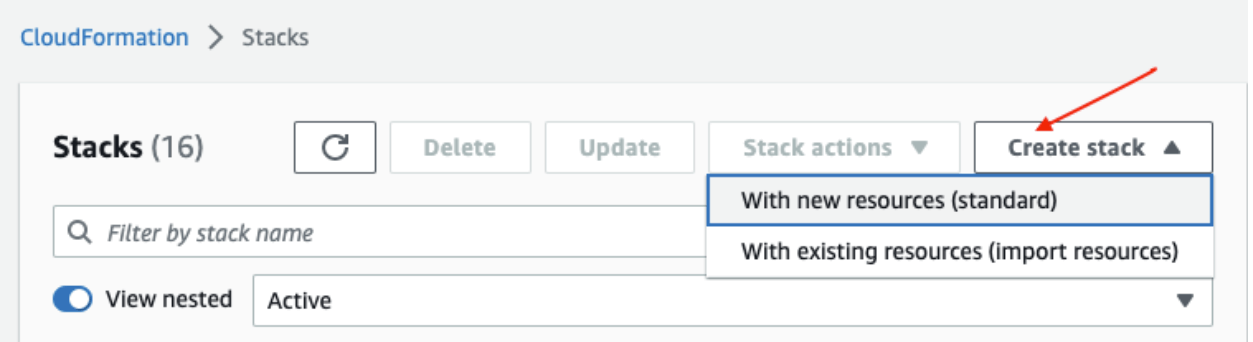

Click "Create a stack with new resources."

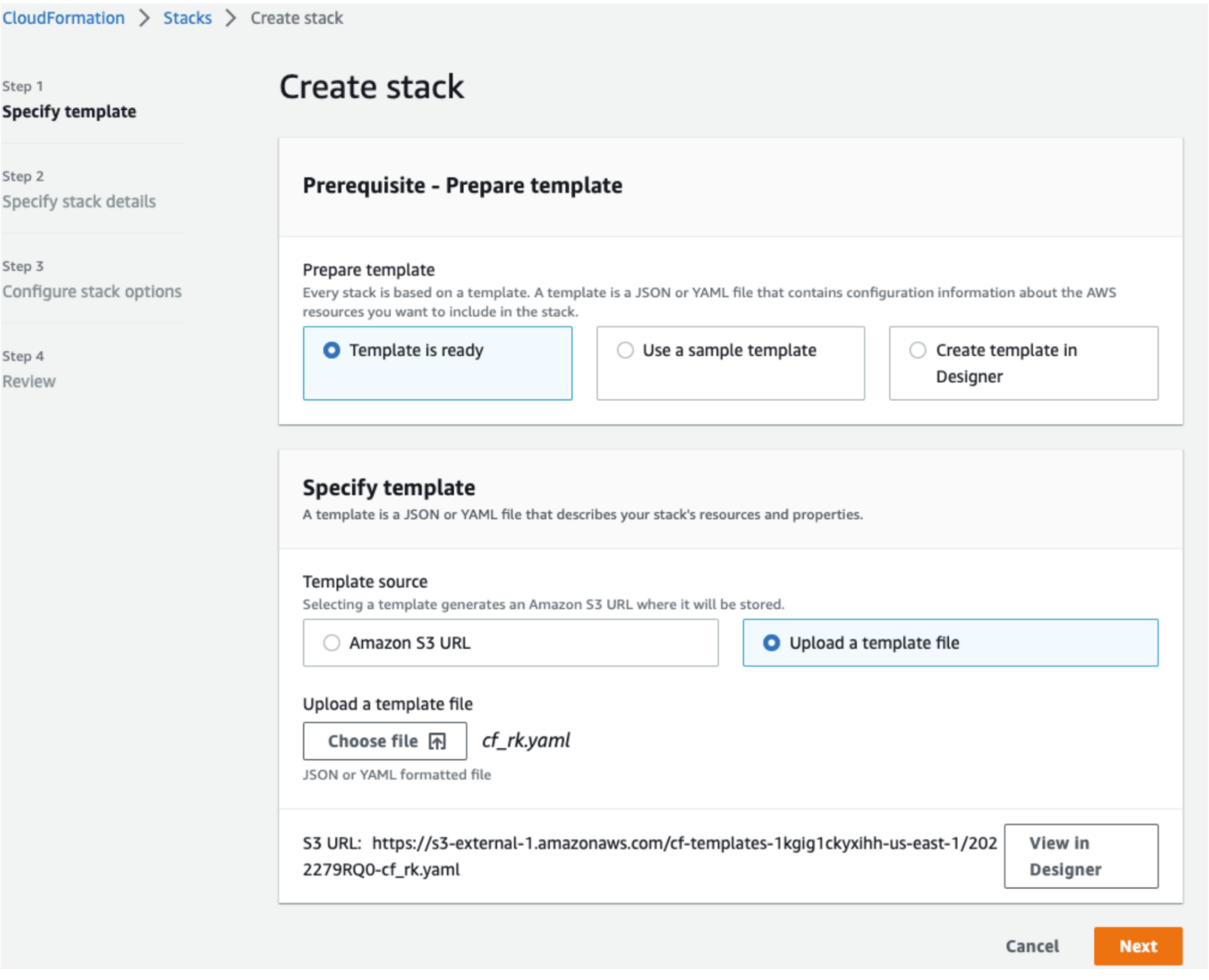

On the "Create stack" page, select the CloudFormation template, which can be on your local system or in an S3 bucket, and click "Next."

On the "Specify stack details" page, enter the Partner EventBus and S3 bucket location from the Amazon Security Lake custom source, and click "Next."

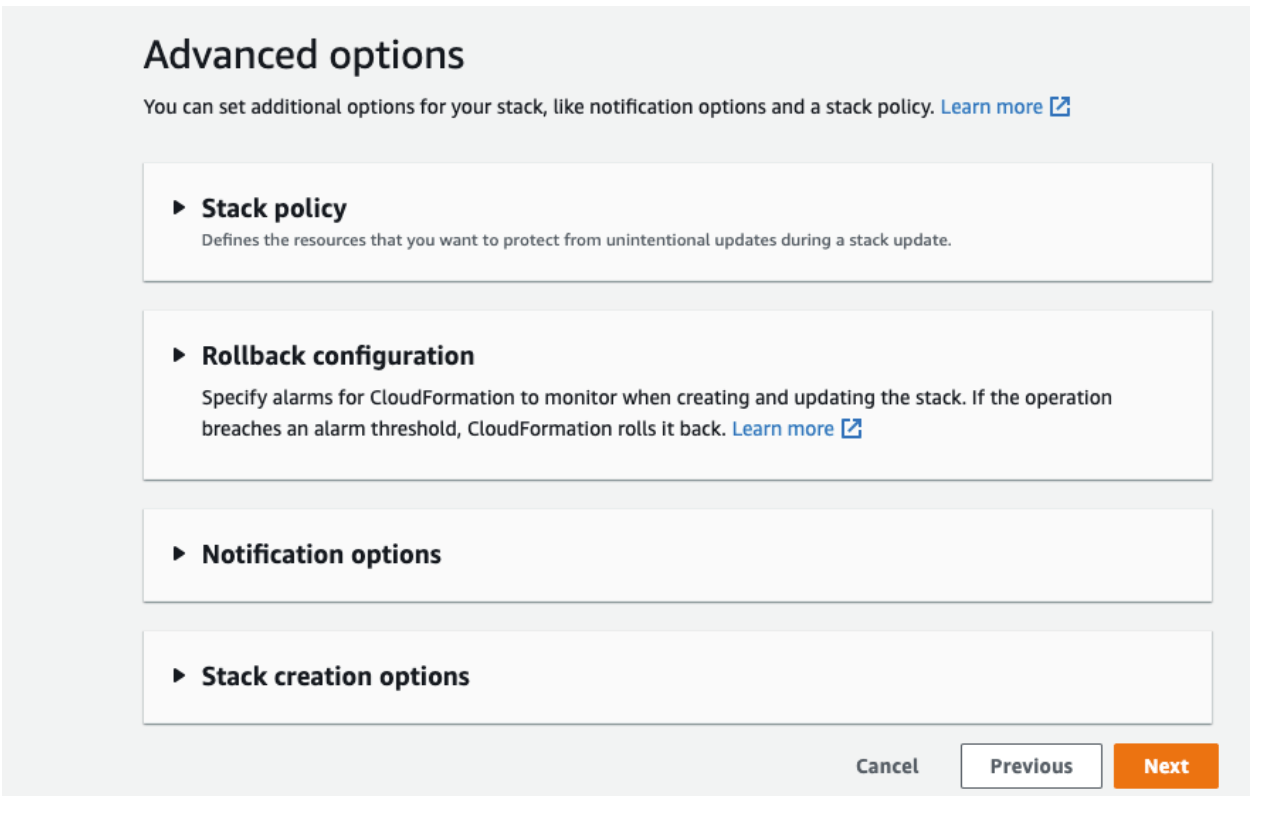

On the "Stack options" page, scroll down and click "Next" without changing anything.

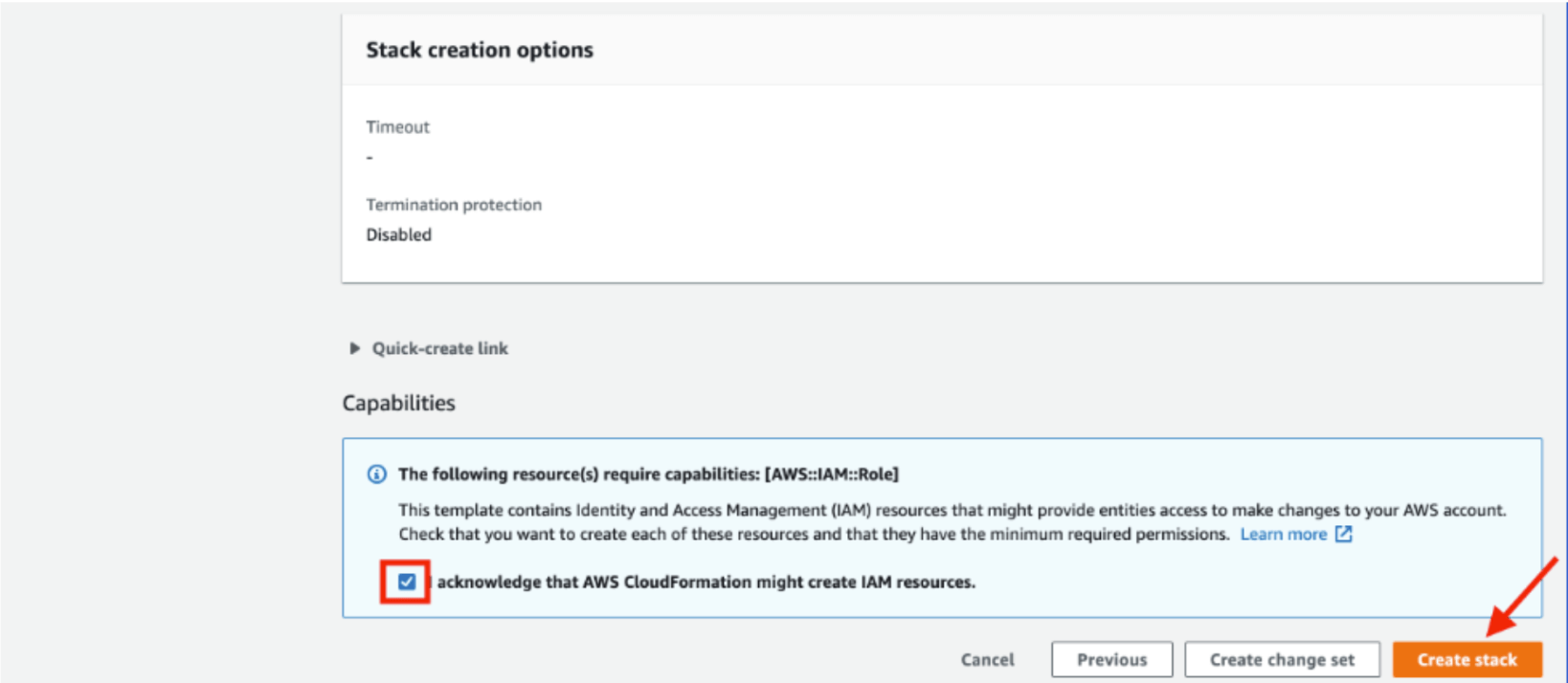

On the "Review" page, review the configuration, check the acknowledgment at the bottom, and click "Create stack."

The CloudFormation stack creation can take up to five minutes to complete. You will notice the status changes from CREATE_IN_PROGRESS to CREATE_COMPLETE during the creation process.
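If you prefer to watch the status from a script rather than the console, the polling loop can be sketched as below. This is a sketch under assumptions: the client is passed in so the function can be exercised without AWS access, and the stack name in the usage comment is hypothetical. `describe_stacks` is the boto3 CloudFormation call that returns `StackStatus`.

```python
import time


def wait_for_stack(cfn_client, stack_name, poll_seconds=15, max_polls=40):
    """Poll until the stack leaves CREATE_IN_PROGRESS and return its final status."""
    for _ in range(max_polls):
        stacks = cfn_client.describe_stacks(StackName=stack_name)["Stacks"]
        status = stacks[0]["StackStatus"]
        if status != "CREATE_IN_PROGRESS":
            return status
        time.sleep(poll_seconds)
    raise TimeoutError(f"Stack {stack_name} still in progress after {max_polls} polls")


# Usage with boto3 (stack name is a hypothetical placeholder):
#   import boto3
#   status = wait_for_stack(boto3.client("cloudformation"), "okta-ocsf-stack")
#   print(status)
```

A returned status other than `CREATE_COMPLETE`, such as `ROLLBACK_COMPLETE`, means creation failed and the stack events should be reviewed.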

Once the process is complete, review the "Outputs" section to validate.

Step 2 - Verify CloudFormation resources

Follow the steps below to verify the resources created by the CloudFormation stack in the previous step.

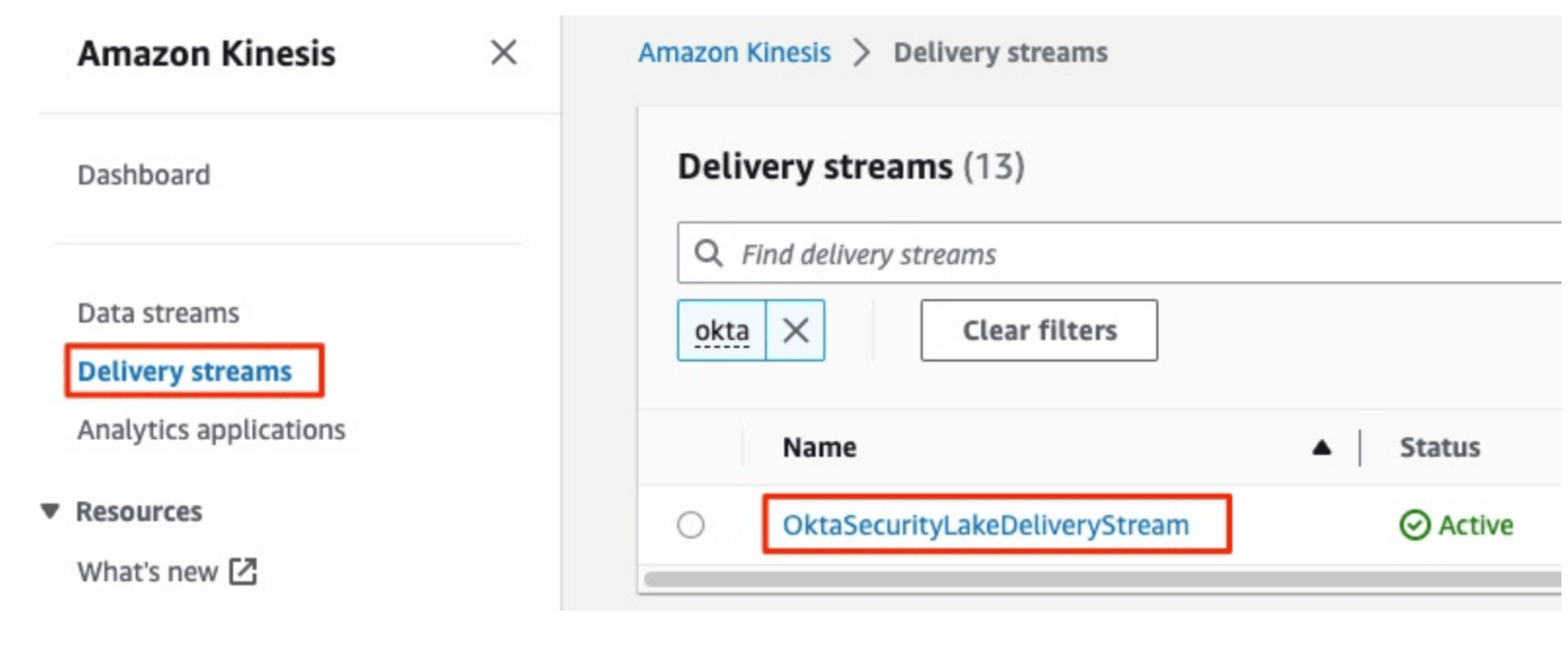

Open the Kinesis Data Firehose Console. Here, you will see “access-logs-sink-parquet” as a delivery stream. Click the access-logs-sink-parquet delivery stream.

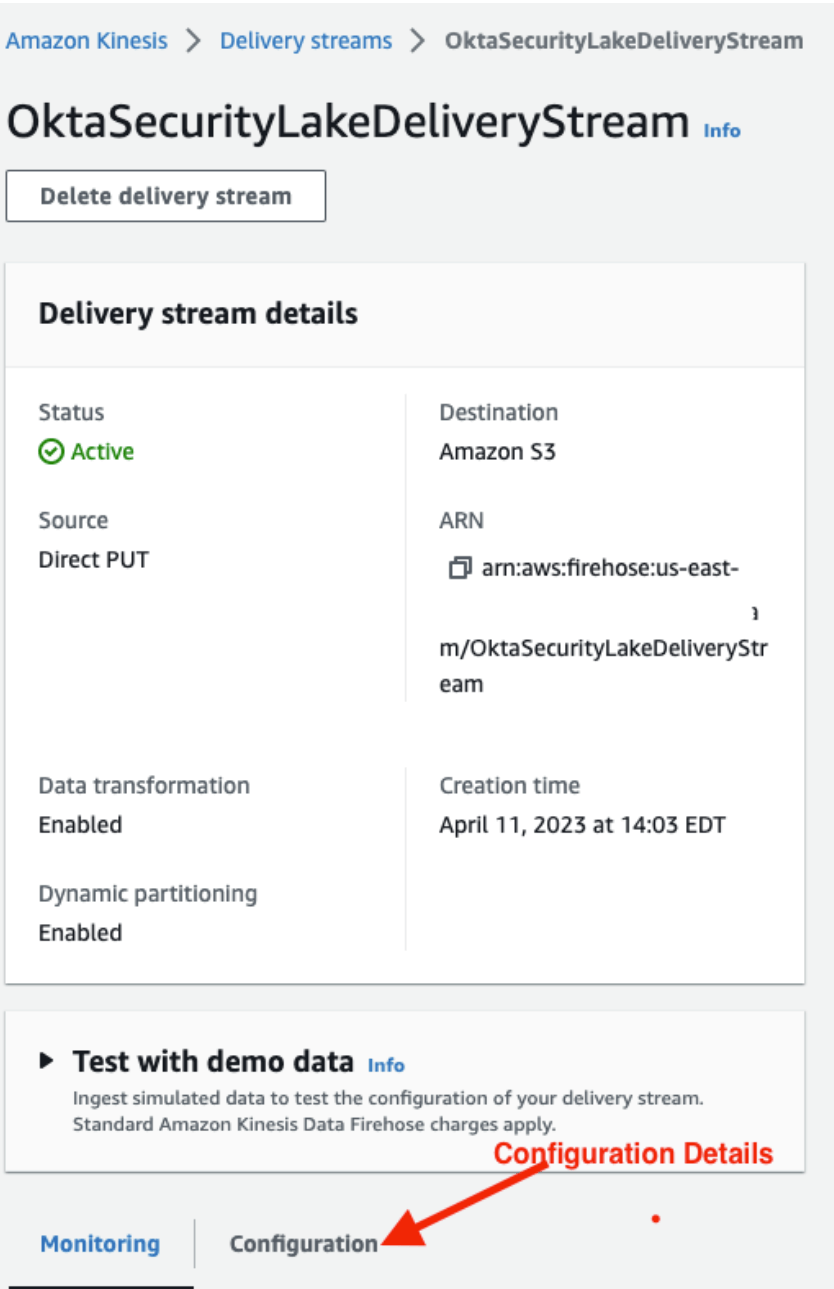

Review delivery stream configuration details.

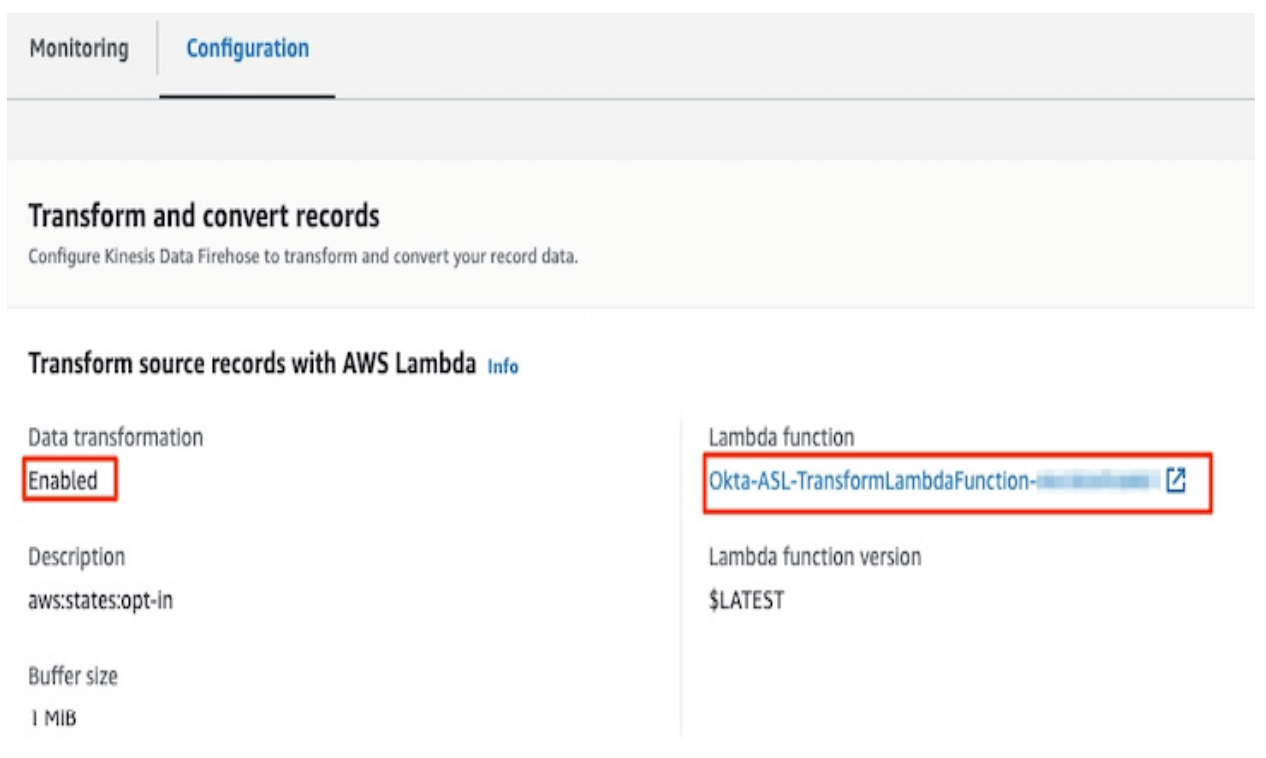

Notice that data transformation is enabled. The Lambda function will transform incoming Okta System Log events into OCSF JSON format.

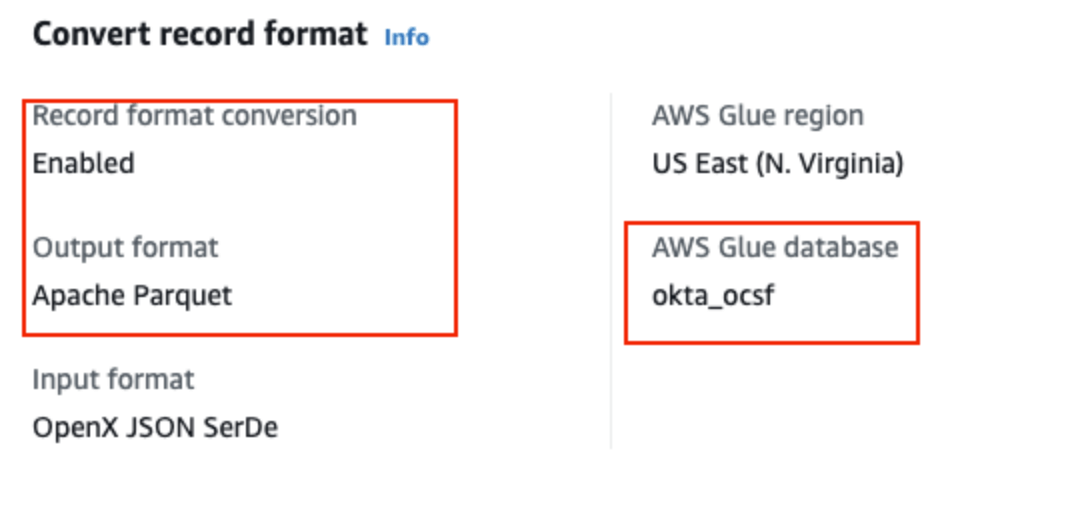

Review the "Convert record format" section for the Kinesis Firehose. This section shows that the record format conversion is enabled, and the output format is "Apache Parquet."

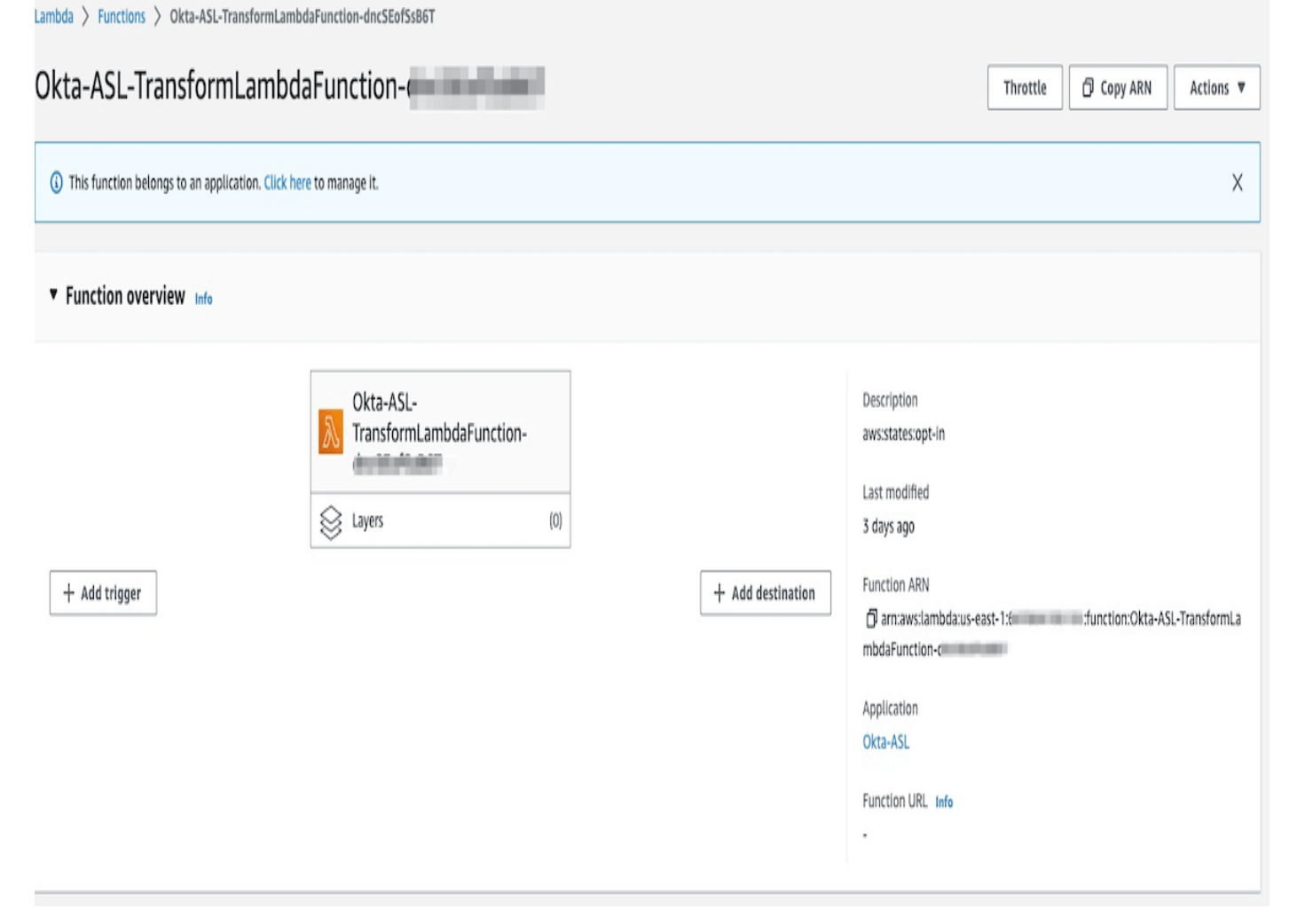

Click the Lambda function to review the Lambda configuration.

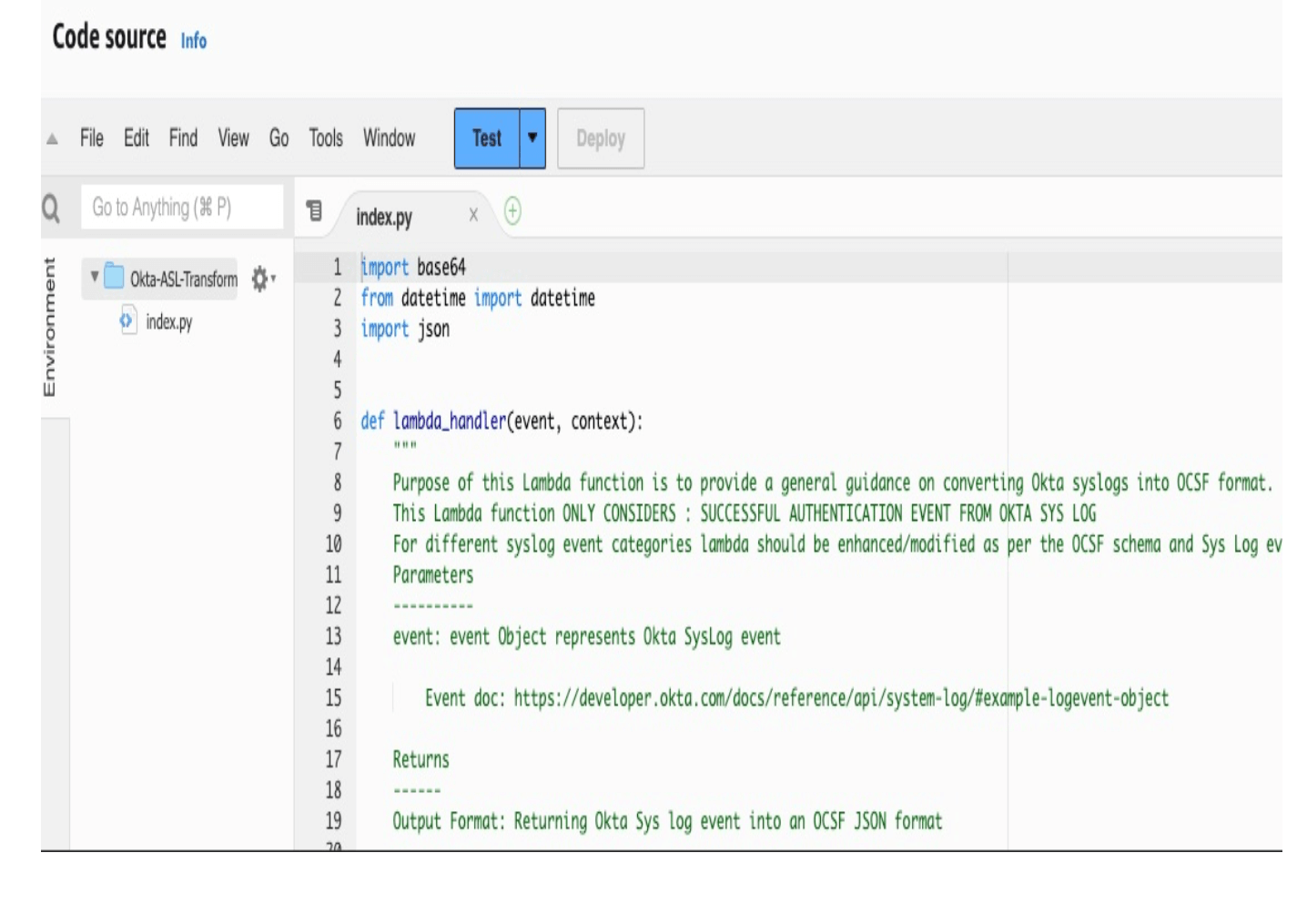

Click the "Code" tab to review the Lambda function code. The Lambda function transforms the incoming Okta System Log events into OCSF-formatted JSON.

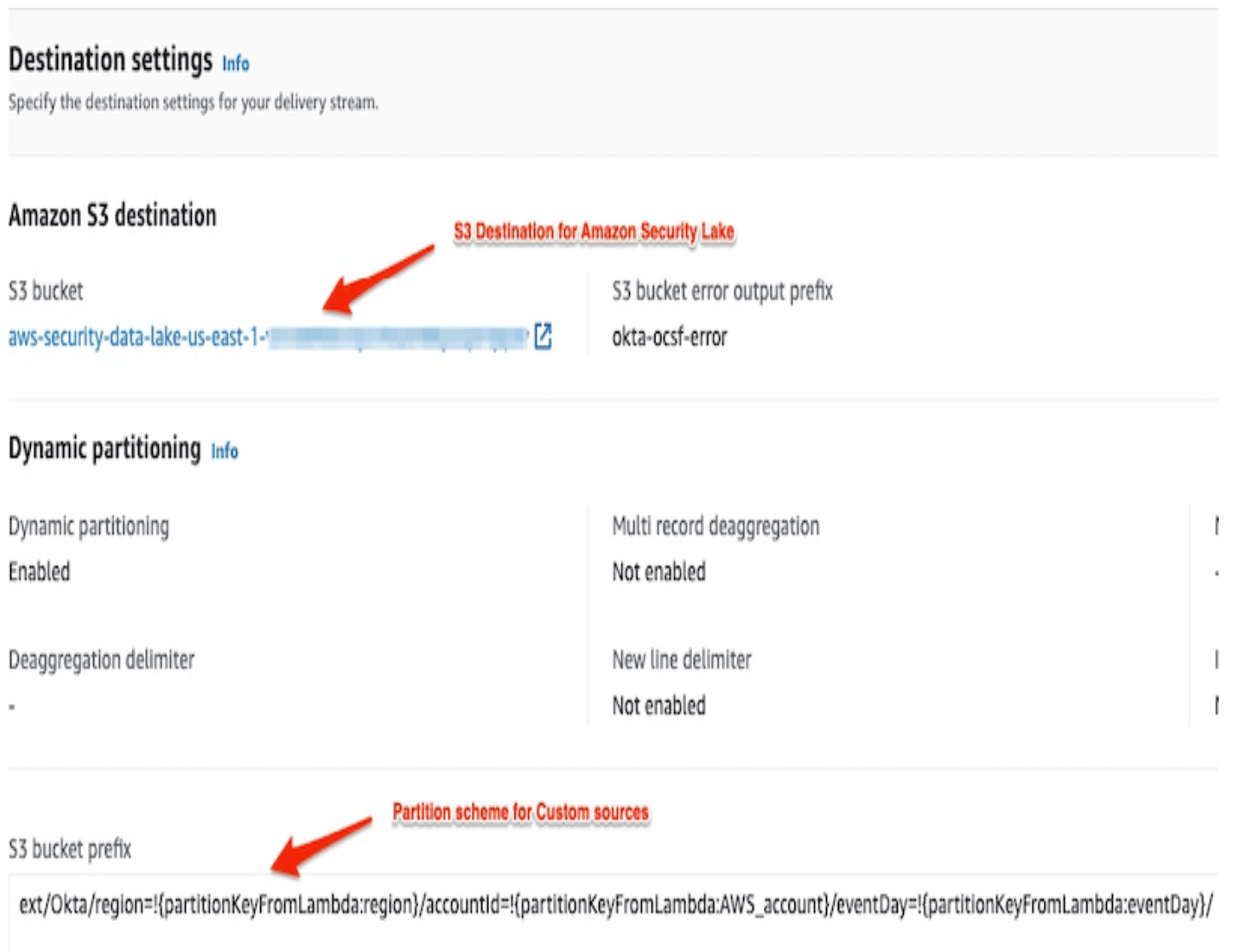

Return to the "Kinesis Firehose details" window, and view "Destination Settings."

- Amazon S3 Destination: Placeholder for final OCSF schema logs

- Dynamic Partitioning: The Amazon S3 bucket is partitioned by the source of events (Okta System Log), region, account, and time.

- Amazon S3 bucket prefix values: Configured via the CloudFormation template.

Generate Streaming Data (Test the setup)

This walkthrough uses a successful Okta authentication event as the test case. The Lambda function, OCSF schema, and other artifacts are designed for this event type. To trigger the event, a user must log in to an Okta portal.

Once you sign in to Okta as a user, Okta generates the corresponding System Log event.

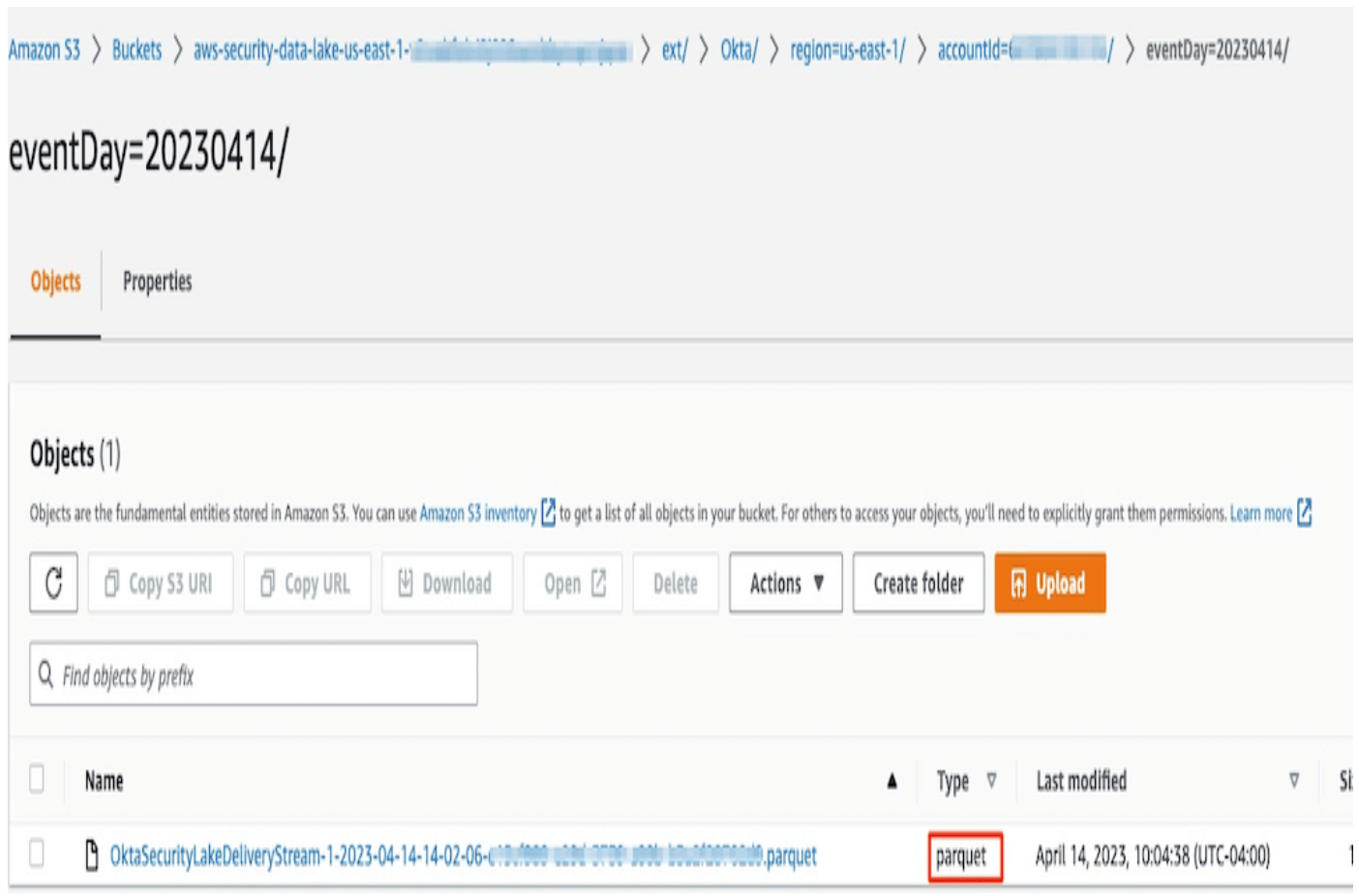

Verify the data in the S3 bucket

Navigate to the S3 Console.

Select the S3 bucket created as part of the Security Lake initiation. You will see the data created under the access-logs-parquet/ folder, with a prefix of the form ext/okta/region=!{partitionKeyFromLambda:region}/accountId=!{partitionKeyFromLambda:AWS_account}/eventDay=!{partitionKeyFromLambda:eventDay}/
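Each `!{partitionKeyFromLambda:...}` placeholder is filled in by Firehose with the partition key of the same name returned by the Lambda function. The sketch below mimics that substitution locally so you can predict the folder a given event lands in. The key values shown are made-up examples, and the function is an illustration of the substitution, not Firehose's own implementation.

```python
import re

# Matches placeholders such as !{partitionKeyFromLambda:region}
_PLACEHOLDER = re.compile(r"!\{partitionKeyFromLambda:([^}]+)\}")


def expand_prefix(template, partition_keys):
    """Replace each placeholder with the matching partition key value."""
    return _PLACEHOLDER.sub(lambda m: partition_keys[m.group(1)], template)


template = (
    "ext/okta/region=!{partitionKeyFromLambda:region}/"
    "accountId=!{partitionKeyFromLambda:AWS_account}/"
    "eventDay=!{partitionKeyFromLambda:eventDay}/"
)
# Example values only; real values come from the Lambda function's output.
keys = {"region": "us-east-1", "AWS_account": "123456789012", "eventDay": "20230101"}
print(expand_prefix(template, keys))
```

A missing key raises `KeyError` here, which is a useful local check that the Lambda function returns every key the prefix references.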

Verify Parquet files and OCSF schema

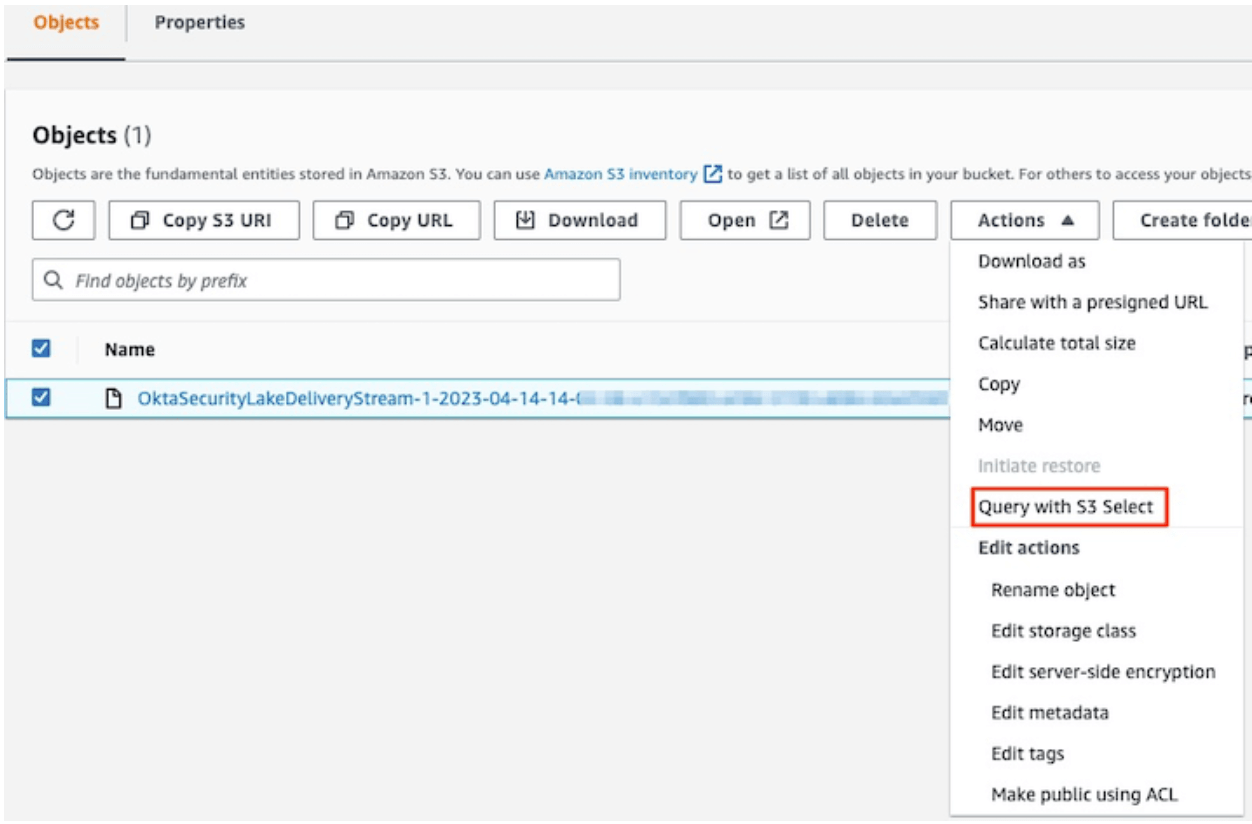

Select the converted Parquet file, click "Actions," and then click "Query with S3 Select."

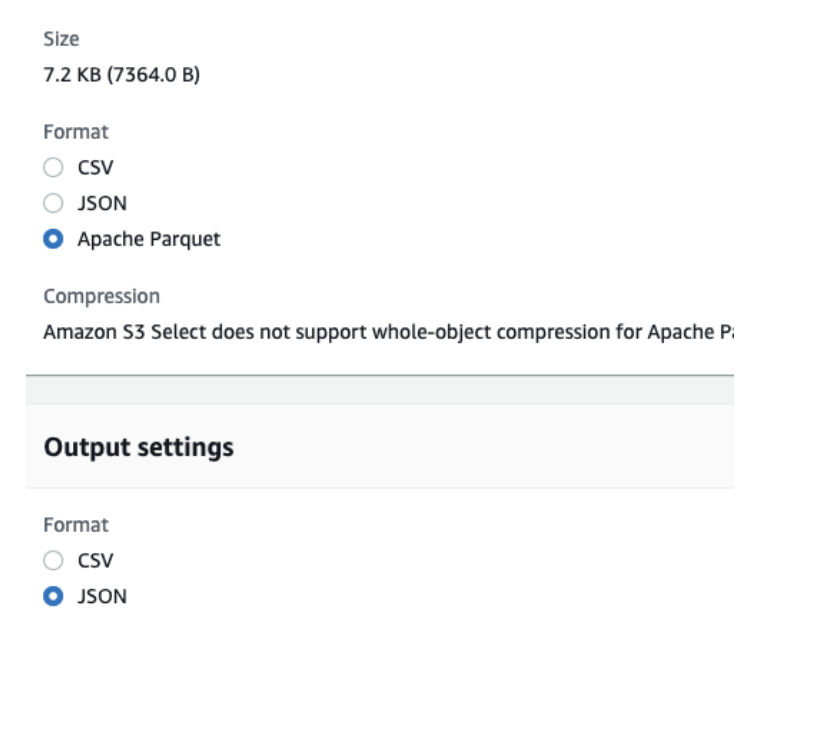

Select Input and Output settings

Select input as Apache Parquet and output as JSON string.

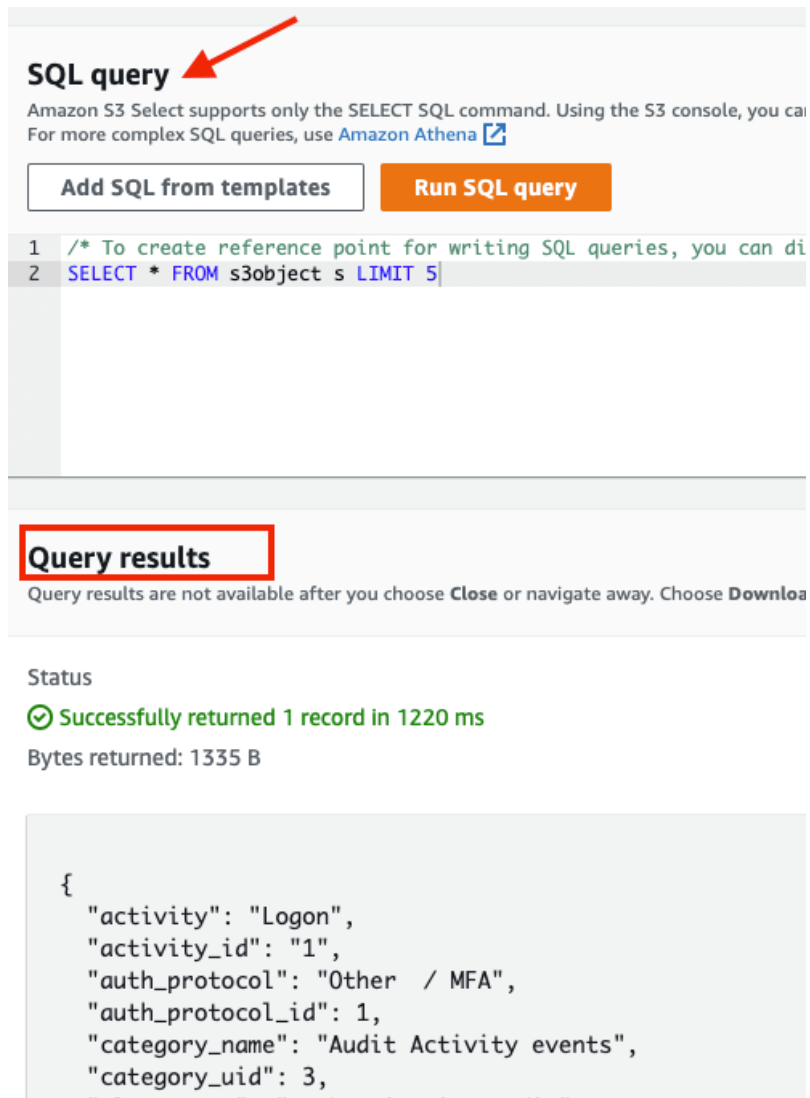

Query the result

Go to the SQL query section, and click the "Run SQL query" button. You will notice that the query result data is in the OCSF schema.
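The same query can be issued programmatically. The sketch below only builds the request arguments for the S3 `select_object_content` call, with Parquet input and JSON output as selected in the console steps above. The bucket and key in the usage comment are hypothetical placeholders, and the call assumes S3 Select is available in your account.

```python
def build_select_request(bucket, key, limit=5):
    """Build keyword arguments for the S3 select_object_content API call."""
    return {
        "Bucket": bucket,
        "Key": key,
        "ExpressionType": "SQL",
        "Expression": f"SELECT * FROM s3object s LIMIT {int(limit)}",
        "InputSerialization": {"Parquet": {}},
        "OutputSerialization": {"JSON": {"RecordDelimiter": "\n"}},
    }


# Usage with boto3 (bucket and key are hypothetical placeholders):
#   import boto3
#   request = build_select_request("my-security-lake-bucket", "ext/okta/.../file.parquet")
#   response = boto3.client("s3").select_object_content(**request)
#   for event in response["Payload"]:
#       if "Records" in event:
#           print(event["Records"]["Payload"].decode("utf-8"))
```

Each line printed is one OCSF record as a JSON string, which makes it easy to confirm that fields such as `class_uid` are present.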

Conclusion

Security teams struggle to normalize data across various sources and in multiple formats. Adopting the OCSF schema for all generated logs helps security teams efficiently analyze and query security events and incidents. The approach described here demonstrates how customers can fully automate their data normalization process.