What About the Customer? Leveraging Customer Identity to Better Engage Your Customers

Transcript

Jen: Good morning, everyone. How's everyone doing? Good. So today, I'm joined by Robert and Paul, and we're really thrilled to talk to you about the digital customer experience at Con Edison. I want to start off with a quick overview. Robert's going to go through a technical deep dive of everything that we did and how we did it with API access management. How many developers or engineers do we have in the crowd today? Good. Make sure you pay attention to that one. And Paul is going to wrap up and close with some really practical, sort of retrospective items, which I think you're all really going to enjoy. The focus today is about customers, right? And if we think about it, all IT organizations, whether it's us or you, have two types of customers. You have your external customers here on the right. Those are the people who are actually using your applications day-to-day, on the website, right? And then on the other side of the house, you have those other applications, your third-party providers. Either way, those are the people or the other applications, those consumers, that are using your service layer. You support that as well. And it's not just unique to us; it applies to you too, and today we're going to talk about these two different customers and how they transfer data between each other, through the service layer, through the digital channels, to all of those external customers. We're going to talk about who they are to us and to you. So Con Edison, anyone here from New York? East coast? Excellent. Con Edison, as you already know, is your electric utility in New York. That's the five boroughs: Brooklyn, Queens, Staten Island, Manhattan, and the Bronx. We also have Westchester, and it's also ... Westchester is also represented in Orange and Rockland, our sister utility company. We also do more than electricity. We are a gas and steam distributor as well. And with all these things put together, both Con Edison and Orange and Rockland, we've got 3.5 million users.
And so these are account based, right? But what this doesn't really represent is the additional user group, which could be larger. But try to guess for me: how many of these 3.5 million customers do you think actually have a digital presence with us? Any thoughts? How many?

Speaker 1: 100 thousand.

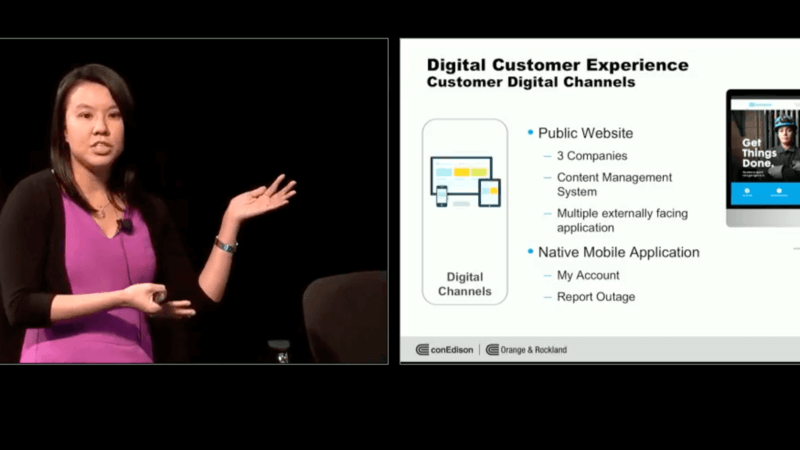

Jen: 10 percent. About a million actually, right? And so it's about a third. And so that's great. But there was a change sort of going on in Con Edison and the culture, and we really wanted to increase that. We saw the ever-changing needs in technology, right? We saw our business needs go up and the demands of our business go up, and so our executives really wanted us to transform. They challenged us to get this digital footprint up higher, right? So how are we going to do that? So the Digital Customer Experience Program was born. It's a multimillion-dollar, triple joint initiative between IT, customer operations, and our branding department, Corporate Affairs. And together, we went on this journey. We had a vision about one company, one experience for our customers that meets the needs of today but also our needs for tomorrow. And so with that, we built up a vision and our pillars: customer first, agility, security, preferences, and there's one more. And security, right? And so together, we took this idea and we transformed our public website. We've come very far since then. We took away the 20000 million pages out there. We changed it into a beautiful website, UI-designed, with one central location. This website actually covers both companies: Con Edison, conEd.com, and ORU.com. They all have the same look and feel. We also launched our native mobile apps in March for the My Account experience and for reporting outages.

And so this is great, and this experience, these are all our digital channels. They just keep growing, and we had to really think about how we were going to support this from an infrastructure standpoint. Which leads us to our service layer. It was really important for us not to just do a facelift of the UI of our website, but also to look under the hood, replace that two-liter engine with a Tesla electric one, and really make it robust, make it innovative, and drive it forward. These are some of the technical components of the service layer, which Robert's going to talk about later. But what was really important to us is that there's one enterprise service layer, one single place for all our consumers to touch our APIs and deliver that information to our customers.

So let's talk about some of those consumers, right? Those other customers that we have to support as IT. One group is our third-party providers. You might be aware of one of them that we use today: it's Okta, for all those single sign-on APIs that grow our service layer. We also have other third-party providers for preferences and payments. We also have our internal applications, which need to communicate through our digital channels to our customers. We also have our interfaces to our source systems, which protect and get data to and from those legacy source systems for those applications.

And so all of these consumers need to talk to that one centralized source of information on the service layer, where all my APIs are stored. The problem is I have all these consumers and I have all these digital channels that just keep growing, right? And we keep adding more and more. We have other third parties who we want to share our data with, and they don't necessarily need to go through the UI to get to the service layer. And so how are we going to protect all the applications and all the data, and make sure consumers only have the rights that they need?

Well when we were building this infrastructure about a year ago, API access management came into preview. We took a look at it, and said this was the perfect thing to wrap our service layer, protect our digital channels that keep growing, and to protect our source systems on the backend side. And today, I'm going to turn it over to Robert to give us some more of an idea of how we did that, and why we did that.

Robert: So, why API access management? API access management is a layer of built-in security for our API endpoints, and it's all bundled in a seamless, easy-to-use interface. Now, early on, we knew that we needed a better system to secure our backend systems and provide a mechanism to control access to user data. Okta had released it in preview at the time, and since we were already customers of Okta, we evaluated, given the options, what the best tool for this was. Okta's API access management was what naturally fit as the best solution.

So with Okta's product, we can define granular controls based on the defined policies, the scopes, the rules, and the claims for each given application. Adopting OAuth 2.0 was also a shift for the company. Enabling this change provided huge security benefits, one of which was access tokens. Access tokens provide a way for applications to call the resource servers on behalf of the resource owner, which is the user. Previously, with the user credentials flow, if an account was compromised, the account was at risk at so many different levels in the backend system. Access tokens have numerous benefits, including limited expirations and revocation, and they limit the extent of the damage to the privileges granted to each access token. Now this is huge. Imagine there's a compromised access token for reading secret menu items. The malicious attacker, although he has that access token, cannot modify the menu items with it. So it's simply a more secure process. You can also choose to encode the tokens instead of storing them in the database. Okta's API access management inherently provides this through JWTs, which is another nice feature.
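The secret-menu example can be sketched in a few lines of Python. This is only an illustration, not the team's implementation: the scope names `menu.read` and `menu.write` are invented, the scope list is assumed to sit in an `scp` array claim, and the sketch deliberately skips signature verification, which a real resource server must perform before trusting any claim.

```python
import base64
import json


def b64url_decode(segment: str) -> bytes:
    """Decode a base64url segment, restoring the padding that JWTs strip."""
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def token_scopes(jwt: str) -> set[str]:
    """Read the scopes from a JWT payload WITHOUT verifying the signature.

    Assumption: scopes live in an "scp" array claim.
    """
    _header, payload, _signature = jwt.split(".")
    claims = json.loads(b64url_decode(payload))
    return set(claims.get("scp", []))


def is_allowed(jwt: str, required_scope: str) -> bool:
    """A call is allowed only if the token carries the scope it requires."""
    return required_scope in token_scopes(jwt)


def make_demo_token(claims: dict) -> str:
    """Build an unsigned demo token (dummy signature), for illustration only."""
    def enc(obj: dict) -> str:
        raw = json.dumps(obj).encode()
        return base64.urlsafe_b64encode(raw).rstrip(b"=").decode()
    return f"{enc({'alg': 'none'})}.{enc(claims)}.demo-signature"


# Hypothetical stolen token that was only ever granted read access.
stolen = make_demo_token({"sub": "user-123", "scp": ["menu.read"]})
can_read = is_allowed(stolen, "menu.read")
can_write = is_allowed(stolen, "menu.write")
```

Here `can_read` is true and `can_write` is false: whoever holds the token is confined to the privileges it was issued with.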

So, the core components of Okta's API access management that we're going to go over today are these: defining the authorization servers; under that, the policies that fall under each of the authorization servers; the rules, which have a one-to-many relationship under these policies; and then the underlying scopes. In Okta's API access management, you can also define the trusted origins based on the URIs that you provide. In addition, we will go over the integration with Swagger and how we leverage Azure API Management on top of Okta's API access management.

So authorization servers, what are they? They provide access tokens for the resource server to consume. These servers reflect the use cases on the orchestration layer, not the individual endpoint layer. We define the policies, scopes, and rules here at the authorization server. In our design, we built two authorization servers: one for applications, and one for enterprise components. The enterprise components are in charge of system-related functions, retrieving secrets, configuration information, and the like. This separation of concerns is important because it simplifies administration and management, and it abates the risk that client applications get access to resources that they should not. Ideally, the best practice is that each authorization server should only support one or a few client applications.

So scopes are the next layer that we define in Okta's API access management. Scopes are a focal point of how we restrict access to our services. We can construct four different types of scopes: global with read or write, and client with read or write. Read scopes grant permission only to read the data, and write scopes, vice versa, grant permission to write. Now client scopes represent calls made by a resource owner, the user, to the resource server. In our context, that would be a customer signing in to the public website to make a payment. That would be an example of using the client scope, and making a payment would be of the write scope. Global scopes represent calls made by the system and are embedded in the client credentials flow. An example is fetching something like the access code from the backend system for the client application, which is the customer-facing website.

Now one common pitfall is creating too many scopes, because it becomes hard to manage them. To avoid that, we regularly review proposals for new scopes internally on a case-by-case basis. As we continuously onboarded new apps, groups, and scopes, we also saw the need for a standard naming convention. An example of this is the scopes that we define, right? We define scopes by the application name, followed by the action verb, on the target. So what this means is something like bubble shop: bubble shop will have a read permission on [Pro Flow 00:12:53] or on account. So that's an example of how we define some of our naming conventions. You can implement your own best practices; this is how we implement our workflow. Now for application names in Okta, there's no way to segment by environment. So the way we get around that is we include the environment in the application name. So why are naming conventions important? They give developers and end users clarity about what they are working with. It can easily get complicated and overwhelming managing the multitude of applications that you support in Okta.
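A convention like this is easy to enforce mechanically. The sketch below assumes a dot-separated `app.verb.target` format, a lowercase alphanumeric app name, and a `-environment` suffix on application names; none of these details are given in the talk, so treat the separator, the allowed verbs, and the helper names as hypothetical.

```python
import re
from typing import NamedTuple

# Assumed format: <application>.<read|write>.<target>, all lowercase.
SCOPE_PATTERN = re.compile(
    r"^(?P<app>[a-z][a-z0-9]*)\.(?P<verb>read|write)\.(?P<target>[a-z][a-z0-9]*)$"
)


class Scope(NamedTuple):
    app: str
    verb: str
    target: str


def parse_scope(name: str) -> Scope:
    """Split a scope name into its parts, rejecting names that break the convention."""
    match = SCOPE_PATTERN.match(name)
    if match is None:
        raise ValueError(f"scope {name!r} does not follow app.verb.target")
    return Scope(**match.groupdict())


def application_label(app: str, environment: str) -> str:
    """Embed the environment in the application name (assumed '-env' suffix)."""
    return f"{app}-{environment}"


scope = parse_scope("bubbleshop.read.account")
label = application_label("bubbleshop", "preview")
```

Running a check like `parse_scope` in a review step or build script would flag a nonconforming scope name before it is ever created.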

So access policies, that's the next thing. Access policies dictate how frequently API endpoints can be accessed. We can also define the access token expirations here. Early on, we implemented a rigorous testing program that validated the scopes assigned to each client ID. This way we can ensure that given apps don't have more access than they should. Now this is very important, and I highly recommend that you do this as well. We managed to automate it with a script later on.

So our company has a microservices architecture. We leverage Azure API Management as an additional layer of security on top of Okta's API access management. It's akin to another [Medecule 00:14:30] lock, per se. Now the crux of this is that Azure's global policies can dictate spot checks for the presence of an access token, its claims, and its signature. So it's a barrier to entry. A second check can also be done at the service layer if you choose to do so. In addition, Azure API Management provides subscription keys, which can act as an identifier of the application making the call, and API throttling, which protects our services from getting abused.

Robert: Azure API Management also gives us a platform to track and monetize API products, and meaningful analytics with App Insights. You can also prevent direct calls with a rotated certificate, and not without us knowing. So the combination of these two products provides a secure backbone for our backend technology. To illustrate this point: first, to call an endpoint, you need to obtain the access token from the authorization server that we define in Okta. For a global-scope workflow, you provide the client credentials as the grant type. Or, for a client-scope workflow, you can follow the authorization code workflow and provide the client ID, redirect URI, state, and the scopes. Now, in addition to this access token from Okta, you also need to provide the subscription key from Azure to accompany it. What this means is that Azure identifies who can make the call, and Okta's access token identifies what call the caller can make. So this is important for having the right apps making the right call at the right time.
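The two-credential call can be sketched as plain request descriptions, with no network traffic. The token URL follows Okta's documented pattern for custom authorization servers, and `Ocp-Apim-Subscription-Key` is Azure API Management's default subscription header; the domain, server ID, client credentials, scope name, and API URL below are all placeholders, and the small `HttpRequest` class is just a container invented for this sketch.

```python
import base64
from dataclasses import dataclass
from urllib.parse import urlencode


@dataclass
class HttpRequest:
    """Plain description of a request; nothing is sent in this sketch."""
    method: str
    url: str
    headers: dict
    body: bytes = b""


def token_request(okta_domain: str, auth_server_id: str,
                  client_id: str, client_secret: str,
                  scopes: list[str]) -> HttpRequest:
    """Client credentials grant: the application authenticates as itself."""
    basic = base64.b64encode(f"{client_id}:{client_secret}".encode()).decode()
    form = urlencode({"grant_type": "client_credentials",
                      "scope": " ".join(scopes)})
    return HttpRequest(
        method="POST",
        url=f"https://{okta_domain}/oauth2/{auth_server_id}/v1/token",
        headers={"Authorization": f"Basic {basic}",
                 "Content-Type": "application/x-www-form-urlencoded"},
        body=form.encode(),
    )


def api_request(url: str, access_token: str, subscription_key: str) -> HttpRequest:
    """The key says WHO is calling; the token says WHAT the caller may do."""
    return HttpRequest(
        method="GET",
        url=url,
        headers={"Authorization": f"Bearer {access_token}",
                 "Ocp-Apim-Subscription-Key": subscription_key},
    )


# All values below are placeholders.
get_token = token_request("example.okta.com", "default",
                          "client-id", "client-secret",
                          ["bubbleshop.read.account"])
call_api = api_request("https://api.example.com/accounts/42",
                       "ACCESS_TOKEN", "SUBSCRIPTION_KEY")
```

A request missing either header would be turned away at the gateway before it reached a backend service, which is the "barrier to entry" described above.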

So, in order for developers to utilize these tools successfully, we need some good documentation. We need to provide transparency on what kind of scopes are required for making a particular API endpoint call. So Swagger offers us a solution for this. Some benefits are self-documentation: the XML comments that you write in your code can be displayed in Swagger. There's also schema validation, boilerplate code generation, and access token validation of scopes. All of this can be provided with Swagger, and it's free. It also provides a simple-to-use interface for calling API endpoints, and that's really helpful for IT leads and for business end users who want to test our service, for UAT testing perhaps, and it's very easy to get started and set up too. All you have to do, if you're a Microsoft shop, is install Swashbuckle from the NuGet package manager, then configure the Swagger config file and the Swagger annotations for each endpoint. And then after each code deployment, Swagger will also automatically update.

Lastly, all you need to do is take the Swagger URI and import it into Azure API Management, and that will import the API to Azure seamlessly.

Jen: So, functions of all time, because it's self-documenting, right? So the guys are coding it, they're putting the XML comments, the XML tags, in there, and as they're being updated they generate automatically via the UI. So I have the full inventory of exactly what's being coded and how it's being coded. When we work in an Agile environment, it's always a challenge as to when we actually start documenting: when do we do that process, when do we have time to do it? And this saves us a lot of time in communication with all our third parties and all the other partners who actually use these going forward. It displays models and examples and all of the JSON that's required.

Robert: So, that's a good point. Thank you Jen.

Onboarding new applications. So we onboarded Okta to the company not just for our application, not just for the public website, but to serve a broader purpose as an enterprise solution for the company as a whole. And we wanted to model this for reuse, for scalability, and for efficiency. So we've been continuously integrating an array of external-facing applications that are built in house, by vendors, or as cloud solutions. And as we do so, we're constantly optimizing and improving our integration process. Some of the key questions that we ask whoever is looking to leverage Okta within the company are: What is the tech stack of your application? Is it built using ASP.NET MVC, AngularJS, React? Is it a native mobile app, a web app, or a SaaS product? What stage of development are you in? Do you require SAML assertions or claims from Okta, and do you plan to leverage the API endpoints of our architecture with your new application?

And most importantly, the question we ask is: how do you want the customer experience to be driven from your application with this new integration? Asking these key questions early will ensure a seamless integration and that a good customer experience will follow. So all of these products were foreign to the company and left us with some challenges. Now these challenges can be overcome, but not without some lessons learned. So, I'll turn it over to Paul to highlight some of the hurdles that we experienced as a company.

Paul: Thank you Robert.

Robert: Just take this.

Paul: So, as Robert was saying, what we've talked about is that we were on a journey early on. And anytime you're on a journey you have milestones that you want to remember, the highlights, right? And you have a bunch of places, rough road, that you kind of crossed. And what we hope to get across today is not just what we did, but some of the lessons learned that hopefully you folks can take with you as you embark on this journey as well, so you can avoid some of the rough spots that we hit and build on the places where we were really able to make some significant progress.

Just to give you a sense of scale before we start, you know, right now we're seeing about 500,000 API requests a day, all protected by Okta's API access management, and it's about 15 million a month, right? So that's substantial in terms of the number of hits and in terms of what we're actually trying to protect, with each of those calls being inspected and protected by API access management and Azure API Management. So one of the things we had to tackle early on: we have a preview environment in Okta, and we have a production environment. Everything goes into our preview environment first, and we do all the testing there. We make sure that everything works the way it's intended. But then we've got to get to production.

So one of the challenges we faced is that there isn't really a good set of production migration tools to take something from that preview environment and move it to production. So we were doing a lot of that manually. Any time you do those things manually, you raise the chance that an error occurs, right? It works in preview, you've got all your rules, you've tested them, now you go to production, you make a mistake, and you have consequences to deal with, right? Now we weren't worried about the ones where we made a mistake and forgot to give somebody a scope or a claim that they should have access to; those people are going to tell us. We were really concerned about the ones where we give too much and then they somehow are able to use that to get access to something they shouldn't have. And we're talking about customer data here. Nothing is more important to a company than the protection of its customer data.

So we made sure, and we double-checked, that each of those migrations was moved successfully, with two people always looking at them. The most important part, and Robert alluded to it earlier, is that we initiated that testing program very early, specifically around these scopes and these claims. We first started with a massive spreadsheet, with all the different client IDs that we needed to test, all the different scopes they should have, and all the different scopes they shouldn't have. And we first started doing that manually, and then as we progressed we got better at that. We moved to an automated script, so that every time we did a build, every time we made a change to Okta, we ran through those scripts in a matter of minutes to make sure people only got what they needed to get and no more. So that's one I would definitely encourage if you're going through this, because those things get complex very quickly. You know, it starts out with a couple of rules, a couple of policies, then you get another business requirement, you make another adjustment, and before you know it you've got 50 of these things. And you have to make sure that they all work and that you exit at the right time in those if-then statements. So that's one thing.
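The spreadsheet-turned-script idea can be sketched as a small audit function. The client IDs and scope names are invented, and the `granted` dictionary stands in for whatever a real script would collect by requesting a token for each client and reading its scopes; the talk does not describe how their script gathered that data.

```python
def audit_scopes(expected: dict, granted: dict) -> list[str]:
    """Compare what each client should and should not have with what it was granted."""
    problems = []
    for client_id, rule in sorted(expected.items()):
        got = granted.get(client_id, set())
        # Missing access is annoying, but the affected team will report it.
        for scope in sorted(rule["allowed"] - got):
            problems.append(f"{client_id}: missing {scope}")
        # Excess access is the dangerous case: nobody complains about it.
        for scope in sorted(rule["denied"] & got):
            problems.append(f"{client_id}: OVER-GRANTED {scope}")
    return problems


# Hypothetical test matrix, one row per client ID.
EXPECTED = {
    "website-prod": {
        "allowed": {"account.read", "payment.write"},
        "denied": {"config.read"},
    },
    "partner-app-prod": {
        "allowed": {"account.read"},
        "denied": {"payment.write", "config.read"},
    },
}

# Stand-in for scopes observed in freshly issued tokens.
GRANTED = {
    "website-prod": {"account.read", "payment.write"},
    "partner-app-prod": {"account.read", "payment.write"},
}

report = audit_scopes(EXPECTED, GRANTED)
```

With this data `report` holds the single entry `partner-app-prod: OVER-GRANTED payment.write`, which is exactly the kind of mistake a manual migration can introduce without anyone noticing.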

That's something that we got right the first time, thankfully; we didn't have any painful lessons learned there. But where we did have a bit more of a challenge was around token management and Universal Directory itself. When we did it with our own application, the public website, we very well defined what attributes a client needed in the user directory and what attributes we were going to expose as part of that token, so applications can make authorization and access management decisions.

As we expanded it to the company, everybody wanted to store something else in Universal Directory about the customer. Which sounds good at first, because everything is in there. The challenge became that they all wanted that as part of the token, and the token size would just explode, because everybody needed everything. There also came to be various different representations of the same data across the different applications and developers. So we had to kind of pull back a little bit and say, you know what, Universal Directory is great, we can store information in there, but we're going to make decisions about what and when we store in Universal Directory.

So we had to have a kind of governance program in there, where we'd look at it and say: this is what we're going to put in Universal Directory, this is what we're going to call it, and this is how we're going to make sure that it's well maintained. Then there were other things that we deemed application specific, that applications needed to know about a user but that we didn't want to put in Universal Directory. We told applications they had to store those themselves. We made sure that part of the token had the Okta user ID, and they had to store that data along with the user ID in their application, so they could then retrieve and look up that additional information if they needed to make decisions on it. So that's how we kind of managed the token size there.
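The pattern of keeping the token small and letting each application hold its own data can be sketched as follows. The `uid` claim name, the in-memory store, and the `paperless_billing` attribute are assumptions for illustration; a real application would use its own database keyed by the Okta user ID.

```python
from dataclasses import dataclass, field


@dataclass
class AppProfileStore:
    """Application-owned attributes, keyed by the user ID carried in the token."""
    _rows: dict = field(default_factory=dict)

    def save(self, okta_user_id: str, **attributes) -> None:
        self._rows.setdefault(okta_user_id, {}).update(attributes)

    def load(self, okta_user_id: str) -> dict:
        # Return a copy so callers cannot mutate the stored row by accident.
        return dict(self._rows.get(okta_user_id, {}))


def app_attributes_for(claims: dict, store: AppProfileStore) -> dict:
    """Use the (assumed) 'uid' claim to look up data the token does not carry."""
    return store.load(claims["uid"])


store = AppProfileStore()
store.save("00u123", paperless_billing=True)

# Already-validated token claims; the token itself stays small.
claims = {"uid": "00u123", "scp": ["account.read"]}
attributes = app_attributes_for(claims, store)
```

The token grows only by one identifier no matter how many applications attach their own attributes to the same customer.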

Scope management is another one that started off well, quickly got out of hand, and we had to kind of pull back. As Robert alluded to, we had a scope naming convention, and that was great. But developers would go off on their own, they would come up with all the scopes, and you would have 50 scopes; every endpoint would have its own scope, or a variation of a scope. So it quickly got out of hand, because you're giving someone 60 or 70 scopes just to be able to do their work. You don't want that. The other side of it is that if you go too high level, then a person can actually do something they shouldn't be able to do. So at the application level, we had a formal review process to look at the different types of scopes. Even with our own products, every time we added a new scope or a new function, we had an architectural review.

So there, our security lead, our IT architect, myself as the program lead, and usually one of the business owners would get in a room. We would review the scopes, we would review the functions, and we would do two things. We'd make sure all the scopes are at the right level, where I can do what I need to do. And we'd ask: this seemingly new function that I'm giving the scope to, can I use that scope to do something else that wasn't intended for that customer, right? And there were some cases where we said, oh, now if I give that scope, which seems to make sense, this customer can also do something else we don't want them to do. So then we had to turn around and add another scope, another level of a scope, so some of those scopes are multi-level. And then we'd make the appropriate code changes, and in the cases, only a few cases thankfully, where there was some customer impact from the change of the scopes, we had to manage that with the customer. So these are the different types of things you're going to run into, and that we ran into and had to deal with. Governance comes into play with a lot of this because Okta is a shared platform, which is great. We took a lot of work away from our developers in terms of having to worry about security and how to lock these down. We did a lot with Swagger and automated a lot of the documentation, so that we didn't have to worry about it.

We put a lot in place in terms of governance, in terms of naming conventions and things like that, so that our end users, the people calling it, didn't have to start from scratch for every one of their applications. And as Jen alluded to, we're starting to move other apps that are customer facing into the same identity management solution. So we're really trying to improve that customer experience, where a customer who had to do business with us sometimes had six different IDs and six different apps to log into. They were all different user experiences, with all different security profiles and registrations. It was hard for us to track, hard for us to understand what was going on, what they needed, and how they were working with us, and obviously very, very painful from a user experience standpoint. You can imagine how many help desk calls we would get: "I forgot this password to this app." A lot of that we've seen go away, and we should be able to continue to move that forward as we take more and more applications in there.

So, as we went through, we worked very closely with Okta. You know, as Jen said, we were actually using this in preview, and that's small consolation when you're trying to build a product that's going to be used by potentially three million customers. And then you want to put this thing in production supported by a preview function; what's going to happen? Okta was very good at working with us every step of the way and making us feel that we were going to be able to support this adequately in production. But we still hit, you know, some bumps in the road, and there are some feature requests that we've given Okta over time. One, and I'm glad Frederic actually mentioned it in the keynote, is being able to test scopes, test policies, and test those tokens. When we were doing the testing we talked about first, there was no way to easily see what the output was and what the end program was actually getting. And when we had third parties using it and they weren't getting exactly what they expected to get, it became very hard to debug what was actually going on. What was it they were getting? Were they calling it in a way they shouldn't have? Were they getting some of the scopes, but not all of the scopes they needed?

So being able to test those things directly within Okta, make sure you get the right outcome, and see clearly what it was would be very helpful in terms of reducing that troubleshooting time. We have a lot of applications, and that has grown even in a short time. Based on the application's needs, we make use of OAuth, SAML, OIDC, OpenID, and WS-Fed for some of the .NET applications. And we also have test and dev, obviously, in the preview environment. We wind up having to resort to marking those in the names of the applications, putting all that metadata in the application name, so we can quickly sort them and see them and see who has access to what. So one of the things we think would be helpful is basically to add custom tags to those applications that you can then search on and quickly see, without having to rely on the names.

Paul: Anything else that I forgot, that you want to share?

Robert: That was pretty good.

Paul: Thank you.

With that, we've got about ten minutes; open it up to some questions. Before I do, again, I hope that you get an understanding for what it is that we try to do with the journey, and then hopefully, most importantly, take away some lessons learned that if you undertake this journey you'll be able to apply without having to hit some of the same road blocks or same challenges that we did.

Speaker 2: One of the things you alluded to is the difference between all the different environments and one of the things we're challenged with is trying to keep our Dev-test and production environments in sync. It sounds like you used a lot of scripting to do that. Maybe you can talk a little bit more about that just mainly because that's a big pain in our butt.

Paul: Sure. I'll let these folks jump in, too. It was always one of my concerns as the program lead for this: how do you move those? You think you had it locked down, but people are changing things for the next release, especially in Agile. So you're trying to move a point in time. In some cases, we worked with the APIs and tried to automate some of the APIs in Okta to be able to create some of these things, so then we could turn around and use config files to do that. That worked to a certain degree, but it was very hard to keep control of that. I don't think we have a great answer for it other than meticulous planning, really staying on top of that, and making sure you're doing sufficient testing so that when you do move it you're not running into some of those problems. I think it's an area that I would like to see improve over time, that configuration management, source control aspect that you see in other tools, in order to hopefully reduce some of that going forward.

Speaker 3: Great presentation.

Paul: Thank you.

Speaker 3: Is this in production, first of all?

Paul: Yes.

Speaker 3: Okay, and I didn't see anything about the user experience. Do you have anything to share there? Any learnings from the user experience? Something that we should be careful?

Paul: Yeah, it's great. I want to be clear. This is production. The MyAccount website has been in production for over a year now.

Jen: Yeah, so. Transactional went in July 2017, so that's when the service layer went live. The non-content went live at the end of 2016, in December. You can go there and look at it. If you're a New York customer, sign up for your new account experience. You'll be using Okta to go through that flow.

Robert: So, when you make a payment, it'll be through our backend service architecture.

Paul: That was the public website and as Jen mentioned earlier our new mobile app released in March of this year, so not that old. We are doing 15 million requests, API requests a month from third parties and customers either through the website or directly.

Speaker 3: So again, user experience is how?

Paul: The user experience, a couple of things to tackle there. We were trying to really rebrand the site and make it user friendly. We looked at the transactions themselves, the business transactions themselves, and how we could really make sure that they look as modern and frictionless as possible, only asking for the key information that we needed. Some of our earlier versions requested tons of information, and it was kind of like, "We'll get everything and figure out what we need later." Not great for the customer. We made sure we fixed some of those.

One key area that we really had to tackle was registration. We had one million customers in our old platform, where we still used username and password. Now we're moving to Okta. We've got to basically get those people to re-register. That can become a challenge, right? We've done that slowly. Even though we started rolling out in June of last year, at first we didn't force anybody to go to the new site. We let people go to the old site. If they chose to register, they could. Then, after we made sure everything was working and worked out some of the kinks from early feedback that we got on Twitter and other places, we started to push communications. Now we've got over 600,000 customers registered with the new experience, so about half of our old registered customers, and as we get closer we're going to be pushing harder and eventually be able to say, "After a certain date, you will no longer be able to do that." That's one thing to think about very carefully if you're doing these migrations: think about the user experience, think about the communication, and think about the right time in their life cycle to bring them into the new experience. While we are migrating the old, being New York City, we have a lot of customers that are coming in and out. They're starting utility service with us and leaving. Early on, basically if you were starting a service after that date, you were doing the new experience. We looked at that to hopefully help with that. That's one thing to think about.

The other thing that was a bit of a challenge for us was that we introduced MFA for the first time as part of this. We didn't have any multifactor authentication on the old site, so we had to do that. We had to choose which factors we wanted to offer. We had to offer SMS or security questions because, being a public utility, you have to serve everybody, and some people just don't have phones still, so we have to be able to do that. That was a conversation with security. We got security involved very early in those conversations. And then there was a lot of user education because it was new to them. We had to manage a larger than normal call volume for some of those things. We had to provide our CSRs with the tools to quickly reset factors and/or walk customers through the process. Those are some areas from the customer experience that we had to tackle.

Jen: Registration was a key thing for us. We actually took a pause in our road map because, through our analytics, we could quickly see how many people were registering, that they were having trouble, and which step they were having trouble with. Prior to the re-registration flow that you see today in production, there was a break in the flow between creating a password and getting to MFA. People didn't necessarily want to do that all at the same time. We said, "How are we going to resolve this?" So we quickly got our minds together. We updated the services. We updated the UI so that if for any reason you don't make it through password, you never set up your MFA properly, when you try to log in it automatically takes you to the MFA page. A lot of things are lessons learned. We take pride in our usability and customer experience. We had a very strict road map, but it was important to us that we got this one right. In the end, it was worth it, taking the time to really take a look at it and fix it for our customers to get us over the hump.
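As an illustration of the log-in behavior Jen describes, here is a minimal sketch in Python. It is not Con Edison's implementation: the `UserState` type, the `next_page_after_login` function, and the page paths are hypothetical names chosen for the example, and the only rule it encodes is the one stated in the talk, that a user who has not finished MFA setup is sent to the MFA page at log-in.

```python
from dataclasses import dataclass


@dataclass
class UserState:
    """Hypothetical snapshot of where a user is in registration."""
    mfa_enrolled: bool


def next_page_after_login(user: UserState) -> str:
    """Pick the page to show after a successful password log-in.

    Assumption: the paths below are placeholders, not real site URLs.
    """
    if not user.mfa_enrolled:
        # Registration was interrupted before MFA setup, so resume it here.
        return "/register/mfa"
    return "/my-account"
```

The point of the design is that an interrupted registration is resumed at the next log-in rather than requiring the customer to start over.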

Speaker 4: I had a question. You said you have over a million users, or several million users, that are out there. It's a very customer focused and oriented website. What are you folks doing around security? There are Okta system logs that can be leveraged to extract data 30, 60, 90 days out and put it into your own system for any kind of anomaly detection, for phishing attempts and other things that are out there. Have you folks focused on that at all? Just curious.

Paul: We have focused on it, though not to the degree we'd like; obviously we always want to get better at those types of things. Our security team is routinely looking through those logs. They're looking for anomalies. We have some pre-written queries that we run periodically. As Jen and Robert have, we have application analytics behind the scenes pulling all that data in and looking for things. We know if an API is getting hit out of proportion to normal. We know if an API is responding less. We know if we're having tokens that are getting rejected more frequently than normal. We've got some of that application insight, some lightweight AI kind of looking at it and going, "This doesn't match the pattern." We're trying to get more of that, because you set up all these things, but with the rate of information and amount of data that's coming in, you almost need another computer to look at it and go, "This doesn't look right. Now, go do the deep dives."

We are in the process of looking at Splunk. We own Splunk. We use Splunk for a bunch of our internal systems. We're working on being able to take that Okta data and put it into Splunk so we get a better picture into that and to draw that on a map, as well. That's something that we're currently working on.
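The kind of check Paul describes ("this doesn't match the pattern") can be sketched with a simple statistical baseline. The example below is an assumption for illustration only, not the analytics tooling mentioned in the talk: it flags a metric such as hourly token rejections when it sits more than a chosen number of standard deviations from recent history. The function name `is_anomalous` and the default threshold of 3.0 are hypothetical.

```python
from statistics import mean, stdev
from typing import Sequence


def is_anomalous(history: Sequence[float], current: float,
                 threshold: float = 3.0) -> bool:
    """Return True if `current` deviates from `history` by more than
    `threshold` sample standard deviations (a plain z-score test)."""
    if len(history) < 2:
        # Not enough data to estimate spread, so never flag.
        return False
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # Perfectly flat history: any change at all is out of pattern.
        return current != mu
    return abs(current - mu) / sigma > threshold


# Example: token rejections per hour over the last five hours.
recent_rejections = [100, 102, 98, 101, 99]
spike_flagged = is_anomalous(recent_rejections, 150)
normal_flagged = is_anomalous(recent_rejections, 101)
```

A check like this only narrows down where to look; the deep dive into the logs still falls to a person or a richer tool.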

Robert: I actually reviewed the event logs with a tester quite frequently to troubleshoot production issues for customers who have issues logging in or gaining access to an application. Because of our large customer base, sometimes the assignment of a user to an application may take a while to process. Users who create an account immediately seek access to the application, and then they get a "You're not authorized to the application" message. But because our service layer also adds the user to the group, that user is in the group and should have authorization to the application. It just takes that processing time to assign that user to the application. We experienced some of those requests early on. We identified it, opened a support case, and we used the event logs quite frequently.

Speaker 5: Hello. You mentioned a previous system and now your customers are in Okta. How do you handle migration, migrating the users from the previous system to Okta? Is it a self-help process or is it an import process?

Paul: We did not migrate anybody. Basically, users had to re-register. We basically said, "We don't really care as much what you did in the old system. Once you came in, you've got to start from scratch from a registration perspective, which is a new username and password." We supported this dual experience for a while, which was tricky in itself because we actually had to put something in front of our log-in page where you entered your username, and then we knew whether you were allowed to go to the old experience or not and actually diverted the customer behind the scenes to the right place. We had to do some of those.

There was customer information in the old accounts, such as which residential or commercial accounts they actually had power at, and we had to figure out how to handle that. We had to leverage some of the backend systems and some auto matching. What we called auto matching was this: if you're registering with an e-mail and you prove that it's your e-mail, and you've given us your phone number for MFA, and the phone number and e-mail match our customer account records for that account, we would automatically say you had access to that account, because you've proven that it's your information and that information is on the customer account record. There were other cases where we didn't have that information or that information didn't match, so we had to make sure that we had a process where the users could claim their account by entering an account number and some other information about themselves that we felt comfortable was making that match. We had to do that outside, as part of the registration process, but then update the Okta record with it. We're very excited about some of the upcoming features around hooks and extensibility so we can plug that into that process instead of trying to do it separately.

From a customer perspective, if it was an existing customer, they were essentially registering as a new customer for the first time. We had to very carefully manage that through communication and by looking for the right levers in the user's life cycle to pick the opportune time to get them to do that with the least resistance.
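The auto matching rule Paul outlines can be sketched roughly as follows. This is a hedged illustration, not the actual backend logic: the `AccountRecord` type, the `auto_match` function, and the normalization rules (lowercased e-mail, last ten digits of the phone number, which assumes US numbers) are all assumptions made for the example. An empty result stands for the case where the customer must fall back to claiming the account manually with an account number.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class AccountRecord:
    """Hypothetical shape of a customer account record."""
    account_number: str
    email: str
    phone: str


def normalize_email(email: str) -> str:
    return email.strip().lower()


def normalize_phone(phone: str) -> str:
    # Keep digits only, then the last ten (assumes US-style numbers).
    digits = "".join(ch for ch in phone if ch.isdigit())
    return digits[-10:]


def auto_match(verified_email: str, verified_phone: str,
               records: List[AccountRecord]) -> List[str]:
    """Return account numbers whose e-mail AND phone both match the
    values the user proved ownership of during registration."""
    email = normalize_email(verified_email)
    phone = normalize_phone(verified_phone)
    return [
        r.account_number
        for r in records
        if normalize_email(r.email) == email
        and normalize_phone(r.phone) == phone
    ]


records = [
    AccountRecord("A-1", "Pat@Example.com", "212-555-0100"),
    AccountRecord("A-2", "pat@example.com", "212-555-0199"),
]
matched = auto_match("pat@example.com", "+1 (212) 555-0100", records)
```

Requiring both values to match keeps the automatic path conservative; anything less certain goes through the manual claim process described above.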

Jen: Something else you might be interested in is that, because of this dual log-in, we have what we call legacy lock out. It gives us the mechanism to actually control those user groups who can't go into the new experience. It's a progressive system, which is a way of controlling the new registration process. It's also really important to us because we have a limited call center that has specific SLAs, and we didn't want to overwhelm them all at once with millions of users. As the business was comfortable, and as we were comfortable with the process, we were able to lock out as few or as many as we wanted at any time.
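One way to picture the dual log-in and legacy lock out together is a small routing function placed in front of the log-in page. The sketch below is an assumption about how such a gate could work, not a description of the real system: it reads "legacy lock out" as locking chosen groups of old-platform users out of the old experience, and the names `route_login`, `registered_new`, `legacy_group`, and `locked_out` are hypothetical.

```python
from typing import Dict, Set


def route_login(username: str,
                registered_new: Set[str],
                legacy_group: Dict[str, str],
                locked_out: Set[str]) -> str:
    """Decide where a username entered on the front page should go.

    Returns "new" (already re-registered), "register" (legacy user whose
    group has been locked out of the old site), or "legacy".
    """
    if username in registered_new:
        return "new"
    group = legacy_group.get(username)
    if group is not None and group in locked_out:
        return "register"
    return "legacy"


registered = {"alice"}
groups = {"bob": "wave1", "carol": "wave2"}
locked = {"wave1"}

before = [route_login(u, registered, groups, locked)
          for u in ("alice", "bob", "carol")]

# Progressive rollout: lock out one more group when the call center can
# absorb the extra registrations.
locked.add("wave2")
after = route_login("carol", registered, groups, locked)
```

Growing the locked-out set one group at a time is what makes the rollout progressive and keeps call volume within what the call center can handle.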

Robert: Another point I'd like to add is that continuous communication is important. Make it clear for customers what the changes are. Soft releases are good if you're making production deployments and the like.

Paul: So with that, we're out of time. Thank you very much. I hope you learned something today.

Done right, Customer Identity can be a tool and channel to better engage and communicate with your customers or partner organizations. In this session, experts from Consolidated Edison discuss how to use technology and customer identity as a tool to create digital channels and build better relationships with customers.

Speakers:

Paul Verrone, Director of Information Technology, Consolidated Edison

Jennifer Moy, IT Lead, Consolidated Edison

Robert Chang, System Analyst, Consolidated Edison