Every business leader is asking: How can we leverage AI for a competitive edge? Security leaders are asking a different question: How do we adopt AI without creating thousands of new, autonomous, and invisible security risks?

From our vantage point, helping secure more than 20,000 global organizations, we see this challenge unfolding in real time. The rise of generative and agentic AI is driving an explosion of non-human identities (NHIs). These AI agents can operate at machine speed, access sensitive data, call critical APIs, and make decisions on behalf of users.

This creates a fundamental identity crisis. Traditional IAM systems, designed for predictable human-driven access, are not equipped for this reality. Without a modern approach, enterprises face a blind spot: Which agents have access to what? Are their permissions appropriate? How do you stop a compromised agent before it causes damage?

Securing the AI future begins with identity. This article explores how emerging AI standards and frameworks, such as MCP, A2A, and RAG, enable new functionality while introducing significant security challenges, and why solving them requires an identity-first approach.

Who is adopting AI, and why?

Across industries, organizations are pursuing AI adoption in three main ways:

AI for the workforce: Many enterprises are deploying AI technologies to empower employees. Success depends on selecting a trusted vendor and managing the growing web of access that AI platforms gain inside the enterprise.

AI for B2B partnerships: Some organizations are embedding AI into partner or vendor-facing services. Their priority is quick time-to-value, combined with secure exposure of AI capabilities to third parties.

AI-native product builders: These companies are developing the AI-powered products that others are evaluating. They focus on innovation and rely on strong security frameworks to help ensure trust in production environments.

Across all three groups, the need is consistent: security that scales with AI adoption.

Evolving standards in the AI ecosystem

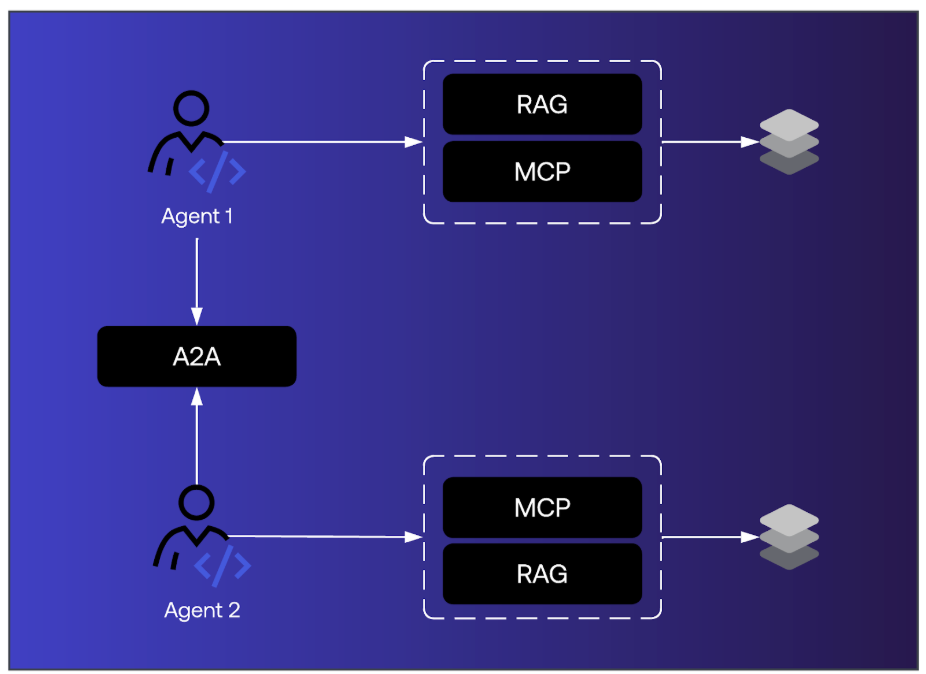

The AI ecosystem is moving quickly toward standardization. Many protocols and patterns are being proposed, but three stand out for their near-term enterprise impact: Model Context Protocol (MCP), the Agent2Agent (A2A) protocol, and retrieval-augmented generation (RAG).

MCP: A context-oriented protocol

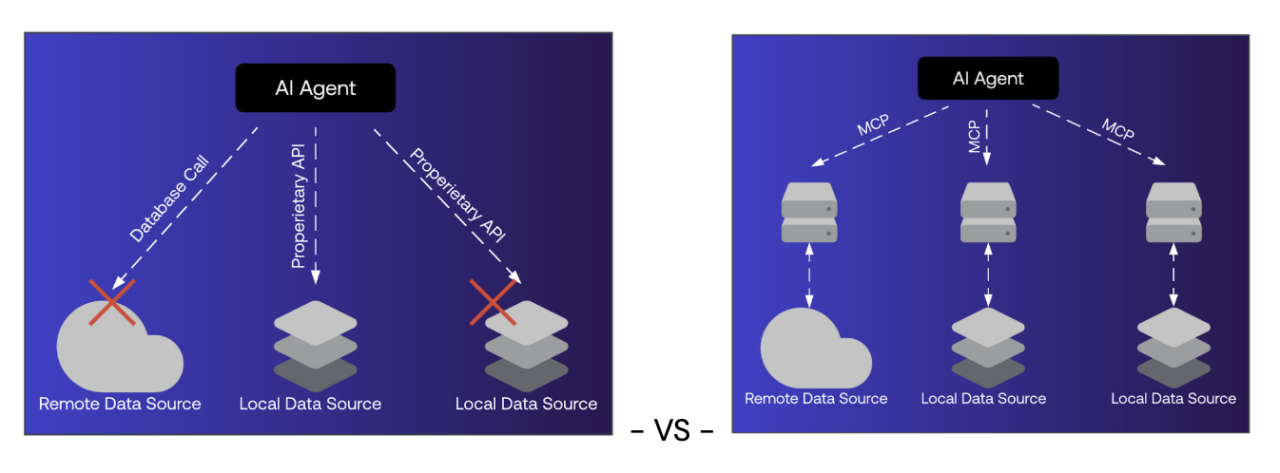

MCP, developed by Anthropic, acts as a universal translator between AI models and external systems. Instead of requiring every agent to adapt to the quirks of each API, MCP provides a consistent protocol for accessing tools and resources.

For example, a CEO could ask an AI assistant to analyze sales data across a CRM, inventory system, and accounting platform. With MCP, the assistant does not need custom integrations; it can interact with each system through a common standard. MCP turns the AI agent into a universal toolbelt.

The security implications are significant. MCP makes access easier and more consistent, but it also raises the stakes of authentication and authorization. The specification's authorization model builds on OAuth 2.1, but OAuth alone does not fully address the dynamic, task-driven nature of agent access, the problem of onboarding agents, or the risk of impersonation.
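To make those stakes concrete, here is a minimal sketch of what a tool invocation might look like on the wire. MCP messages are JSON-RPC 2.0, and tools are invoked with the `tools/call` method. The tool name `crm_sales_report`, its arguments, and the token value are hypothetical, and the sketch only builds the request; it does not send it.

```python
import json
from itertools import count

_request_ids = count(1)  # JSON-RPC requests carry a unique id


def build_tool_call(tool_name: str, arguments: dict) -> dict:
    """Build an MCP tool invocation as a JSON-RPC 2.0 request."""
    return {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


def build_http_headers(access_token: str) -> dict:
    """Headers an agent might send over an HTTP-based MCP transport.

    The bearer token is the only thing telling the server who is calling.
    """
    return {
        "Content-Type": "application/json",
        "Accept": "application/json, text/event-stream",
        "Authorization": f"Bearer {access_token}",
    }


# Hypothetical tool name, arguments, and token, for illustration only.
request = build_tool_call("crm_sales_report", {"quarter": "Q4"})
headers = build_http_headers("example-access-token")
body = json.dumps(request)
```

Every call of this shape carries whatever authority the token grants, so the token's scope and audience matter more as the number of reachable tools grows.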

A2A: An inter-agent protocol

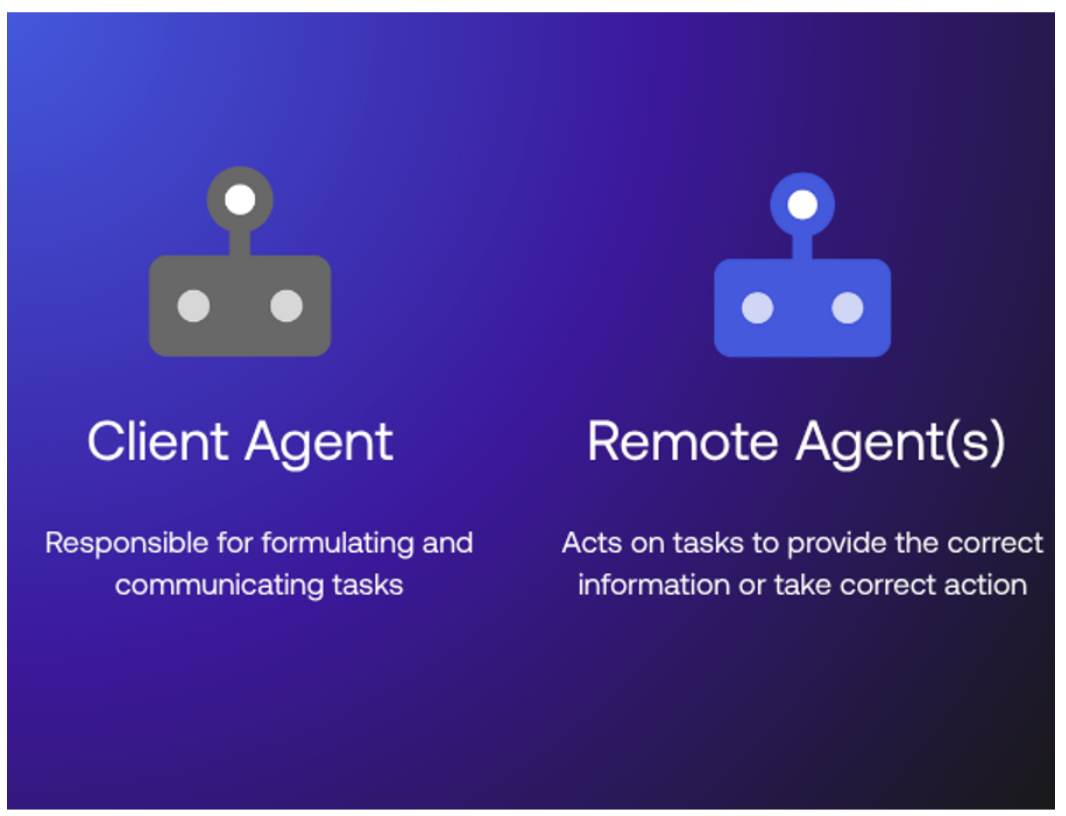

If MCP equips individual agents, A2A enables them to work together. A2A is an emerging framework from Google Cloud that proposes standardized “Agent Cards” to describe agent capabilities. These cards allow agents to divide work, coordinate tasks, and collaborate on complex goals. The potential is clear. Imagine AI agents representing suppliers, shippers, and retailers coordinating in real time to resolve a supply chain disruption.
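As an illustration, an Agent Card can be thought of as a small JSON document that advertises what an agent does. The sketch below uses only a handful of fields (name, description, url, version, skills) with hypothetical values, plus a hypothetical helper, `find_agents_with_skill`, that a coordinating agent might use to choose a collaborator. It is not a complete or authoritative rendering of the A2A schema.

```python
from __future__ import annotations

# Hypothetical cards for two supply chain agents.
shipper_card = {
    "name": "Shipper Routing Agent",
    "description": "Plans and reroutes shipments.",
    "url": "https://agents.shipper.example/a2a",
    "version": "1.0.0",
    "skills": [
        {"id": "reroute-shipment", "name": "Reroute shipment",
         "description": "Find an alternative route for a delayed shipment."},
    ],
}

retailer_card = {
    "name": "Retailer Inventory Agent",
    "description": "Reports stock levels and reorder needs.",
    "url": "https://agents.retailer.example/a2a",
    "version": "1.0.0",
    "skills": [
        {"id": "check-stock", "name": "Check stock",
         "description": "Return current stock for a product."},
    ],
}


def find_agents_with_skill(cards: list[dict], skill_id: str) -> list[str]:
    """Return the names of agents whose card advertises the given skill."""
    return [
        card["name"]
        for card in cards
        if any(skill["id"] == skill_id for skill in card.get("skills", []))
    ]


candidates = find_agents_with_skill([shipper_card, retailer_card], "reroute-shipment")
```

A card is self-described: nothing in this lookup proves that the agent behind `url` is who the card claims to be.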

Security challenges are also clear. Without strong identity controls, A2A can become a channel for impersonation, interception, or malicious collaboration. Threat modeling with the Cloud Security Alliance’s MAESTRO framework has already identified scenarios such as agents posing as trusted entities or servers masquerading as legitimate participants.

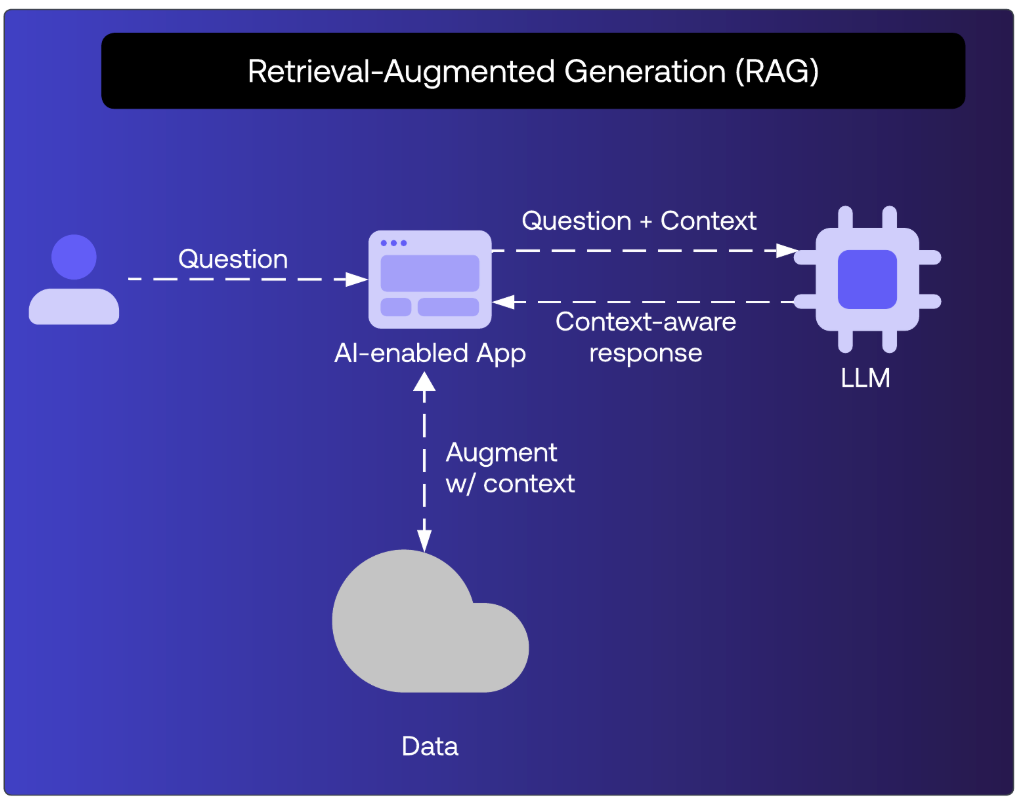

RAG: Not a protocol but a framework

Generative AI models are powerful, but their knowledge is limited to training data. Retrieval-Augmented Generation (RAG) enhances them by retrieving information from live sources before generating a response. This helps ensure outputs are accurate, current, and grounded in enterprise data.

Consider a CFO asking about Q4 budget performance. A model without RAG might provide an outdated or fabricated answer. With RAG, the model queries the company’s actual systems first, then responds with verified numbers.

RAG is critical for enterprise trust, but it also creates challenges around authorization. RAG pipelines often involve vast datasets, and deciding what portion of those datasets an agent should access requires fine-grained, real-time controls.
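One common way to approach this is to filter retrieved documents against the requesting user's entitlements before anything reaches the model. The sketch below assumes a simple group-based access list on each document; the `Document` type, the group names, and the sample data are all hypothetical, and a production system would likely use a dedicated authorization service instead of an in-memory check.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    doc_id: str
    text: str
    allowed_groups: frozenset


def authorized_context(candidates: list[Document], user_groups: frozenset) -> list[Document]:
    """Keep only the retrieved documents the requesting user may read."""
    return [doc for doc in candidates if doc.allowed_groups & user_groups]


def build_prompt(question: str, docs: list[Document]) -> str:
    """Assemble the prompt from authorized documents only."""
    context = "\n".join(f"[{doc.doc_id}] {doc.text}" for doc in docs)
    return f"Context:\n{context}\n\nQuestion: {question}"


# Hypothetical retrieval results for a budget question.
retrieved = [
    Document("budget-q4", "Q4 spend was 4% under plan.", frozenset({"finance"})),
    Document("salaries", "Executive salary bands.", frozenset({"hr"})),
]
visible = authorized_context(retrieved, frozenset({"finance"}))
prompt = build_prompt("How did we perform against the Q4 budget?", visible)
```

Filtering before prompt assembly means the model never sees data the user could not have opened directly, so it cannot leak that data in its answer.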

Bringing it all together: Standards as catalysts and risk multipliers

MCP, A2A, and RAG each expand the capabilities of AI agents. Together, they form the backbone of the agentic AI ecosystem.

Their adoption, however, also amplifies risk. NHIs are multiplying at a pace that current IAM systems cannot manage. Agents act autonomously, unpredictably, and at scale. Without identity at the center, enterprises face security exposures that could undermine the benefits of AI.

How standards create new security considerations

Organizations have long struggled with machine identities like service accounts and API keys. These often become over-privileged or orphaned, creating governance blind spots.

Agentic AI magnifies the problem. Instead of managing hundreds of accounts, enterprises will soon manage thousands of agents, each with its own dynamic permissions. This represents a shift in kind, not only in scale. Current IAM frameworks were not designed for this level of complexity.

Gaps introduced by standards

MCP creates new identity and access management challenges for agents

MCP improves interoperability by giving agents a standardized way to access enterprise tools and systems. At the same time, it introduces new identity challenges. Traditional IAM frameworks struggle to recognize, authenticate, and authorize AI agents whose access patterns are dynamic and task-driven.

An agent that can use MCP to query multiple systems must first be securely onboarded and continuously verified. Without those safeguards, the same efficiency that makes MCP powerful also raises the risk of unauthorized access, rogue behavior, or impersonation by malicious agents.

A2A introduces new risks for inter-agent trust and secure communication

The promise of A2A is seamless collaboration among autonomous agents. The reality is that without strong identity foundations, every new connection becomes a potential vulnerability.

As agents exchange sensitive data and coordinate tasks, attackers can attempt to impersonate trusted agents, intercept messages, or manipulate interactions. A2A threat modeling has already highlighted risks such as malicious servers posing as legitimate companies, stolen agent credentials, and unauthorized escalation of trust. Establishing secure communication and verifiable identity between diverse agents is essential if A2A is to move from concept to enterprise adoption.
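The core idea of verifiable identity between agents can be sketched with message authentication. The example below signs each message with an HMAC over a shared key, so a receiver can detect both tampering and senders who do not hold the key. This is a deliberately simplified stand-in: the key, message fields, and function names are hypothetical, and real deployments would more likely rely on asymmetric signatures, mutual TLS, or signed tokens than on a shared secret.

```python
import hashlib
import hmac
import json


def sign_message(payload: dict, key: bytes) -> str:
    """Sign a canonical JSON encoding of the payload with HMAC-SHA256."""
    canonical = json.dumps(payload, sort_keys=True, separators=(",", ":")).encode()
    return hmac.new(key, canonical, hashlib.sha256).hexdigest()


def verify_message(payload: dict, signature: str, key: bytes) -> bool:
    """Recompute the signature and compare in constant time."""
    return hmac.compare_digest(sign_message(payload, key), signature)


# Hypothetical key and message exchanged between a retailer and a supplier agent.
shared_key = b"demo-key-not-for-production"
message = {"from": "agent:retailer", "to": "agent:supplier", "order_quantity": 50}
signature = sign_message(message, shared_key)

# An attacker who alters the message cannot produce a matching signature.
tampered = dict(message, order_quantity=5000)
```

Here `verify_message(message, signature, shared_key)` succeeds, while the tampered message or a different key fails verification.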

RAG creates new access patterns that raise data accuracy and information disclosure risks

RAG reduces hallucinations by grounding outputs in enterprise data, but doing so introduces complex authorization requirements. An AI system equipped with RAG may need to query across massive data stores.

Deciding which portions of those stores are available to which agents, in which contexts, requires authorization that is far more granular than what most enterprises apply today. Without these controls, agents could expose sensitive information or generate outputs that violate compliance standards. As enterprises scale RAG pipelines, the ability to enforce real-time, fine-grained access decisions becomes a security requirement, not a feature.

Broader challenges for enterprises

As AI agents take on more autonomous actions, enterprises need to know exactly who — or what — initiated each step. Was it a human making a direct request, or an agent acting on behalf of that user hours later?

Without clear attribution, forensic investigations stall and compliance reporting becomes unreliable. This lack of accountability also weakens trust, as organizations struggle to demonstrate control over autonomous decision-making.
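One way to picture clear attribution is an audit record that keeps the acting identity separate from the human who delegated the work, along with the time of each. The field names and values below are hypothetical and only illustrate the shape such a record might take.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional


@dataclass(frozen=True)
class AuditEvent:
    action: str
    initiated_by: str                 # identity that actually performed the step
    on_behalf_of: Optional[str]       # human who delegated the work, if any
    approved_at: Optional[datetime]   # when that human approved it
    occurred_at: datetime             # when the step actually ran

    @property
    def is_delegated(self) -> bool:
        return self.on_behalf_of is not None

    def time_since_approval(self) -> Optional[timedelta]:
        """How long after the human's approval the agent acted."""
        if self.approved_at is None:
            return None
        return self.occurred_at - self.approved_at


# Hypothetical event: an agent acts six hours after the user's request.
approved = datetime(2025, 1, 6, 9, 0, tzinfo=timezone.utc)
event = AuditEvent(
    action="export_customer_report",
    initiated_by="agent:report-bot",
    on_behalf_of="user:alice",
    approved_at=approved,
    occurred_at=approved + timedelta(hours=6),
)
```

With both identities and both timestamps recorded, an investigator can answer "who did this, for whom, and how stale was the approval" from a single event.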

AI systems are particularly vulnerable to techniques such as data poisoning, model inversion, and prompt injection. These attacks are designed to manipulate agent behavior or extract sensitive information.

In an agentic ecosystem, where systems communicate and build on each other’s outputs, a single compromised agent can spread risk across many domains. Protecting against adversarial tactics requires endpoint security and continuous identity-based monitoring of agent behavior.

AI agents often operate on long-running tasks. They may request approval once, then continue executing actions long after the human has moved on. This creates risks like approval fatigue, where users click through repeated prompts without scrutiny, or context loss, where it becomes unclear what exactly the agent is authorized to do. Without a mechanism to reintroduce humans into the loop at the right moments, enterprises risk losing accountability and exposing themselves to unauthorized actions.
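A simple way to reason about "the right moments" is to treat an approval as limited in both scope and age. The sketch below asks for a new human approval whenever an agent attempts an action outside what was approved, or when the approval has grown too old. The `Approval` type, the action names, and the one-hour limit are hypothetical; how the human is actually prompted is out of scope here.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class Approval:
    approved_actions: frozenset   # what the human actually agreed to
    granted_at: datetime
    max_age: timedelta            # how long the approval stays valid


def needs_human_approval(approval: Approval, action: str, now: datetime) -> bool:
    """Return True when the agent must go back to the human before acting."""
    if action not in approval.approved_actions:
        return True  # out of scope: never covered by the original approval
    return now - approval.granted_at > approval.max_age  # stale approval


# Hypothetical approval: read invoices for up to one hour.
granted = datetime(2025, 1, 6, 9, 0, tzinfo=timezone.utc)
approval = Approval(frozenset({"read_invoices"}), granted, timedelta(hours=1))
```

Prompting only on scope changes or expiry, instead of on every step, is one way to reduce approval fatigue while keeping a human accountable for what the agent does.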

The OAuth framework has long enabled users to connect applications by granting cross-platform access. In consumer contexts, this assumption — that the end user owns and controls the connection — makes sense. In the enterprise, it does not.

Enterprises need to authorize these connections by policy, not by individual discretion. As AI scales, the number of cross-application and agent-to-application connections will increase dramatically, compounding a problem that already exists today. Without centralized governance, security teams are left unaware of the sprawling mesh of permissions agents create.

How Okta secures agentic AI

Identity must be the foundation of AI security. Okta’s platform governs both human identities and NHIs, addressing the gaps introduced by MCP, A2A, and RAG.

Okta’s comprehensive AI security framework

Okta can discover, onboard, govern, and monitor AI agents the same way it manages human identities. Policies help ensure visibility, accountability, and alignment with enterprise standards.

Security before, during, and after authentication

- Before authentication: Enterprises gain insight into agent access and apply policy-driven onboarding.

- During authentication: Access decisions are contextual. An agent may have entitlements, but policies determine whether it can act on them in a given scenario.

- After authentication: Continuous monitoring enables ongoing compliance. Okta can revoke tokens, notify SOC teams, or automate remediation if risks are detected.
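The three phases can be illustrated with a small, generic policy object. This is not Okta's API: the class, method names, and the risk threshold are hypothetical and exist only to show how onboarding, contextual authorization, and revocation relate to one another.

```python
from dataclasses import dataclass, field


@dataclass
class AgentPolicy:
    entitlements: dict = field(default_factory=dict)  # agent id -> allowed actions
    revoked: set = field(default_factory=set)         # agents whose access was pulled
    max_risk: float = 0.7                             # hypothetical risk threshold

    def onboard(self, agent_id: str, actions: list) -> None:
        """Before authentication: register the agent and what it may do."""
        self.entitlements[agent_id] = set(actions)

    def authorize(self, agent_id: str, action: str, risk_score: float) -> bool:
        """During authentication: an entitlement alone is not enough."""
        if agent_id in self.revoked:
            return False
        if action not in self.entitlements.get(agent_id, set()):
            return False
        return risk_score <= self.max_risk

    def revoke(self, agent_id: str) -> None:
        """After authentication: cut off access when risk is detected."""
        self.revoked.add(agent_id)


policy = AgentPolicy()
policy.onboard("agent-1", ["read_tickets"])
```

An agent with the `read_tickets` entitlement is allowed at low risk, denied when the contextual risk score exceeds the threshold, and denied outright once it has been revoked.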

Okta capabilities for AI security

Cross-app access (XAA): An extension of OAuth tailored for agentic AI, XAA centralizes oversight of app-to-app and agent-driven connections, allowing enterprises to enforce policies without relying on repetitive user approvals.

Preventing authorization sharing: Through support for OAuth 2.0 Demonstrating Proof of Possession (DPoP) and OAuth 2.0 Mutual-TLS Client Authentication (mTLS), Okta helps ensure that a stolen token cannot be replayed. Tokens are bound to a key or certificate held by the agent that received them, so only that agent can use them.

Okta Token Vault (MCP broker): Developers building AI applications often need to connect to many external services. Token Vault stores user tokens securely and acts as an MCP broker, simplifying integration at scale.

Secure A2A access: With token exchange, Okta supports delegated authorization chains that begin with a human identity and extend through multiple agents, maintaining precision and auditability.

Asynchronous validation: Using the OpenID Connect Client-Initiated Backchannel Authentication (CIBA) flow, Okta enables secure human approvals for long-running agent processes, balancing accountability with efficiency.

Fine-grained authorization for RAG: Okta’s FGA solution applies real-time authorization at the query level, helping ensure AI agents access only the data that users are entitled to view.
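The delegated authorization chain described under secure A2A access can be sketched with the standard OAuth 2.0 Token Exchange parameters (RFC 8693). The code below is a generic illustration, not Okta's API: it builds a form-encoded exchange request in which an agent presents a user's token plus its own, and it reads the nested `act` claim that RFC 8693 defines for recording who is acting for whom. Token values, the audience, the scope, and the identities are hypothetical.

```python
from urllib.parse import parse_qs, urlencode

TOKEN_EXCHANGE_GRANT = "urn:ietf:params:oauth:grant-type:token-exchange"
ACCESS_TOKEN_TYPE = "urn:ietf:params:oauth:token-type:access_token"


def build_token_exchange_body(subject_token: str, actor_token: str,
                              audience: str, scope: str) -> str:
    """Form-encoded body for an RFC 8693 token exchange request."""
    return urlencode({
        "grant_type": TOKEN_EXCHANGE_GRANT,
        "subject_token": subject_token,        # the human the agent acts for
        "subject_token_type": ACCESS_TOKEN_TYPE,
        "actor_token": actor_token,            # the agent doing the acting
        "actor_token_type": ACCESS_TOKEN_TYPE,
        "audience": audience,
        "scope": scope,
    })


def delegation_chain(claims: dict) -> list:
    """Return [subject, earliest actor, ..., current actor] from nested `act` claims.

    In RFC 8693 the outermost `act` is the current actor and nested `act`
    members are prior actors, so the collected actors are reversed.
    """
    actors = []
    act = claims.get("act")
    while act:
        actors.append(act["sub"])
        act = act.get("act")
    return [claims["sub"]] + actors[::-1]


# Hypothetical tokens and claims, for illustration only.
body = build_token_exchange_body("user-token", "agent-token",
                                 "https://inventory.example/api", "inventory:read")
params = parse_qs(body)
claims = {"sub": "user:alice", "act": {"sub": "agent:b", "act": {"sub": "agent:a"}}}
chain = delegation_chain(claims)
```

Reading the chain from a single token is what makes a multi-agent action auditable back to the human who started it.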

Why Okta leads in AI security

Okta is already delivering solutions for AI identity challenges.

- First-mover advantage: AI agent identity management is available now, not on a roadmap.

- Enterprise scale: Trusted by more than 20,000 customers managing billions of monthly logins.

- Standards leadership: Active participation in emerging AI security standards like MCP and A2A.

- Comprehensive platform: Identity, governance, and authorization that address both current and future AI security challenges.

Through new initiatives like Auth0 for AI Agents and XAA, Okta is extending its identity framework to meet the unique requirements of AI agents and their interactions.

Next steps for enterprise leaders

AI standards are evolving rapidly, and security gaps are expanding at the same pace. Enterprises that act now will establish the foundation for secure AI adoption at scale.

Four actions can help organizations begin:

- Assess current AI security posture: Evaluate existing identity management capabilities against AI agent requirements.

- Plan for scale: Design identity architectures that can handle millions of AI agents.

- Partner with Okta: Leverage Okta's comprehensive AI security framework to build secure, scalable AI infrastructure.

- Start small, then scale up: Begin with pilot AI agent deployments using Okta as a foundation, then expand quickly.

The future of AI will belong to enterprises that innovate with confidence. Okta is ready to help secure that future.

Ready to secure your AI infrastructure? Contact Okta to learn how our comprehensive AI security framework can protect your organization's AI investments and enable secure innovation at scale.