Artificial intelligence (AI) is the defining paradigm shift of this generation, changing how organizations and the people within them operate.

To better understand why and how organizations are integrating AI into their operations, and what concerns them going forward, we commissioned an AlphaSights survey of 260 executives from companies of all sizes, in more than a dozen industries, from nine countries. The survey targeted chief technology officers (CTOs), chief information officers (CIOs), chief security officers (CSOs), chief information security officers (CISOs), and vice presidents with similar focus areas to gather informed leadership perspectives on innovation, governance, and security.

The insights they provided highlight the tension between the rush to introduce transformational technology and the need to manage the risks associated with doing so.

Key findings include:

91% of organizations are already using AI agents, with task automation as the most common use case.

On average, respondents report their organizations are using AI agents for nearly five distinct use cases.

84% of leaders cited increased productivity as a realized benefit, well ahead of cost savings (60%).

Data privacy and security risks are the top AI-related concerns, cited by 68% and 60% of respondents, respectively.

85% of leaders regard identity and access management (IAM) as vital to the successful adoption and integration of AI.

Governance of AI is lagging deployment, with only 10% of respondents reporting that their organization has a well-developed strategy or roadmap for managing non-human identities (NHIs).

AI: Today’s business imperative

Two-thirds of leaders regard AI as key to their business strategy

Trends come and go frequently, but truly transformative shifts that cause leaders to rethink their long-term strategies are rarer (think digital, internet, mobile, and cloud). We can comfortably add AI to that list: 43% of respondents reported that adoption of AI is “very critical” to their business strategy, and a further 23% consider it to be “absolutely essential.” In fact, only 4% deem AI to be “not critical.”

Automation and process optimization use cases continue to be most common … for now

Broad adoption of AI can be attributed to its long list of applications and use cases.

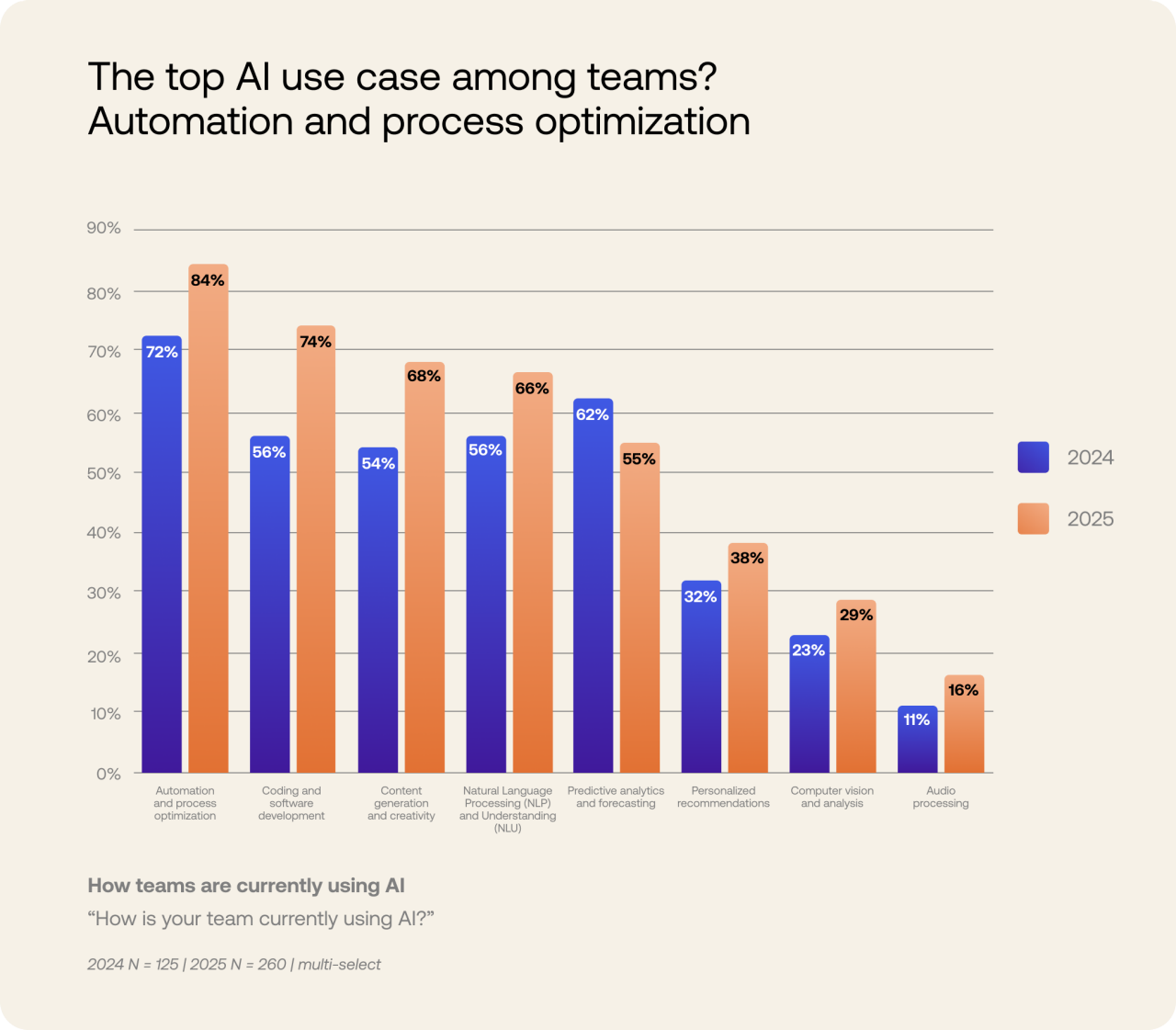

For the second year in a row, automation and process optimization use cases are most common, with 84% of respondents indicating that their organization employs AI in this manner.

But looking beyond the top spot, the survey revealed quite a bit of year-over-year change:

Coding and software development rose from fourth place in 2024 (56%) to second place (74%), on the strength of an 18-percentage-point increase (the largest of any use case).

Content generation and creativity use cases jumped from fifth to third.

Moving in the other direction, predictive analytics and forecasting fell from second to fifth, declining seven percentage points — notably, this is the only use case to experience decreased adoption.

The rise of AI agents

91% of organizations are already using AI agents, with a wide range of reported benefits

At the heart of the AI transformation are AI agents: autonomous software systems that leverage large language models (LLMs), machine learning (ML), and application programming interfaces (APIs) to perform tasks without direct human intervention. Unlike traditional software, they can interpret and respond to natural language inputs, analyze real-time data, and take actions on behalf of users.

More than any other type of non-human identity (NHI), AI agents are not only transforming how people work at a tactical level but also causing companies to change their strategies to survive and thrive in a dynamic, global market.

Blowing past even recent projections, a staggering 91% of respondents reported that their organization is currently using AI agents. Not investigating, not planning, but actually already using.

"In my experience, successful AI adoption and integration require a clear strategy aligned with business outcomes … Avoid generic ‘AI for AI’s sake’ initiatives." — Vice President, Technology, Canada

From automation to innovation: AI agent use cases and benefits

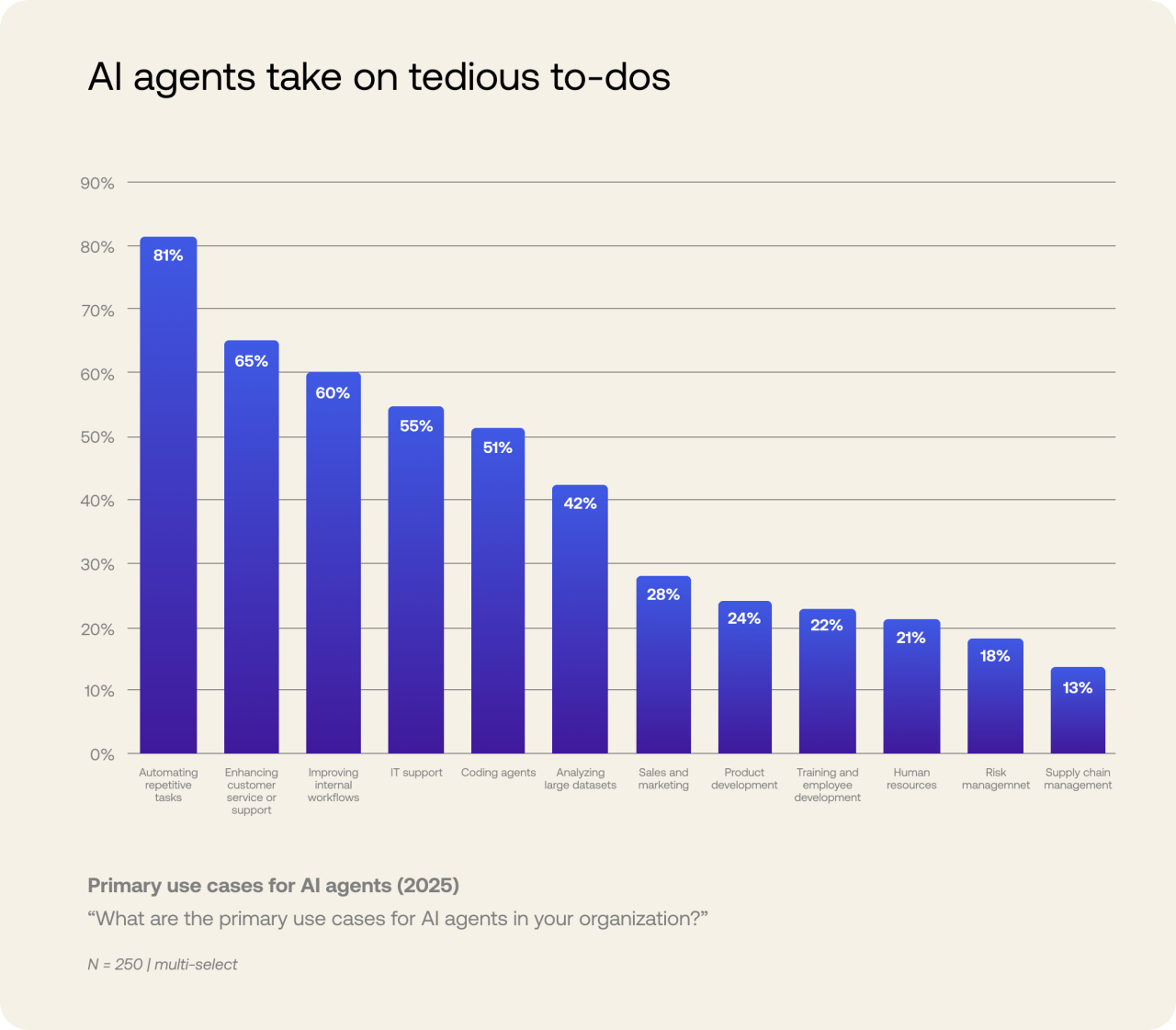

Focusing specifically on AI agents, respondents reported a mean of nearly five (4.8) use cases within their respective organizations. Task automation was cited most frequently (81% of respondents), likely a result of its broad appeal and range of applications (what organization doesn’t have tasks, right?).

Organizations are also deploying agents in support of specific teams and functions, led by customer service or support (65%), IT support (55%), and coding (51%), with several more use cases in the long tail. These targeted use cases indicate that AI agents are spreading across functions within organizations, broadening their impact.

Tangible benefits will continue to drive AI agent rollouts

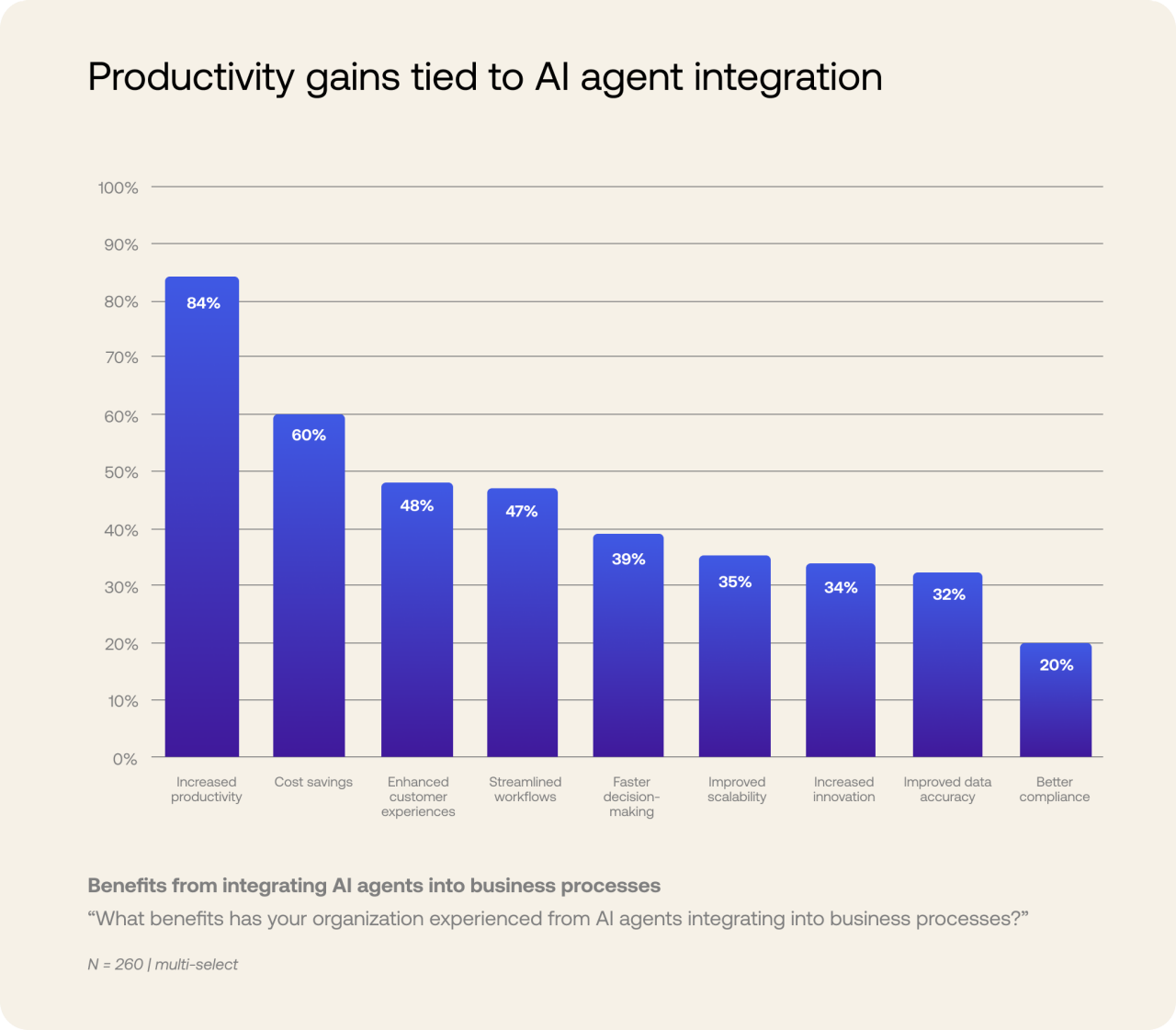

Importantly, leaders report that AI agents are delivering meaningful benefits. Increased productivity (cited by 84% of respondents) and cost savings (60%) lead the way, but these outcomes represent only the tip of the iceberg.

Nearly half of respondents reported that AI agents have enhanced customer experiences and streamlined workflows, while over one-third reported gains in decision-making, scalability, and innovation from AI agent adoption.

“Emerging AI agent trends impacting our industry include autonomous multi-agent collaboration, goal-oriented agents with memory, and tool-using agents that integrate with APIs. These enhance software development, system safety, and automation.” — Vice President, Technology, Japan

Navigating AI risks: Identity, access, and oversight

Data privacy and security risks top leaders’ AI concerns

To fulfill the diverse range of use cases listed previously, AI agents may require access to an organization’s data, systems, and resources.

And the more access they have, the more they can do. The temptation, then — especially during this “Wild West” phase of exploration and experimentation — may be to hand over the keys to the kingdom.

However, increased access brings increased risk: Poorly built, deployed, or managed AI agents can present new attack vectors, including prompt injection and account takeovers. Even absent malicious intent, unexpected agent behaviors can result in breaches, reputational damage, and non-compliance with regulatory requirements.

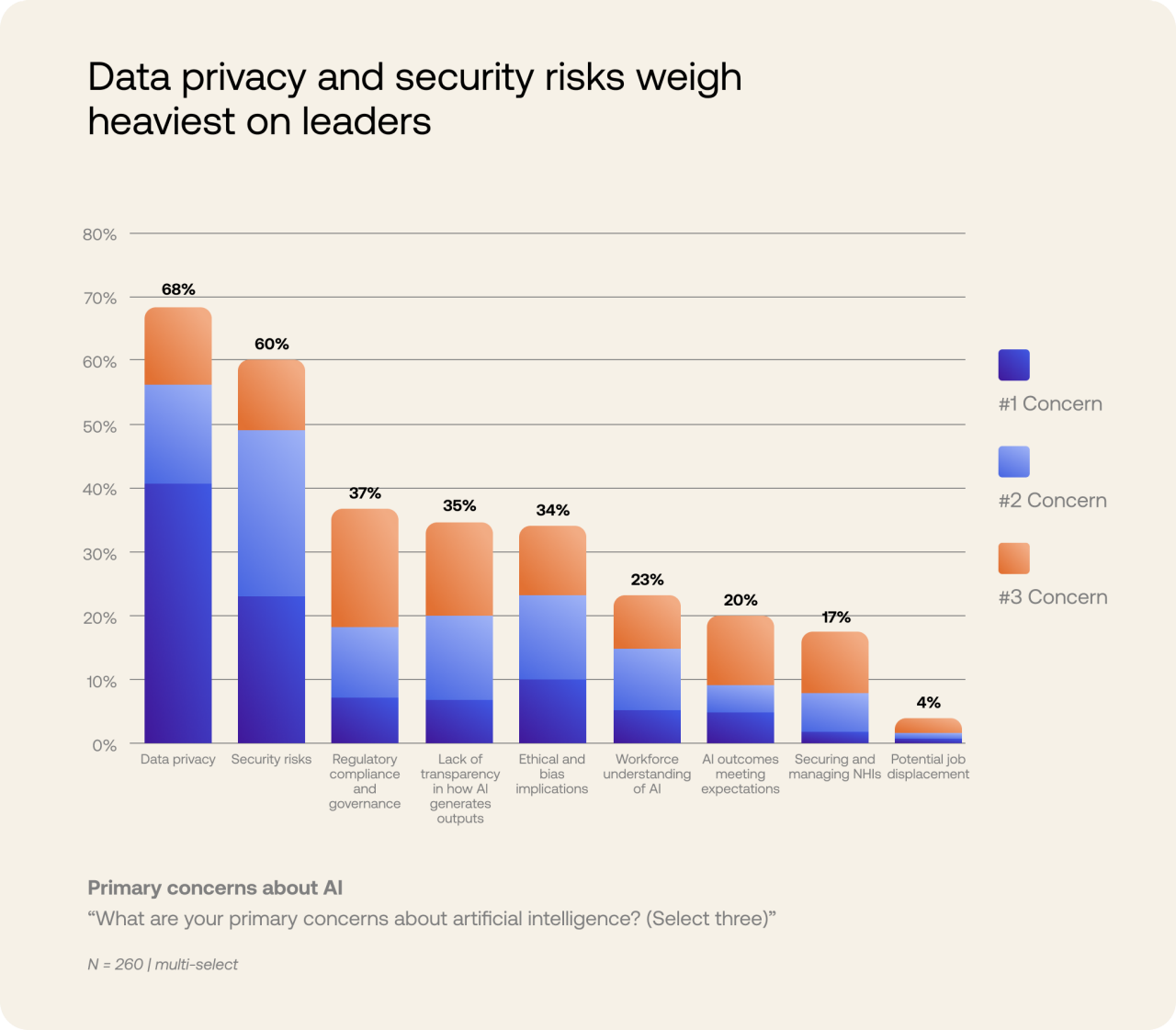

For their part, survey respondents recognize these risks. When asked to select their primary concerns about AI, they ranked data privacy and security risks first and second, respectively, by both severity (selected as their top concern) and frequency (cited most often).

“When you consider the data AI agents have access to — or will have access to in the future — it’s essential to have the same levels of controls as human agents.” — C-Suite, Technology, UK

85% of leaders regard identity and access management (IAM) as vital to the successful adoption and integration of AI

Managing AI agent identity is different from managing human user identities due to key distinctions in definition, lifecycle, and governance.

AI agents:

Lack accountability to a specific person.

Have short, dynamic lifespans requiring rapid provisioning and de-provisioning.

Rely on various non-human authentication methods like API tokens and cryptographic certificates.

Need very specific and granular permissions for limited periods and often access privileged information, making robust control crucial to preventing prolonged escalated access.

Often lack traceable ownership and consistent logging, which complicates post-breach audits and remediation.
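The identity characteristics listed above (short lifespans, token-based authentication, narrow time-limited permissions, and traceable ownership with logging) can be made concrete with a small sketch. The Python below is a minimal illustration under stated assumptions, not Okta's implementation or any product's API: `AgentCredential`, `issue_credential`, `authorize`, and the agent, owner, and scope names are all hypothetical. A real deployment would typically rely on an identity provider issuing standards-based tokens, and would also support explicit revocation, which this sketch omits in favor of a hard expiry.

```python
import secrets
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class AgentCredential:
    """Hypothetical short-lived credential issued to a single AI agent."""
    agent_id: str
    owner: str             # accountable human or team, so the agent is never ownerless
    scopes: frozenset      # narrowly granted permissions, e.g. {"invoices:read"}
    expires_at: datetime   # hard expiry reflecting the agent's short lifespan
    token: str = field(default_factory=lambda: secrets.token_urlsafe(32), repr=False)


def issue_credential(agent_id, owner, scopes, ttl, now):
    """Provision a credential that stops working once `ttl` has elapsed."""
    return AgentCredential(agent_id, owner, frozenset(scopes), now + ttl)


def authorize(cred, scope, now, audit_log):
    """Allow only unexpired, explicitly granted scopes, and log every decision."""
    allowed = now < cred.expires_at and scope in cred.scopes
    audit_log.append({
        "time": now.isoformat(),
        "agent": cred.agent_id,
        "owner": cred.owner,
        "scope": scope,
        "allowed": allowed,
    })
    return allowed


# Usage with hypothetical agent, owner, and scope names.
log = []
t0 = datetime(2025, 5, 1, 12, 0, tzinfo=timezone.utc)
cred = issue_credential("invoice-agent-01", "finance-ops", {"invoices:read"},
                        timedelta(minutes=15), t0)
print(authorize(cred, "invoices:read", t0 + timedelta(minutes=5), log))   # True
print(authorize(cred, "invoices:write", t0 + timedelta(minutes=5), log))  # False: never granted
print(authorize(cred, "invoices:read", t0 + timedelta(minutes=20), log))  # False: expired
```

Because every decision, allowed or denied, is written to the audit log together with the accountable owner, a post-incident review can trace what the agent attempted and who was responsible for it.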

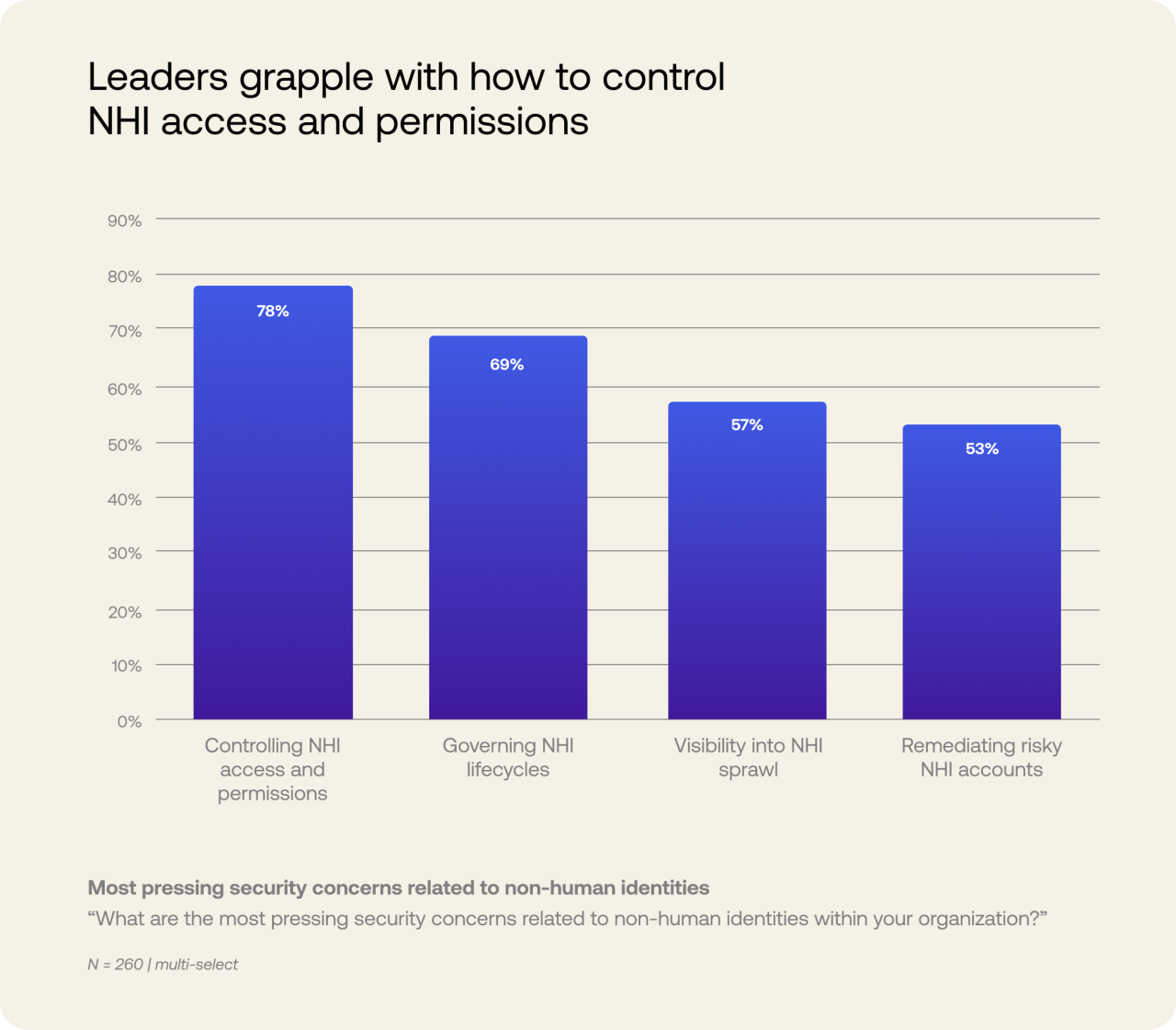

Leaders’ understanding and appreciation of these qualities are reflected in their responses about their organizations’ most pressing NHI-related security concerns. Controlling NHI access and permissions (selected by 78% of respondents) is No. 1, but concerns about lifecycle management (69%), poor visibility (57%), and remediating risky NHI accounts (53%) were also selected by a majority of respondents.

“Governance and access control are critical given the level of access and ability to execute that AI may have.” — C-Suite, Banking and Finance, US

It should come as little surprise, then, that leaders regard identity and access management (IAM) as a vital part of their AI strategy.

Fully 85% of survey respondents (a seven-percentage-point increase over last year) indicated that IAM is either “very important” (52% of respondents) or “important” (33%) to the successful adoption and integration of AI within their organization.

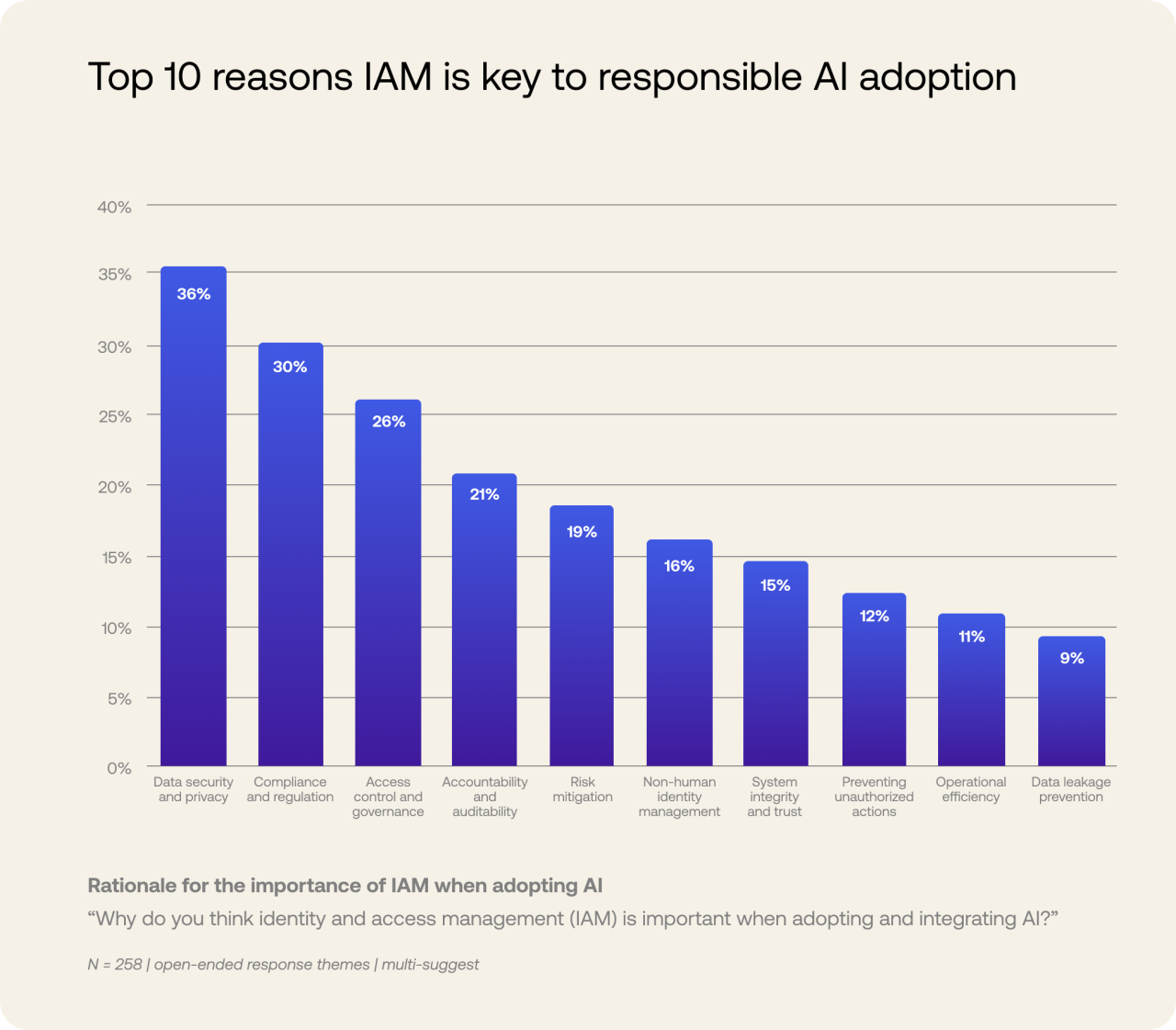

Going one layer deeper, respondents pointed to a long list of reasons why IAM is so crucial. The duo of data security and privacy was the most frequently cited reason, followed by compliance and regulation.

“I’m most concerned about AI systems having too much access without proper controls. If not carefully managed, they can expose sensitive data or be exploited for attacks. Strong oversight and access control are essential to keep AI secure.” — C-Suite, Healthcare and Pharmaceuticals, Australia

The governance gap: Only 10% of organizations have a well-developed strategy for managing NHIs

Continuing in the governance line of thought, leaders’ top two AI agent-related security concerns over the next three years are:

AI governance and oversight (selected by 58% of respondents)

Compliance and regulatory requirements (50%)

But while these are top-of-mind issues, there are strong indications that AI rollouts are outpacing organizations’ ability to keep up with governance, oversight, compliance, and regulatory requirements. For example:

Only 10% of respondents reported that their organization has a well-developed strategy or roadmap for managing NHIs

Only 32% of organizations always treat digital labor forces with the same degree of governance as human workforces

Only 36% of organizations currently have a centralized governance model for AI

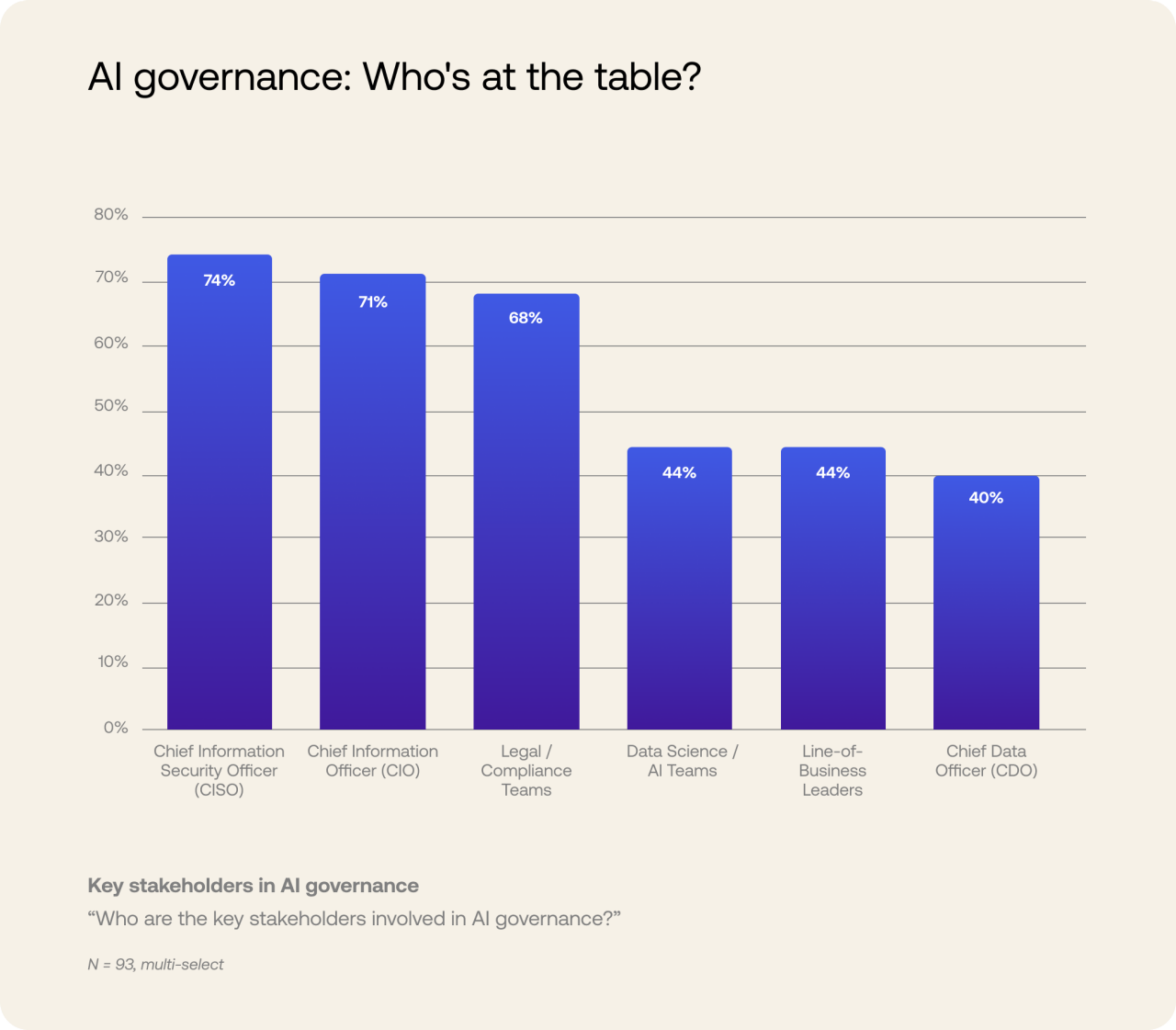

Within the 36% of organizations that have a centralized governance model, the most common key stakeholders in AI governance are CISOs, CIOs, and legal/compliance teams. However, we also see the welcome involvement of data scientists, AI teams, line-of-business leaders, and Chief Data Officers (CDOs), all of whom can bring informed perspectives to this increasingly important and complex subject.

“We are missing a roadmap and are not aligned on how we as a group should implement AI. Some of the team are working as silos, so we do not yet have a cohesive approach to adopting AI.” — VP, Retail, France

The upshot

The identity security landscape has never been more complex, and every AI agent introduced compounds that complexity.

AI agents as a category represent just one form of non-human identity — devices, applications, services, automated processes, and other entities with assigned identities each require an approach to identity and access management with the same rigor.

Leaders must not lose sight of the necessity to establish strong governance and to implement reliable guardrails.

Next steps in your AI and identity journey

As NHIs become increasingly interconnected and autonomous, managing their identities will become the critical foundation for trust and security — both within your organization and in customer interactions.

Here are some suggestions to help you manage identity-related risks on your AI journey:

Get ahead of (or catch up with) governance, and include a range of perspectives. The survey suggests that 90% of organizations don’t have a well-developed strategy or roadmap for managing NHIs, and that 64% lack a centralized governance model for AI. Governance shouldn’t be regarded as a burden, but rather as a way to help safeguard your organization against costly consequences. In addition to executives traditionally involved with governance, risk, and compliance (GRC), strongly consider inviting leaders who are at the vanguard of your AI projects, including data officers, data scientists, and line-of-business leaders.

When exploring or introducing AI into your business and its processes, make sure security is embedded within your identity fabric. Combining visibility, access controls (through the entire lifecycle), and the ability to detect and respond to threats, this approach will enable agents to be deployed more securely and governed as first-class identities within your organization.

Treat your digital labor forces with the same degree of governance as human workforces. Just as contractors, consultants, vendors, partners, and other members of your extended workforce should be subject to the same strict IAM controls as your in-house workforce, so too should the NHIs operating within your IT environment.

And if, like 90% of survey respondents (up from 85% a year ago), your organization uses AI in customer-facing products and services, be sure to apply a secure-by-design approach to user authentication, API access controls, asynchronous workflows, and authorization for Retrieval-Augmented Generation (RAG). All of these factors require different approaches for AI agents (even when working on behalf of customers) than for human users.
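As one illustration of authorization for RAG, the sketch below filters a document corpus by the end user's group memberships before retrieval, so an agent acting on a customer's behalf can only place content that customer is allowed to read into the model's context. It is a minimal sketch under assumptions: `Document`, `retrieve`, `retrieve_for_user`, and the group names are hypothetical, and the keyword-count retriever stands in for a real vector search.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    doc_id: str
    text: str
    allowed_groups: frozenset  # hypothetical: groups permitted to read this document


def retrieve(query, corpus, k=3):
    """Toy retriever: rank documents by how many query words appear in them."""
    words = set(query.lower().split())
    scored = [(sum(w in d.text.lower() for w in words), d) for d in corpus]
    scored = [(score, d) for score, d in scored if score > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for _, d in scored[:k]]


def retrieve_for_user(query, corpus, user_groups, k=3):
    """Authorize before generation: keep only documents the end user may read,
    even though the agent is the one issuing the query."""
    permitted = [d for d in corpus if d.allowed_groups & user_groups]
    return retrieve(query, permitted, k)


corpus = [
    Document("hr-1", "salary bands for 2025", frozenset({"hr"})),
    Document("kb-1", "how to reset your password", frozenset({"employees"})),
    Document("kb-2", "password policy and salary FAQ", frozenset({"employees"})),
]
results = retrieve_for_user("salary password", corpus, frozenset({"employees"}))
print([d.doc_id for d in results])  # ['kb-2', 'kb-1']; hr-1 is never retrieved
```

Filtering before ranking (rather than after) keeps forbidden documents from consuming the top-k slots, and it means the permission check does not depend on how the retriever behaves.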

Methodology

For this study, commissioned by Okta, AlphaSights recruited 260 executives, both C-suite and vice presidents, to take an online double-blinded survey on their sentiments, concerns, and business priorities regarding AI. Recruitment focused on CIOs, CTOs, CISOs, and vice presidents with similar functional areas. By role, 73% of respondents were C-suite, and 27% were vice presidents. Respondents were recruited from Australia (12%), Canada (12%), France (12%), Germany (12%), India (12%), the Netherlands (12%), the UK (12%), the US (12%), and Japan (8%).

These experts were engaged via an initial phone conversation with AlphaSights associates to determine whether or not they had relevant experience; those with applicable experience received the survey. AlphaSights fielded the survey in April and May 2025 and recruited the panel. A third-party consultant provided an analysis of the key findings. The Okta Newsroom team reviewed the findings and produced this article.