AI agents are no longer a thing of the future; they're already here, quietly running in the background of your business with the keys to sensitive resources. They're automating workflows, making decisions, and interacting with confidential data. But as these agents become more powerful and autonomous, how do we secure them?

Our research reveals how widespread this challenge has become: According to Okta's "AI at Work 2025" survey of 260 executives, 91% of organizations are already using AI agents across nearly five distinct use cases on average. Yet only 10% have a well-developed strategy for managing these non-human identities (NHIs). This creates a dangerous disconnect: Organizations are deploying AI agents at an unprecedented scale while leaving governance as an afterthought.

The result: a brewing crisis where powerful autonomous systems operate without proper identity controls, creating exactly the security gaps that attackers eagerly exploit.

New security blind spots

Why are organizations struggling with agentic AI security? Because AI agents break the traditional security mold. Think about how you secure a human employee: They have an identity, a clear role, and a boss who's accountable for their actions.

An AI agent is different.

- Who’s in charge? AI agents often work on behalf of a system, not a person. This makes it hard to trace who's responsible when something goes wrong.

- They come and go in a flash: AI agents are created and destroyed at a rapid pace. If you can’t keep up with this dynamic lifecycle, you'll be left with a trail of unmonitored access points, a perfect invitation for a breach.

- They need just enough access: An agent might need high-level access to complete a specific task, but only for a moment. Granting broad, standing privileges to an agent is like handing over the keys to the entire building.

- They're invisible during a crisis: Without a clear record of an agent's actions, it's nearly impossible to figure out what happened during a security incident or to shut down the threat quickly.

- They’re non-deterministic: It’s hard to predict which data or systems an agent might access, in what order, and for how long.

Simply put, AI agents are your newest opportunity, identity type, and insider threat. If you're using them without a governance model or identity security strategy designed for their unique nature, you're leaving your business exposed.

AI agents belong in your identity security fabric

We’ve been dealing with NHIs for years. Whether it’s an unmanaged service account or outdated credentials, we need ways to find them, lock them down, and govern them.

Traditional identity models fall short here. Securing AI agents requires a new strategy: an identity security fabric built for the age of AI, one that secures every identity type, every use case, and every resource across all environments with consistent, scalable controls.

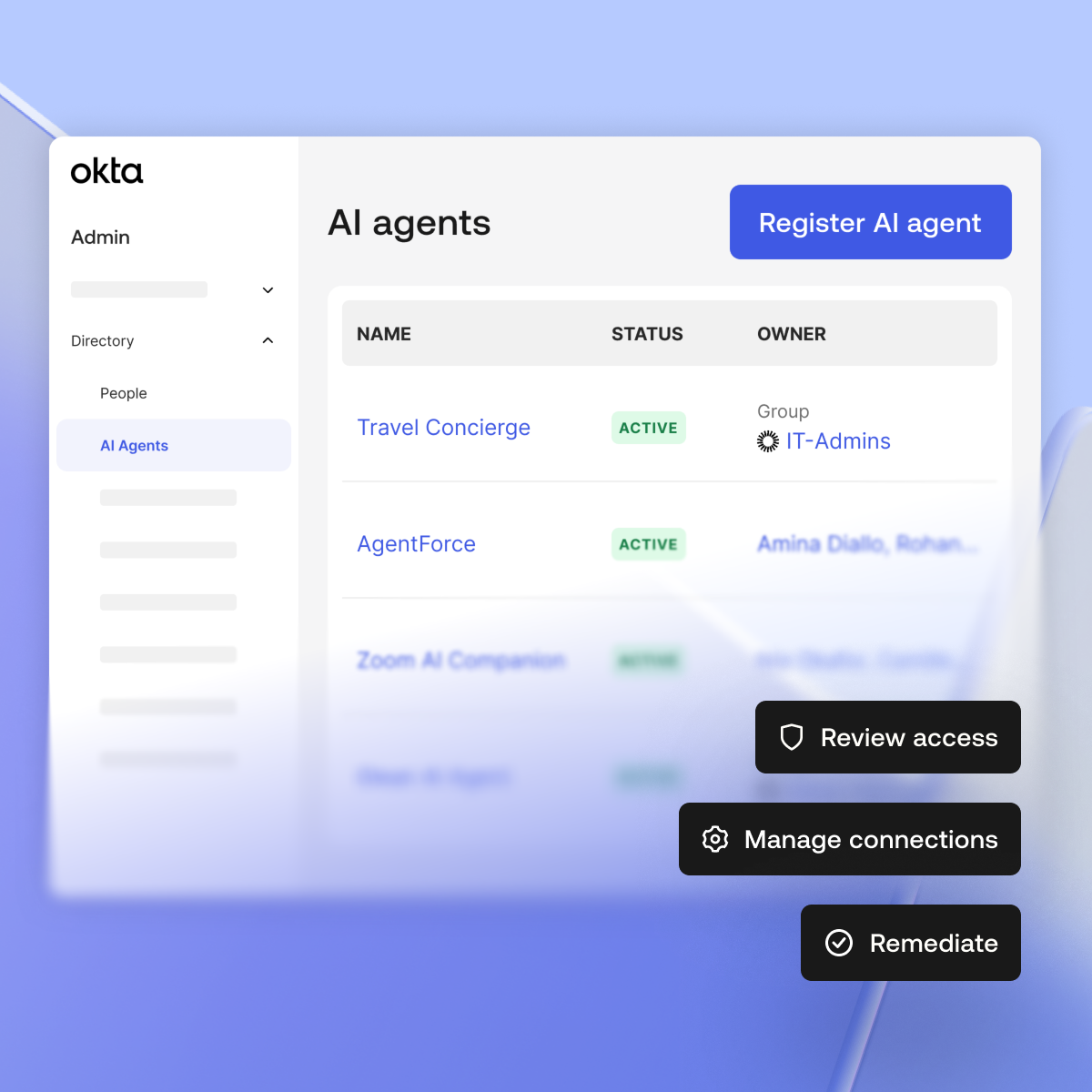

Okta will soon help you bring AI agents into your identity security fabric. Once agents are part of the fabric, you can address these four use cases:

- Detect and discover: Actively find every agent in your environment, understand their patterns, and assess the risk they pose.

- Provision and register: Treat agents as you would human identities. Each one should have a unique identity linked to a human owner who is accountable for its actions.

- Authorize and protect: Agents should operate with only the access they need, for only as long as they need it, through the recently introduced Cross App Access (XAA), a new open protocol that helps standardize how users, AI agents, and applications connect securely.

- Govern and monitor: Continuously monitor agent activity for unusual behavior that could signal a threat, and use automated access requests and periodic certifications to maintain ongoing compliance with a clear audit trail.
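To make three of these use cases more concrete (provisioning with a human owner, time-bound least-privilege access, and an audit trail), here is a minimal, hypothetical sketch in Python. It is not Okta's API and does not implement Cross App Access; the `AgentRegistry` class, its `register`/`grant`/`is_allowed`/`deregister` methods, and scope strings such as `crm:read` are invented purely for illustration, and everything lives in memory.

```python
from __future__ import annotations

import uuid
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone


@dataclass
class Grant:
    """A single scoped permission that expires automatically."""
    scope: str
    expires_at: datetime


@dataclass
class AgentIdentity:
    """A unique agent identity tied to an accountable human owner."""
    agent_id: str
    owner: str
    grants: list[Grant] = field(default_factory=list)


class AgentRegistry:
    """Hypothetical in-memory registry (not a real Okta API)."""

    def __init__(self) -> None:
        self.agents: dict[str, AgentIdentity] = {}
        # Every event is recorded as (timestamp, agent_id, event, detail).
        self.audit_log: list[tuple[datetime, str, str, str]] = []

    @staticmethod
    def _now(now: datetime | None) -> datetime:
        return now if now is not None else datetime.now(timezone.utc)

    def _log(self, now: datetime, agent_id: str, event: str, detail: str) -> None:
        self.audit_log.append((now, agent_id, event, detail))

    def register(self, owner: str, now: datetime | None = None) -> str:
        # Refuse to create an agent without an accountable human owner.
        if not owner:
            raise ValueError("every agent needs a human owner")
        now = self._now(now)
        agent_id = str(uuid.uuid4())
        self.agents[agent_id] = AgentIdentity(agent_id, owner)
        self._log(now, agent_id, "register", f"owner={owner}")
        return agent_id

    def grant(self, agent_id: str, scope: str, ttl: timedelta,
              now: datetime | None = None) -> None:
        # Grants are narrow (one scope) and time-bound (ttl), never standing.
        now = self._now(now)
        self.agents[agent_id].grants.append(Grant(scope, now + ttl))
        self._log(now, agent_id, "grant", f"{scope} ttl={ttl}")

    def is_allowed(self, agent_id: str, scope: str,
                   now: datetime | None = None) -> bool:
        now = self._now(now)
        agent = self.agents.get(agent_id)
        # Unknown or deregistered agents are denied; expired grants don't count.
        allowed = agent is not None and any(
            g.scope == scope and now < g.expires_at for g in agent.grants
        )
        self._log(now, agent_id, "check", f"{scope} -> {'allow' if allowed else 'deny'}")
        return allowed

    def deregister(self, agent_id: str, now: datetime | None = None) -> None:
        # Remove the identity, and with it all grants, when the agent is torn down.
        now = self._now(now)
        self.agents.pop(agent_id, None)
        self._log(now, agent_id, "deregister", "")


# Usage: a five-minute, read-only grant for one agent owned by a named person.
t0 = datetime(2025, 1, 1, tzinfo=timezone.utc)
registry = AgentRegistry()
agent = registry.register(owner="alice@example.com", now=t0)
registry.grant(agent, "crm:read", ttl=timedelta(minutes=5), now=t0)

print(registry.is_allowed(agent, "crm:read", now=t0 + timedelta(minutes=1)))   # True
print(registry.is_allowed(agent, "crm:read", now=t0 + timedelta(minutes=10)))  # False: grant expired
print(registry.is_allowed(agent, "crm:write", now=t0 + timedelta(minutes=1)))  # False: never granted
registry.deregister(agent, now=t0 + timedelta(minutes=11))
print([event for _, _, event, _ in registry.audit_log])
# ['register', 'grant', 'check', 'check', 'check', 'deregister']
```

The design choice worth noting is that nothing in this sketch grants standing access: every permission carries its own expiry, and every check is logged, so the audit trail can answer "what did this agent try to touch, and who owns it?" after the fact. A production system would delegate these decisions to an identity provider rather than a Python dictionary.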

AI security is ultimately an identity security problem. You can’t succeed at one without the other. As AI agents rapidly gain more autonomy and influence, the need for visibility, control, and accountability skyrockets. You cannot secure what you can’t see, manage, or govern.

We will release Okta for AI Agents in early 2026. Learn more about how Okta secures the AI agent lifecycle end-to-end at www.okta.com/secure-ai/.

This blog includes information on a product currently in its early access phase. The features and functionalities discussed are subject to change as we continue our development and refinement processes.

As an early access product, it may contain bugs or incomplete features. The content presented here is for informational purposes only and should not be considered a guarantee of future performance or final features.