AI is the defining platform shift of our generation and is rapidly transforming organizations of every size and industry. At the heart of this transformation are AI agents, increasingly autonomous AI systems that act on behalf of people, teams, and organizations.

Each AI agent requires access to critical systems and sensitive data to achieve its goals, but this often comes with blind spots. For one, many organizations are using outdated authentication methods that create a goldmine for threat actors looking to exploit misconfigured or overprivileged identities.

Recent analysis from Okta Threat Intelligence reveals that current approaches to granting AI agents access to corporate resources are exposing organizations to excessive risk, leading to an alarming buildup of “identity debt.” Below, we’ll share what these findings mean for businesses and what you can do about them.

AI agents are accelerating identity debt

Interactions with AI agents may seem “human,” but agents must be managed as non-human identities (NHIs). AI agents act independently, and the more access they have to data, resources, and feedback, the more capable they become. The flip side of increased access is increased risk, including greater data loss if an agent’s account is compromised.

Identity debt accumulates when static, shared secrets proliferate within a system. These are long-lived credentials known to or stored by more than one user.

Our findings show that agentic AI is accelerating this accumulation. An agent may be given broad permissions, particularly with credentials that are rotated infrequently. In that case it can effectively become a super admin inside your organization, holding the keys to the kingdom and able to enter whenever it wants. If that agent is compromised, threat actors can walk in and out of your systems as often as they’d like.
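To make the pattern concrete, here is a minimal sketch of an inventory audit. It flags the traits described above: credentials that are long-lived (not rotated within a window), shared by more than one holder, or broadly scoped. The `Credential` record, the 90-day window, and treating a `*` scope as super-admin access are all illustrative assumptions. None of them come from Okta’s analysis.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

# Hypothetical rotation window; substitute your own policy.
MAX_AGE = timedelta(days=90)


@dataclass
class Credential:
    """Hypothetical inventory record for one secret used by an agent or service."""
    name: str
    last_rotated: datetime
    holders: set[str]
    scopes: set[str]


def identity_debt_findings(cred: Credential, now: datetime) -> list[str]:
    """Return the reasons this credential adds to identity debt (empty if none)."""
    findings = []
    if now - cred.last_rotated > MAX_AGE:
        findings.append("long-lived: not rotated within the allowed window")
    if len(cred.holders) > 1:
        findings.append("shared: known to more than one identity")
    # Assumption: a wildcard scope stands in for super-admin style access.
    if any(scope == "*" or scope.endswith(":*") for scope in cred.scopes):
        findings.append("overprivileged: wildcard scope")
    return findings


if __name__ == "__main__":
    now = datetime(2025, 6, 1, tzinfo=timezone.utc)
    agent_key = Credential(
        name="copilot-plugin-key",  # hypothetical credential name
        last_rotated=datetime(2024, 11, 1, tzinfo=timezone.utc),
        holders={"agent-a", "dev-laptop"},
        scopes={"tickets:*"},
    )
    for finding in identity_debt_findings(agent_key, now):
        print(f"{agent_key.name}: {finding}")
```

A credential that trips all three checks is exactly the “super admin” scenario above: if it leaks, it is valid for a long time, hard to attribute, and broadly usable.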

In Okta’s 2025 AI at Work report, when asked about major concerns with AI, organization leaders ranked data privacy and security risks as first and second, respectively, by both severity (top concern) and frequency (cited most often). Though all eyes are on securing AI, only 10% of organizations have a well-developed strategy or roadmap for managing NHIs.

So while we’re speedrunning our way to agentic AI (91% of leaders say their organizations are already using AI agents), poorly built, deployed, and managed agents are becoming new attack vectors. A single prompt injection or persona-switching attack can be enough to expose sensitive access tokens that enable lateral movement.

Why many agent systems still fail to externalize access controls — and what that exposes

To understand why credentials are so often poorly protected, we traced the problem back to a lack of externalized access controls.

To see which authentication methods are used to connect security applications to Microsoft Copilot, we analyzed third-party plugin manifests on GitHub. The results reveal a concerning trend in authentication choices:

Basic authentication (20%): This method requires administrators to upload usernames and passwords directly to servers and doesn’t support MFA, leaving accounts vulnerable to credential stuffing and password spray attacks.

Static API keys (75%): While offering some improvements over basic authentication (e.g., configurable expirations, MFA), these long-lived tokens are routinely written to logs and saved in plaintext files on developer workstations, making them prime targets for attackers. Once intercepted, these tokens can provide persistent access.

OAuth 2.0 (5%): Enterprise-grade OAuth 2.0 flows act as externalized access controls that use short-lived access tokens and allow scopes to be set at the service application level, not just the user level.
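The difference between a static key and an OAuth 2.0 access token is easiest to see in code. Below is a minimal sketch of the client credentials grant (RFC 6749, Section 4.4). A service application requests a narrowly scoped token and replaces it shortly before it expires.

Several details are assumptions for illustration:

- The HTTP call to the token endpoint, including client authentication, is injected as a hypothetical `fetch` function so the sketch runs offline.
- The scope string is made up.
- The 60-second refresh margin is arbitrary.

```python
import time
from typing import Callable, Dict, Optional

# Hypothetical transport: posts the form fields to the token endpoint
# (authenticating the client there) and returns the parsed JSON response.
TokenFetcher = Callable[[Dict[str, str]], dict]


class ClientCredentialsToken:
    """Caches a short-lived OAuth 2.0 access token and refreshes it before expiry."""

    def __init__(self, fetch: TokenFetcher, scope: str,
                 refresh_margin: float = 60.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._fetch = fetch
        self._scope = scope
        self._margin = refresh_margin
        self._clock = clock
        self._token: Optional[str] = None
        self._expires_at = 0.0

    def get(self) -> str:
        """Return a usable access token, requesting a new one when needed."""
        if self._token is None or self._clock() >= self._expires_at - self._margin:
            # Client credentials access token request (RFC 6749, Section 4.4.2).
            response = self._fetch({"grant_type": "client_credentials",
                                    "scope": self._scope})
            self._token = response["access_token"]
            # expires_in (seconds) is RECOMMENDED, not required; if it is missing,
            # treat the token as already expired so it is never cached.
            lifetime = float(response.get("expires_in", 0))
            self._expires_at = self._clock() + lifetime
        return self._token


if __name__ == "__main__":
    # Stand-in for a real token endpoint so the sketch runs without a network.
    def fake_fetch(form: Dict[str, str]) -> dict:
        return {"access_token": "short-lived-demo-token", "expires_in": 300}

    tokens = ClientCredentialsToken(fake_fetch, scope="tickets:read")
    print(tokens.get())
```

Each token carries its own expiry and a scope chosen for the service application. A token leaked into a log therefore has a bounded useful life and limited reach, unlike the static keys above.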

Of these standard authentication methods, basic authentication and static API keys carry the higher risk of exposing sensitive data. Yet together they account for 95% of the plugin manifests we examined. The data on how these security applications connect to Microsoft Copilot points to slow adoption of OAuth flows.

As AI agents continue to scale, relying on these weaker authentication methods will only widen the blast radius of any single compromise.

MCP server configurations and the silent spread of static credentials

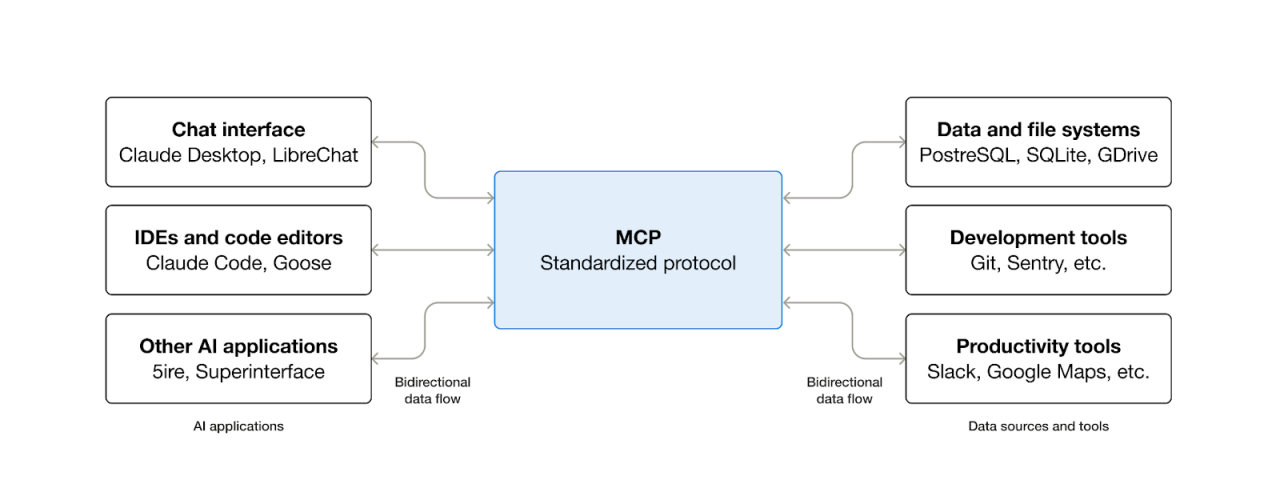

The momentum in agentic AI is now building around the Model Context Protocol (MCP), a standardized interface that connects AI systems to the underlying data they require. MCP’s client-server architecture lets software developers easily “plug and play” AI applications with the data sources and tools that provide them context.

Source: modelcontextprotocol.io


MCP servers require secrets (such as API keys and personal access tokens) to connect to AI models and remote data sources. We analyzed where these credentials are stored within configuration files and found that many were kept in plain text. Because the secrets are static and sit in accessible locations, any user with access to those files could easily retrieve them.
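A quick way to check your own setup is to scan MCP client configuration files for secrets stored as literal values. The sketch below makes three assumptions:

- It expects the JSON layout several MCP clients use: an `mcpServers` object whose entries may carry an `env` map.
- Its secret-name heuristic is a simple guess at which variable names hold credentials.
- It treats `${VAR}`-style values as references, a placeholder convention your client may not support.

Adapt all three to your client. The server names, commands, and token value in the example are hypothetical.

```python
import json
import re

# Heuristic for environment variable names that usually hold credentials (assumption).
SECRET_NAME = re.compile(r"(TOKEN|SECRET|PASSWORD|API_?KEY|ACCESS_?KEY)", re.IGNORECASE)
# Values like "${TICKETS_API_KEY}" are treated as references, not literals (assumption).
PLACEHOLDER = re.compile(r"^\$\{[A-Za-z_][A-Za-z0-9_]*\}$")


def plaintext_secrets(config_text: str) -> list[tuple[str, str]]:
    """Return (server, env var) pairs whose values look like literal credentials."""
    config = json.loads(config_text)
    findings = []
    for server, spec in config.get("mcpServers", {}).items():
        for name, value in spec.get("env", {}).items():
            if (SECRET_NAME.search(name) and isinstance(value, str) and value
                    and not PLACEHOLDER.match(value)):
                findings.append((server, name))
    return findings


example = """
{
  "mcpServers": {
    "github": {
      "command": "example-mcp-server",
      "env": {"GITHUB_PERSONAL_ACCESS_TOKEN": "not-a-real-token"}
    },
    "tickets": {
      "command": "example-tickets-server",
      "env": {"TICKETS_API_KEY": "${TICKETS_API_KEY}", "LOG_LEVEL": "info"}
    }
  }
}
"""
print(plaintext_secrets(example))  # prints [('github', 'GITHUB_PERSONAL_ACCESS_TOKEN')]
```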

Our findings show that this issue extends even to seemingly secure “production-ready” environments. It also affects widely used third-party community integrations that are not endorsed by the service providers. For more specifics on credential storage for MCP servers, check out the full report.

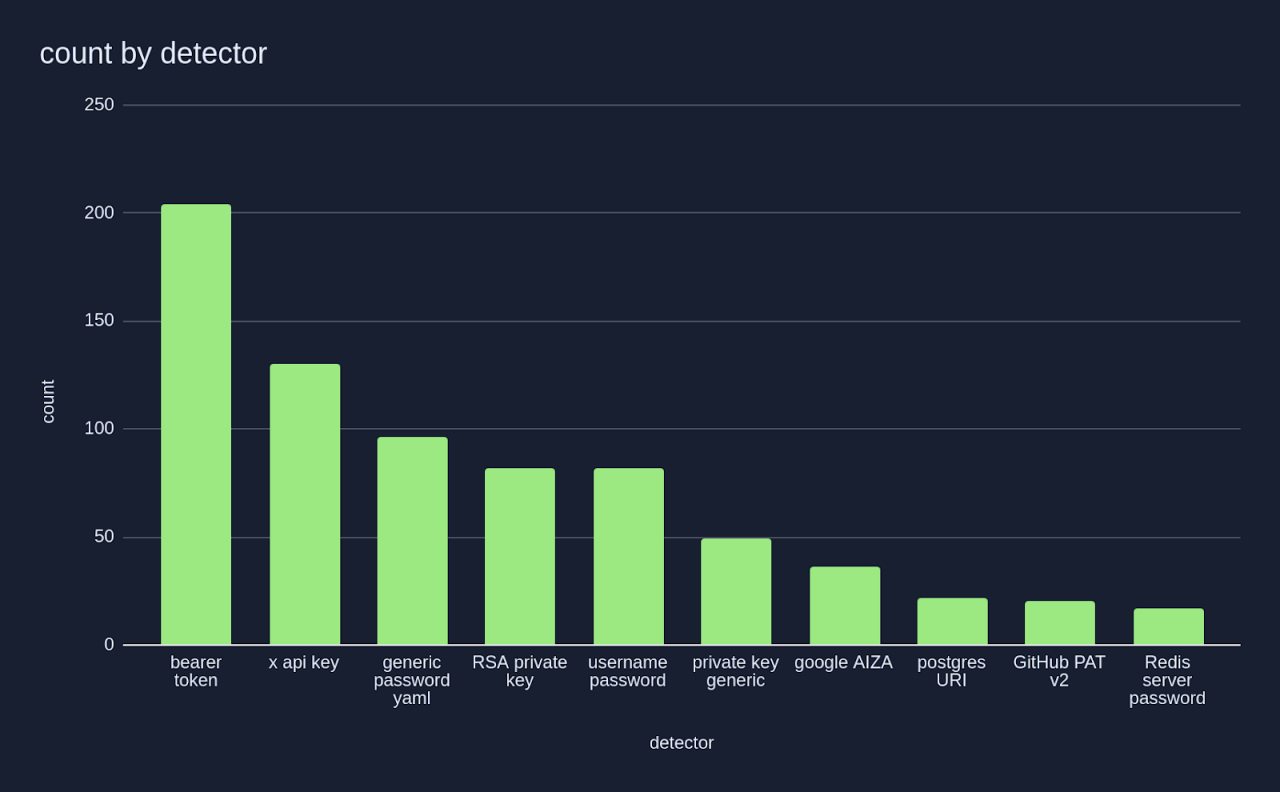

Insecurely stored credentials, such as plaintext API keys, open the floodgates for attackers. Within software repositories, static API keys are prominent among plaintext credentials. Alternative approaches, such as running MCP servers in containers, merely relocate cleartext secrets rather than removing access to them. Threat actors use malware designed to search the specific paths and file types where credentials are stored. With it, they can swiftly find these static API tokens and use them to expand their access to organization-wide resources.
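Defenders can run the same kind of path-and-pattern search before attackers do. The sketch below walks a directory for file types where credentials commonly end up. It matches their contents against two well-known token formats: AWS access key IDs and classic GitHub personal access tokens. Both the file-type list and the patterns are illustrative assumptions. Dedicated secret scanners ship far larger, maintained rule sets.

```python
import re
import sys
from pathlib import Path

# Illustrative token formats (assumption: a tiny subset of real scanner rules).
PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "github_classic_pat": re.compile(r"\bghp_[A-Za-z0-9]{36}\b"),
}
# File names and suffixes where static credentials tend to be stored (assumption).
CANDIDATE_NAMES = {".env"}
CANDIDATE_SUFFIXES = {".json", ".yaml", ".yml", ".toml", ".ini", ".log"}


def scan_tree(root: Path) -> list[tuple[str, str]]:
    """Return (relative path, pattern name) for each token-like match under root."""
    hits = []
    for path in sorted(root.rglob("*")):
        if not path.is_file():
            continue
        if path.name not in CANDIDATE_NAMES and path.suffix not in CANDIDATE_SUFFIXES:
            continue
        text = path.read_text(errors="ignore")
        for label, pattern in PATTERNS.items():
            if pattern.search(text):
                hits.append((path.relative_to(root).as_posix(), label))
    return hits


if __name__ == "__main__":
    target = Path(sys.argv[1]) if len(sys.argv) > 1 else Path(".")
    for rel_path, label in scan_tree(target):
        print(f"{rel_path}: {label}")
```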

Source: “A look into the secrets of MCP”, GitGuardian, April 2025


Considerations for effectively managing the agent identity lifecycle

Identity systems were built to secure human identities, but AI agents don’t operate like people. Managing the agent identity lifecycle effectively requires a different lens. That lens must account for key differences in provisioning, permissions, life span, and visibility:

Programmatic provisioning: Agents are deployed from CI/CD pipelines and need to be provisioned automatically, without human interaction.

Highly specific permissions: Each agent serves a specific use case and requires granular permissions combined with time-limited access to minimize exposure.

Short life spans: Agents are dynamic and short-lived, so provisioning and deprovisioning must happen rapidly to keep pace.

Visibility: Unlike human accounts, agents often lack traceable ownership and consistent logging. Strong lifecycle and identity controls are required to track what actions agents take and when.
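The four differences above can be expressed as a small data model. The following sketch is a hypothetical in-memory registry, not an Okta API. Agents are provisioned by code (for example, from a CI/CD job) and must name an accountable owner. Each agent receives an explicit scope set and a time-to-live. Every authorization decision is written to an audit log. A production system would back this with an identity provider and durable, tamper-evident logging.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone


@dataclass
class AgentIdentity:
    agent_id: str
    owner: str               # accountable human or team (traceable ownership)
    scopes: frozenset[str]   # granular, use-case-specific permissions
    expires_at: datetime     # short life span enforced by the registry


@dataclass
class AgentRegistry:
    agents: dict[str, AgentIdentity] = field(default_factory=dict)
    audit_log: list[tuple[datetime, str, str, str]] = field(default_factory=list)

    def provision(self, agent_id: str, owner: str, scopes: set[str],
                  ttl: timedelta, now: datetime) -> AgentIdentity:
        """Create an identity programmatically, with no human in the loop."""
        if not owner:
            raise ValueError("every agent needs an accountable owner")
        identity = AgentIdentity(agent_id, owner, frozenset(scopes), now + ttl)
        self.agents[agent_id] = identity
        self.audit_log.append((now, agent_id, "provision", ",".join(sorted(scopes))))
        return identity

    def authorize(self, agent_id: str, scope: str, now: datetime) -> bool:
        """Allow an action only if the agent exists, is unexpired, and holds the scope."""
        identity = self.agents.get(agent_id)
        allowed = (identity is not None and now < identity.expires_at
                   and scope in identity.scopes)
        self.audit_log.append((now, agent_id, "allow" if allowed else "deny", scope))
        return allowed

    def deprovision(self, agent_id: str, now: datetime) -> None:
        """Remove the identity as soon as the agent's task is finished."""
        self.agents.pop(agent_id, None)
        self.audit_log.append((now, agent_id, "deprovision", ""))


if __name__ == "__main__":
    t0 = datetime(2025, 1, 1, tzinfo=timezone.utc)
    registry = AgentRegistry()
    registry.provision("triage-bot", "secops-team", {"tickets:read"}, timedelta(hours=1), t0)
    print(registry.authorize("triage-bot", "tickets:read", t0 + timedelta(minutes=5)))
    print(registry.authorize("triage-bot", "tickets:write", t0 + timedelta(minutes=5)))
```

Expiry is checked on every call rather than relying only on cleanup. An agent that is never explicitly deprovisioned still loses access when its time-to-live runs out.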

Another consideration is the growing adoption of AI tools like Gemini Code Assist and platforms like Zapier Central, which are ushering in a no-code builder era. This era has produced an explosion of user-generated agents that are fast, scalable, and often invisible to IT, reshaping the identity landscape.

Recommendations to govern and secure AI identities across environments

As an industry, we must resist the temptation to repurpose outdated authentication methods and opt instead for secure authorization flows built for the AI era.

Building a secure future with agentic AI isn’t just one team’s responsibility — it requires a coordinated effort across the org:

For service providers: Prioritize adopting OAuth 2.1 as the standard authorization model for all MCP servers.

For developers: Restrict static API tokens to non-production environments with limited scopes, use OS-level secret vaults to fetch secrets dynamically at runtime, and use .gitignore to prevent sensitive files from being accidentally committed to version control.

For security teams: Restrict development to secure workstations, require phishing-resistant authentication for all user access, and use dedicated secrets management solutions for production credentials.
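For service providers moving MCP servers to OAuth 2.1, one concrete difference is that the OAuth 2.1 draft builds Proof Key for Code Exchange (PKCE, RFC 7636) into the authorization code flow. The flow works in three steps:

1. The client generates a one-time `code_verifier`.
2. It sends only the verifier’s SHA-256 `code_challenge` with the authorization request.
3. It reveals the verifier when redeeming the code.

As a result, an intercepted authorization code is useless on its own. The sketch below uses only the Python standard library; the function names are illustrative, not part of any SDK.

```python
import base64
import hashlib
import secrets


def make_code_verifier() -> str:
    """Create a high-entropy code_verifier (RFC 7636 allows 43-128 unreserved chars)."""
    # 32 random bytes encode to 43 base64url characters once padding is stripped.
    return base64.urlsafe_b64encode(secrets.token_bytes(32)).rstrip(b"=").decode("ascii")


def s256_challenge(code_verifier: str) -> str:
    """Derive code_challenge = BASE64URL(SHA256(ASCII(code_verifier))), unpadded."""
    digest = hashlib.sha256(code_verifier.encode("ascii")).digest()
    return base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")


if __name__ == "__main__":
    verifier = make_code_verifier()
    challenge = s256_challenge(verifier)
    # Send `challenge` with code_challenge_method=S256 in the authorization request;
    # send `verifier` only in the later token request.
    print(len(verifier), challenge)
```

You can check `s256_challenge` against the worked example in RFC 7636, Appendix B. That example maps the verifier `dBjftJeZ4CVP-mB92K27uhbUJU1p1r_wW1gFWFOEjXk` to the challenge `E9Melhoa2OwvFrEMTJguCHaoeK1t8URWbuGJSstw-cM`.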

For more detailed recommendations, read the full report. To learn how SaaS leaders are adopting the latest identity protocols to secure agentic AI applications, register for the Okta Identity Summit on Securing Agentic AI.